Standardized Testing and the Controversy Surrounding It


Polster, P.P., Detrich, R., & States, J. (2021). Standardized Testing: The Controversy Surrounding It. Oakland, CA: The Wing Institute. https://www.winginstitute.org/student-standardized-tests.

The depth and breadth of the backlash against statewide standardized testing in recent years and the continued attacks on testing from both the left and the right suggest there’s a very real chance that Congress could abandon the ESSA [Every Student Succeeds Act] testing mandate when it next rewrites the law. That, in turn, could mean the end of annual statewide testing in many parts of the country and an end to the transparency and civil rights protections it was designed to produce. (Olson & Jerald, 2020, p. 12)

As is the case with so many other topics these days, the term “standardized testing” has taken on a new meaning and may provoke surprisingly passionate responses from administrators, teachers, parents, and school board members. The purpose of this overview is to provide a general understanding of standardized testing as well as the current controversy surrounding it, particularly in the context of performance-based accountability systems. The overview addresses the following questions related to standardized testing:

- What do stakeholders need to understand about standardized testing?

- What is the history of standardized tests and how have the tests been used?

- What are the reasons for the current controversy over standardized testing?

Missouri is used as an example throughout the overview because the first author has several years of experience supporting improvement efforts in the state; it may not be representative of other states.

Introduction

If you don’t know where you are you’re lost, and it doesn’t matter that you know where you want to go. (Bushell & Baer, 1994, p. 4)

Each month, a group of new Missouri school board members receives training as required by the Missouri Code of State Regulations (2021). The current requirement is that new board members receive 18.5 hours of training on a range of topics, including “evaluation of progress toward goals” and “information on student performance techniques and reporting” (5 CSR 20-400.400, p. 25). For this reason, and because research on effective school boards indicates that a focus on student achievement and outcomes is correlated with positive student achievement results (Lee & Eadens, 2014; Shober & Hartney, 2014), the training spends some time reviewing Missouri’s accountability system and district standardized testing results.

Some new board members find this portion of the training frustrating. They think that measuring a district’s performance shouldn’t be so complicated. And yet it certainly is. Aspirations for the public education system are lofty and nearly as diverse as the student body. Everyone wants schools to produce students who are model citizens and independent thinkers and problem solvers, but who are also college and career ready as well as curious and caring. But how can such goals be measured?

School board members aren’t the only stakeholders struggling with that question. Researchers studying performance-based accountability systems across sectors (Stecher et al., 2010) found agreement on broad goals in education, but not on specific performance elements. In his book on value-added measurement in education, Harris (2011) pointed out that “The process of defining performance measures forces stakeholders to think carefully about what the school is trying to accomplish. The resulting performance measures in turn help to define that mission in concrete terms” (p. 14).

Wainer (1994) asserted that the most critical measure of any educational system is student performance. Yet a common criticism of current test-based accountability systems is that they are overly reliant on standardized tests of student achievement. However, critics of standardized testing have failed to recommend any valid, reliable alternatives to standardized tests for measuring student achievement outcomes or the effectiveness of instruction in a given setting.

Given that schools are faced with an increasingly broad scope of services, the process of identifying agreed-upon outcome measures to support educational accountability systems is extraordinarily complex. In fact, measuring even one student’s academic achievement is complex. Many state accountability systems attempt to translate every student’s standardized achievement test score into an overall or comprehensive measure of the district’s (or school’s) academic performance. After all, that is what statewide annual accountability tests are intended to do. For example, the Missouri Department of Elementary and Secondary Education (2020) describes its accountability system this way:

The Missouri Assessment Program (MAP) is designed to measure how well students acquire the skills and knowledge described in the Missouri Learning Standards (MLS). The assessments yield information on academic achievement at the student, class, school, district, and state levels. This information is used to diagnose individual student strengths and weaknesses in relation to the instruction of the MLS, and to gauge the overall quality of education throughout Missouri [emphasis added].

A summary of ESSA final regulations on assessments under Title I (Every Student Succeeds Act, 2017) makes the argument this way: “High-quality assessments are essential to effectively educating students, measuring progress, and promoting equity. Done well and thoughtfully, they provide critical information for educators, families, the public, and students themselves… Done poorly, in excess, or without clear purpose, however, they take valuable time away from teaching and learning” (para. 1).

Yet, in a recent report, Olson and Jerald (2020) warned that the future of statewide testing was in peril:

But a close analysis of the political landscape of standardized testing makes clear that unless a new generation of tests can play a more meaningful role in classroom instruction, and unless testing proponents can reconvince policymakers and the public that state testing is an important ingredient of school improvement and integral to advancing educational equity, annual state tests and the safeguards they provide are clearly at risk. (p. 2)

Around that same time, The Washington Post ran an article titled “It Looks Like the Beginning of the End of America’s Obsession With Student Standardized Testing” (Strauss, 2020a). For some, the article was cause for celebration, and for others, cause for concern.

Key Questions

What Do Stakeholders Need to Understand About Standardized Testing?

The terms “standardized testing” and “standardized assessment” are often used interchangeably, but generally a test is a specific type of assessment. Kubiszyn and Borich (2016) described tests and specific assessments as tools in a comprehensive assessment process. A test consists of one product or tool to support assessment, whereas the assessment process may include other tools such as an interview and observation. The standardized test, which poses the same questions to all test takers and scores the answers in a consistent or standard way in order to produce results that are as objective, comparable, and reliable as possible, is the type of test used across the country for accountability purposes.

In his primer on standardized testing, Phelps (2007) lauded the value of standardization: “The student scores can be compared either to each other or to a threshold level of achievement. Arguably, the chief benefit of standardized testing is the standardization itself” (p. 4). However, in the years since the No Child Left Behind (NCLB) Act of 2001 enacted punitive measures associated with poor performance on annual state assessments, the annual standardized tests administered by states have often been referred to as high-stakes tests.

The fact that standardized tests have been used in ways that can have serious, often punitive consequences for students, teachers, and administrators has ultimately led to inappropriate practices in schools and, in turn, to much of the controversy surrounding standardized testing. Complaints related to standardized testing include excessive time spent on test preparation; cheating by teachers and administrators; exclusion or reduction of content that is not tested, such as fine arts or physical education; and increased stress on teachers and students.

Standardized tests can be norm referenced, meaning a test-taker’s performance is compared to that of a representative sample of other test takers. However, in the case of state accountability systems, standardized tests are generally standards based or criterion referenced, meaning that a test-taker’s performance is judged against mastery of content standards rather than against the performance of other test takers.
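The difference between the two designs can be illustrated with a small sketch. The scores, the norm sample, and the cut score below are hypothetical values chosen only for illustration; they are not drawn from any actual test. The same raw score is interpreted once against a comparison group (norm referenced) and once against a fixed standard (criterion referenced).

```python
from bisect import bisect_left, bisect_right

def percentile_rank(score, norm_sample):
    """Norm-referenced view: percentage of the norm sample scoring
    below this score, counting ties as half."""
    ordered = sorted(norm_sample)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)

def meets_standard(score, cut_score):
    """Criterion-referenced view: compare the score to a fixed cut score,
    ignoring how other test takers performed."""
    return score >= cut_score

# Hypothetical data, for illustration only.
norm_sample = [310, 325, 340, 355, 360, 372, 388, 395, 410, 430]
student_score = 372
PROFICIENT_CUT = 380  # assumed cut score, not from any real assessment

print(percentile_rank(student_score, norm_sample))    # 55.0
print(meets_standard(student_score, PROFICIENT_CUT))  # False
```

In this sketch the student scores slightly above the middle of the comparison group yet falls short of the standard, which shows why the two kinds of results answer different questions.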

Harris (2011) examined the critical features of standardized tests: design (norm referenced versus criterion referenced); how results are reported (e.g., proficiency, scale scores, percentile rank); and how results are used (low stakes versus high stakes). Other crucial features of tests used for accountability or program evaluation are reliability (the extent to which consistent results can be expected if a test were readministered) and validity (the extent to which a test actually measures what it is intended to measure).
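As a rough illustration of reliability as defined above, one common estimate is the correlation between scores from two administrations of the same test to the same students (test-retest reliability). The sketch below uses hypothetical scores and a hand-written Pearson correlation; it does not describe how any particular state estimates reliability, and validity cannot be established by a calculation like this alone.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for six students on two administrations of one test.
first_administration = [350, 372, 388, 401, 415, 430]
second_administration = [355, 368, 392, 398, 420, 427]

reliability_estimate = pearson_r(first_administration, second_administration)
print(round(reliability_estimate, 3))
```

A value close to 1 indicates that students are ranked nearly the same way on both occasions, which is the consistency the definition of reliability refers to.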

Perhaps the most important consideration when it comes to the quality of any test is validity, which must be judged in light of the purpose for which the test was created. Hamilton et al. (2002) noted, “One of the key points to understand in evaluating and using tests is that tests should be evaluated relative to the purpose for which the scores are used. A test that is valid for one purpose is not necessarily valid for another” (p. xvi).

Some of the current criticism related to standardized tests used for state accountability systems is rooted in a misunderstanding of their purpose. This misunderstanding is often reported in the popular press, as it was in a Forbes article: “There are many problems with the testing regimen, but a big issue for classroom teachers is that the tests do not help the teacher do her job” (Greene, 2019). It is also used as a criticism of testing, as in this case: “High-stakes testing regimes have limits as information tools. The data from high-stakes tests are useful to policymakers for assessing school and system-level performance but insufficient for individual-level accountability and provide meager information for instructional guidance” (Supowitz, 2021).

Statements such as these are confusing and misleading to stakeholders because they misrepresent the purpose of standardized tests used for accountability. The standardized tests used as a critical aspect of state accountability systems are not intended to guide teachers’ daily instruction. Rather, they are designed to measure the performance of the district (or school, depending on the level at which data are examined) and facilitate performance-based accountability. No single test can effectively serve multiple purposes.

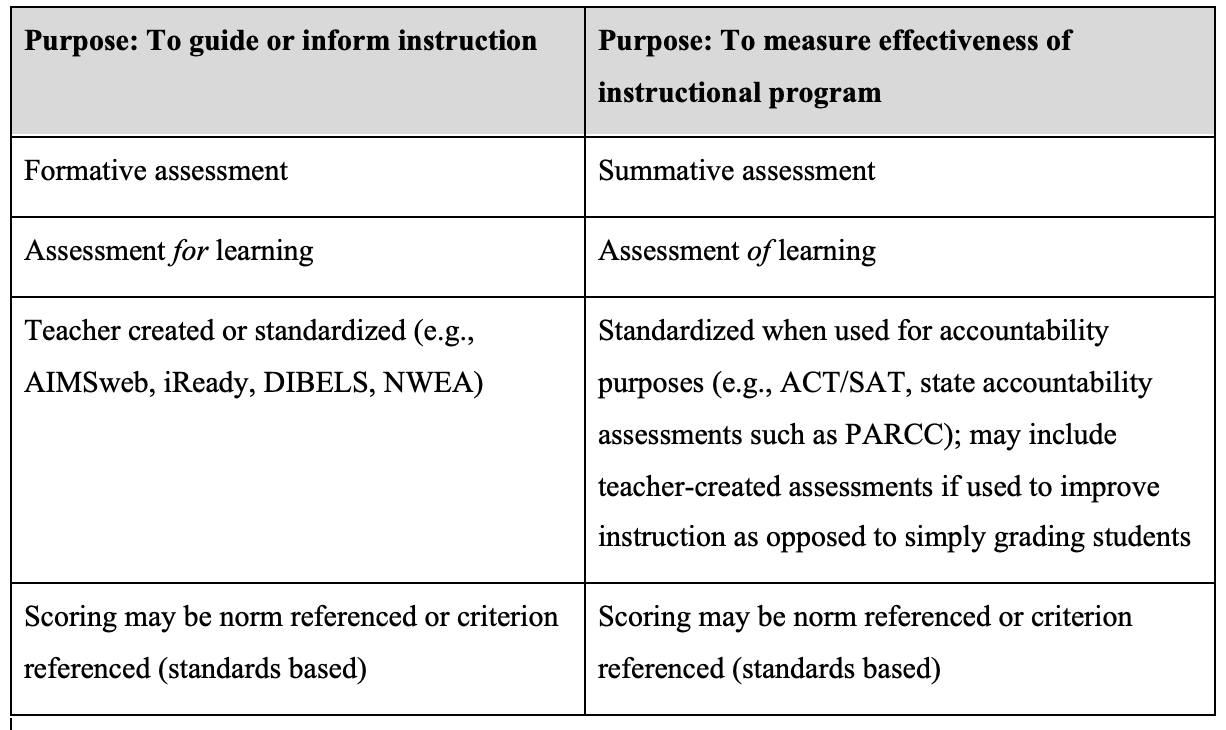

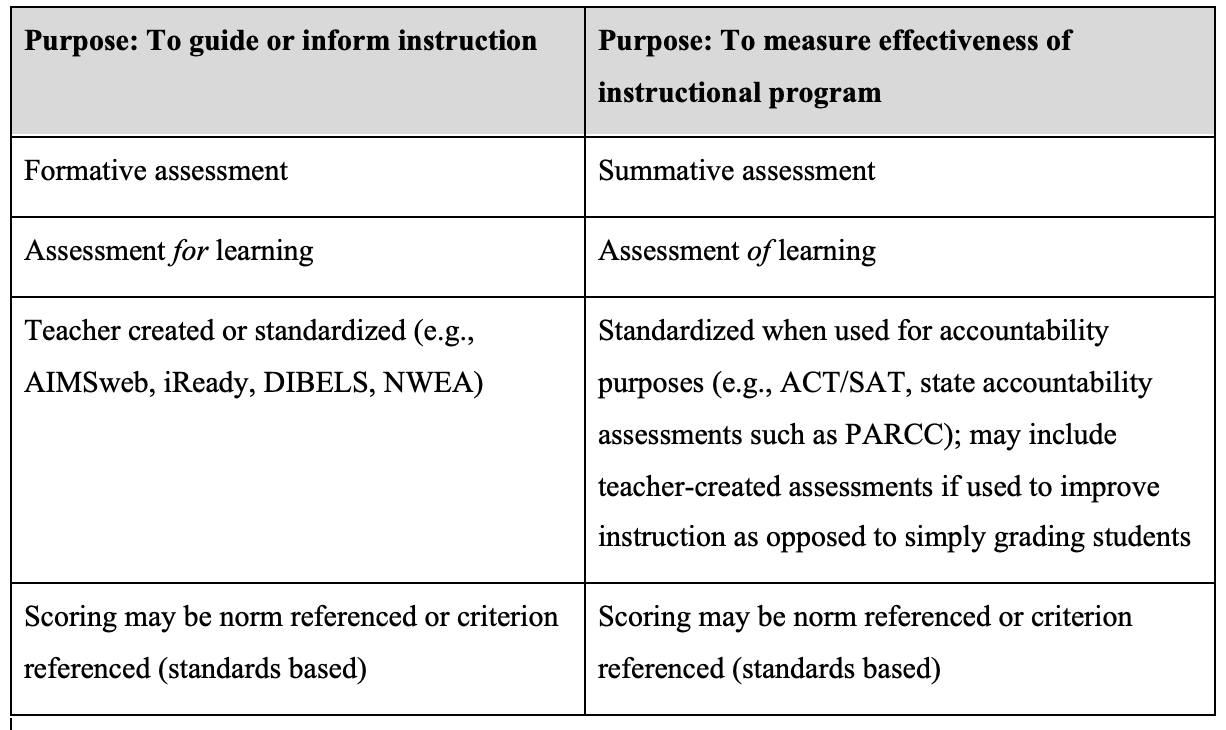

Chingos et al. (2015) stated that “how much students learn in school makes a big difference in their lives, and standardized tests capture valid information on this.” Put another way, annual standardized tests are intended to serve as an assessment of learning. Stiggins et al. (2006) made the following distinction between assessment of learning and assessment for learning:

Assessments of learning are those assessments that happen after learning is supposed to have occurred to determine if it did. ...Assessments for learning happen while learning is still underway. These are the assessments that we conduct throughout teaching and learning to diagnose student needs, plan our next steps in instruction, provide students with feedback they can use to improve the quality of their work, and help students see and feel in control of their journey to success. (p. 31)

The distinction between formative assessment and summative assessment is similar to that between assessment for learning and assessment of learning. Formative assessment is an assessment for learning (States et al., 2017) while summative assessment is an assessment of learning (States et al., 2018). Because summative assessment occurs after instruction has taken place, it is not expected to impact a particular student’s learning. A teacher who administers a brief pretest before beginning a unit of instruction to gauge a given student’s familiarity with the content and then uses the results to plan instruction that better meets the student’s needs is engaging in formative assessment, or assessment for learning. If that same teacher administers an assessment after teaching several lessons and uses the results to grade the student, then he or she is engaging in summative assessment, or assessment of learning. Summative assessment can be expected to improve learning outcomes if the results are used in a manner that improves instructional practices; even so, the improvement may occur only for subsequent cohorts of students, when the teacher teaches the same course again.

Both formative and summative assessments can be standardized. For instance, AIMSweb and iReady are web-based standardized formative assessments intended to be administered throughout the school year. End-of-year state assessments are examples of standardized summative assessments. Likewise, both formative and summative assessments can be teacher created (not standardized). A quick teacher-created pop quiz administered in the middle of a unit as a way of planning the next day’s instruction is an example of a non-standardized formative assessment. A teacher-created end-of-semester exam that will be graded, returned to students, and included on the report card is an example of a non-standardized summative assessment.

Table 1

Key terms related to assessment

Because state accountability tests are now standards based, many states have seen numerous changes in accountability testing in the past 10 years or so. Federal changes to accountability requirements and the subsequent adjustments by states have resulted in changes to tests. The push to get all states to adopt the same standards (Common Core), which most states did, was followed by a backlash against those standards and, in several states, a return to state-specific learning standards. Changes in standards result in changes in tests, and changes in standards and tests result in changes in curriculum. All of these changes in standards, tests, and curriculum have resulted in frustration and stress for teachers, who are expected to adjust their instruction with each change.

Tests aren’t perfect from the outset. The fact that most stakeholders are unfamiliar with the process of test development likely adds to a sense of skepticism. The tests must be calibrated to the learning standards they are intended to measure. This process is defined in each state and includes varying levels of stakeholder input; for example, stakeholders in Missouri can reference the 2019 grade-level assessment technical report (Missouri Department of Elementary and Secondary Education, 2019). Hamilton et al. (2002) provided a general overview of the process as well as evaluation strategies. Ultimately, the true test of annual standardized tests as measures of accountability would be to analyze the results in the context of longer term desired student outcomes, which would require several years of consistent implementation as well as longitudinal follow-up of graduates.

What Is the History of Standardized Tests and How Have the Tests Been Used?

Despite the frequent good intentions and abundant rhetoric about “equal educational opportunity,” schools have rarely taught the children of the poor effectively—and this failure has been systematic, not idiosyncratic. (Tyack, 1974, p. 8)

As was mentioned previously, school board members in Missouri are required to receive training on how to monitor progress toward goals. A look back at the history of standardized assessments indicates that this was the original intention of some early testing proponents. Gallagher (2003) noted that the educational reformer Horace Mann became one of the first proponents of standardized assessment when, in 1845, he persuaded the Boston Public School Committee to allow him to administer a common written exam that he hoped would “provide objective information about the quality of teaching and learning in urban schools, monitor the quality of instruction, and compare schools and teachers within each school” (p. 85). Phelps (2007) reported that Mann used standardized tests for systemwide quality control and to ensure content or performance standards. His approach was adopted by school systems in nearly all U.S. cities.

Another example of the early use of standardized testing to measure instructional outcomes is described in a book by Samuel S. Brooks published in 1922. The author explained that his book was written

to tell in a simple way how standardized tests and scales were used to improve schools in a newly organized rural-school district in New Hampshire. …It is the story of how a corps of faithful, hard-working, but mostly untrained teachers, with the aid of an inexperienced superintendent, put standardized tests and measurements to practical use throughout a school system to the considerable advantage of all concerned. (p. 6)

In addition to measuring school or district performance, standardized tests were also developed to measure individual student cognitive functioning. These efforts led to the release in 1916 of the Stanford-Binet intelligence test, which was followed by various aptitude tests. The aptitude tests were embraced by the military and seen as useful tools. In education, however, the use of IQ tests has led to some questionable practices and outcomes. For example, in California the use of IQ testing for the purpose of special education placement was banned for Black students in a decision referred to as the Larry P. case (Larry P. v. Riles, 1979). In that case, Black male students were overidentified for special education services and those services did not meet their needs. California adjusted the identification process and now incorporates a discrepancy between grade level and academic achievement (Title 5 California Code of Regulations § 3030, 2014). However, it can be argued that any discrepancy model is in fact a “wait to fail” approach and is detrimental to students (Fletcher et al., 2002; Gresham, 2002; Torgeson, 2001). For further discussion of the early misuse of IQ testing in education, see Goslin (1967).

The first voluntary statewide administration of student achievement tests took place in Iowa in 1929 (Gallagher, 2003). In 1965, President Lyndon Johnson recognized significant disparities in educational funding and outcomes throughout the country and among local school districts; he issued the first federal educational testing mandate with the passage of the Elementary and Secondary Education Act (ESEA) (Paul, 2016). The National Assessment of Educational Progress (NAEP), developed in response to this legislation, was intended to help assess the impact of additional education funding. Today, the NAEP is still administered across the country, although the frequency of its administration has been reduced (Loveless, 2016).

ESEA has been renamed with each subsequent reauthorization. Its 2001 reauthorization as No Child Left Behind (NCLB) further addressed equity with a requirement to measure student achievement in reading and math every year from grades 3 through 8 and to report student achievement data in a disaggregated format that allowed for analysis of the persistent achievement gaps the legislation was intended to address. However, with that authorization, performance targets and punitive measures were also established, resulting in a significant backlash against standardized testing. Before the legislation was reauthorized, the Obama administration did away with the punitive measures through a waiver process. ESEA was most recently reauthorized in 2015 as the Every Student Succeeds Act (ESSA).

What Are the Reasons for the Current Controversy Over Standardized Testing?

If our children’s scores on standard tests were getting significantly higher, or if the spread of scores were more equitably distributed by race, class, and gender, or if American kids were further from the bottom on international rankings, these unceasing attacks on standardized tests would subside. (Hirsch, 1996, p. 180)

A great deal of literature (but very little research) criticizing the practice of annual standardized testing and/or advocating for alternative methods of measuring school quality preceded and followed the long overdue reauthorization of ESEA as ESSA in 2015 (e.g., Gabor, 2020; Greene, 2019; Kohn, 2020; Koretz, 2017; Ravitch, 2011; Sawchuk, 2019; Strauss, 2020b). With ESSA, opponents of testing succeeded in establishing a requirement to include a new or different measure of school performance (to be determined by each state in its accountability system) as a way of offsetting some of the weight of standardized academic achievement test results. However, they were unable to remove the annual testing requirement in grades 3 through 8 math and reading, which allows for measures of growth or value-added at the school or district level.

Olson and Jerald (2020) found increasing evidence of anti-testing efforts in state capitals across the country. Between 2014 and 2019, lawmakers in 44 states introduced at least 426 bills and 20 resolutions to reduce the amount of testing, and 36 of those states enacted measures. In 2020, for the first time since 1994, the federally mandated annual standardized tests were not administered due to the closure of schools during the pandemic (Gewertz, 2020). In the months since, with the continued effects of the pandemic and the transition to a new presidential administration, numerous articles about standardized testing indicated that public opinion was leaning heavily against the use of standardized tests in spring 2021 (e.g., Bell-Ellwanger, 2021; Gabor, 2020; Greene, 2020; Olson & Jerald, 2020; Silver & Polikoff, 2020; Strauss, 2020b, 2021). Forgoing the tests again would leave district leaders without objective data to assess the effectiveness of instruction provided during exceedingly difficult circumstances. District leaders also wouldn’t be able to compare the effects of in-person versus virtual learning unless they administered their own standardized tests.

In 2005, Phelps reported, “Public support for high-stakes testing is consistent and long standing, and majority opinion has never flipped from support to opposition” (p. 21). However, more recent evidence regarding public opinion on standardized testing is mixed. The PDK/Gallup Poll (2015) reported that “Testing came in last as a measure of effectiveness with just 14% of public school parents rating test scores as very important. …64% of Americans and a similar proportion of public school parents said there is too much emphasis on standardized testing in the public schools in their community” (p. 8). A valid argument could be made about the dubious wording of some of the questions in the poll, such as this one: “Thinking of the standardized tests your child in public school takes, how confident are you that these tests do a good job measuring how well your child is learning?” Yet a survey by Education Next (2019) indicated that a large majority of the general public (74%, counting those who strongly supported as well as those who somewhat supported) still supported the federal government’s testing requirements. Teachers who were members of unions were the only reported demographic in which a majority (64%, counting those who were strongly opposed as well as those who were somewhat opposed) opposed the testing requirements.

Some of the criticism against the use of standardized testing in accountability systems has been well founded. For example, NCLB’s use of status or simple proficiency did not accurately measure the contribution of schools to student outcomes. The fact that proficiency is so tightly correlated with poverty means that measuring only proficiency may simply be a reflection of a student’s starting point or learning opportunities outside of school rather than the effectiveness of the instructional program.

The shift from simple proficiency to measures of student growth represents a significant improvement in accountability practice and in the use of standardized test scores to measure the performance of schools and districts. However, the methodology has evolved over the past decade and is quite complex, which may make it difficult for those unfamiliar with the methodology to accept. (See Cleaver et al., 2020, and Harris, 2011, for a thorough explanation of value-added measures of school performance.) An important point to remember is that measures of growth and subsequent value-added can only be calculated if tests are administered annually. Those who wish to remove the requirement for annual testing may argue that demographic adjustments can be made to account for background concerns. Chingos and West (2015) explained why this is not a satisfactory alternative:

The reason growth measures outperform demographic adjustments when judging school quality is straightforward: although student characteristics such as family income are strongly correlated with test scores, the correlation is not perfect.

In sum, our results confirm that using average test scores from a single year to judge school quality is unacceptable from a fairness and equity perspective. Using demographic adjustments is an unsatisfying alternative for at least two reasons. In addition to providing less accurate information about the causal impact of schools on their students’ learning, the demographic adjustments implicitly set lower expectations for some groups of students than for others. (paras. 9 and 10)
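The contrast between status (simple proficiency) and growth can be sketched with a toy calculation. Everything below is hypothetical: the two schools, their scores, and the cut score are invented for illustration, and the simple average gain shown here is far cruder than the value-added models described by Harris (2011), which use statistical controls. The sketch only shows why a growth measure needs scores from consecutive years and why it can tell a different story than a single-year proficiency rate.

```python
def proficiency_rate(scores, cut_score):
    """Status measure: share of students at or above the cut score
    in a single year."""
    return sum(s >= cut_score for s in scores) / len(scores)

def mean_growth(prior_scores, current_scores):
    """Simple growth measure: average change in each student's score
    from the prior year to the current year (requires annual testing)."""
    gains = [cur - pri for pri, cur in zip(prior_scores, current_scores)]
    return sum(gains) / len(gains)

CUT_SCORE = 400  # assumed proficiency cut, not from any real test

# Hypothetical School A: students start high and gain little.
school_a_prior = [420, 430, 440, 450]
school_a_current = [422, 431, 443, 452]

# Hypothetical School B: students start low and gain a great deal.
school_b_prior = [340, 350, 365, 380]
school_b_current = [370, 382, 398, 405]

print(proficiency_rate(school_a_current, CUT_SCORE))  # 1.0
print(proficiency_rate(school_b_current, CUT_SCORE))  # 0.25
print(mean_growth(school_a_prior, school_a_current))  # 2.0
print(mean_growth(school_b_prior, school_b_current))  # 30.0
```

Judged by proficiency alone, School A looks far stronger; judged by growth, School B's students learned much more during the year. Without the prior-year scores, the second comparison could not be made at all.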

Additional criticisms that have been leveled against standardized testing are addressed below, by topic area.

Testing vs. assessment (narrow vs. broad). A test can be seen as part of a broader assessment process. Critics of standardized tests (e.g., Kohn, 2020; Koretz, 2017; Ravitch, 2011) are concerned that the tests cannot measure important qualities like citizenship or higher order thinking skills, but instead measure only rote memorization and basic skills. According to Kohn (2020), “The problems with standardized tests aren’t just a function of overusing or attaching high stakes to them. By their very nature, they fail to capture the intellectual proficiencies that matter most.” Kohn did not suggest how those proficiencies might be accurately or adequately measured.

With the ESSA reauthorization, the U.S. Congress invited states to develop their own new measures by requiring the identification of non-academic factors in their accountability systems. Adams et al. (2017) recommended what they called “next generation accountability,” which would change the goal from increasing test scores to encouraging deeper learning. They proposed developing a new system of measuring student learning that emphasized assessing higher order cognitive skills and benchmarking assessments to international standards, and suggested including surveys to assess students’ perceptions of more conceptual qualities of education such as student social climate, student-teacher trust, motivating instruction, and perceived opportunities to engage in deeper learning. The authors described deeper learning opportunities as “cognitive and noncognitive competencies needed for effective participation in the workforce and active citizenship” (p. 33), but they provided no suggestions as to how those competencies might be measured.

Time spent testing and test prepping. A report from the Council of the Great City Schools (Hart, 2015) found that school districts sometimes administer several different assessments to the same students in the same grade levels in order to facilitate more specific data analysis. This type of inefficiency, coupled with the addition of formative assessments (which may be standardized), contributes to the perception that students are over-tested and may well influence public opinion against standardized testing.

The practice of test preparation is a frequent target of critics and for good reason. Any preparation for standardized testing that goes beyond a simple explanation of the format and what students can expect likely represents a misunderstanding of the purpose of the standardized tests used for accountability purposes, which is to evaluate the quality of the instructional program. District leaders who allow any significant amount of test preparation may actually be invalidating their results because they won’t know if the outcomes represent the effects of the instructional program or the test preparation. Both formative assessments and test preparation are under the control of local education officials. They are not required by federal or state accountability systems. Yet to uninformed stakeholders they may be lumped into the same category: over-testing.

Teaching to the test. Critics also claim that standardized testing results in a narrowing of the curriculum because teachers begin to “teach to the test,” that is, focus on tested subjects such as math and reading to the detriment of other subjects such as history or science. Many non-critics take the view that teaching to the test is simply teaching to a set of agreed-upon standards (Crocker, 2005; Popham, 2001).

Good instructional practice dictates that there should be a clear relationship between what is taught and what is tested. Each state has identified agreed-upon standards and designed its accountability tests to assess mastery of those standards. It is up to each local district to design and deliver a curricular program that will promote student mastery of those standards and to assess the effectiveness of that curricular program. Unfortunately, Supowitz (2021) has reported that although high-stakes testing does prompt some changes in instruction, “these changes tend to be superficial adjustments of practice that are often focused on modifications in content coverage and test preparation practices rather than deep improvements to instruction efforts.” This indicates that districts are not using accountability testing results to evaluate the effectiveness of their instructional programming.

Standards. NCLB required annual standardized testing in all states, in math and reading for grades 3 through 8. The legislation set targets for states in terms of the percentage of students scoring “proficient,” although there was no consistent definition of “proficiency” as states had their own standards (Cronin et al., 2007). Each year, the proficiency target increased. Punitive consequences for districts and schools that failed to meet targets increased incrementally and could ultimately result in schools being reconstituted (meaning that administrators and/or other staff were assigned to other buildings). Because NCLB enacted such punitive measures and was associated with an increase in standardized testing nationwide, negative sentiment about the legislation (Jackson, 2015) may well have carried over to negative feelings about standardized testing.

The variance in measuring proficiency is still an issue (Hamlin & Peterson, 2018; National Center for Education Statistics [NCES], 2020); however, the situation seems to be less problematic without the punitive consequences under ESSA.

The controversy over the Common Core State Standards also may have contributed to negative perceptions of the standardized tests designed to measure them. Critics claimed that the standards represented a federal overreach and that the testing companies contracted to design tests were driven by self-interest and profit motive (McArdle, 2014). Advocates of standardized testing would generally support the notion of common standards across states due to the enhanced comparability of data; the ability to examine one district’s data in the context of one or more demographically similar districts adds context and makes the data more meaningful.

Concerns about the origins of learning standards don’t end with the Common Core. Even in states that have returned to their own standards, critics question how standards were developed and, more importantly, how “cut scores” were established. Cut scores are the score thresholds that separate one level of performance from the next. For example, in Missouri, student performance falls into one of four categories: advanced, proficient, basic, and below basic. Interested Missouri residents can peruse a 564-page technical report for answers to their questions about Missouri Assessment Program test development and scoring determinations (Missouri Department of Elementary and Secondary Education, 2019). A similar document was not located for the Partnership for Assessment of Readiness for College and Careers (PARCC) or Smarter Balanced Assessment Consortium (SBAC) assessments, which are both based on the Common Core State Standards.
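
As a rough illustration of how cut scores sort students into performance levels, the Python sketch below uses entirely hypothetical cut scores and scale scores (they are not actual Missouri Assessment Program values, and the function names are made up for this example). It also shows how the same set of scores can yield different proficiency rates when two states set the proficient cut at different points, which is one reason proficiency is hard to compare across states.

```python
from bisect import bisect_right

# Hypothetical cut scores (NOT actual Missouri Assessment Program values):
# the lowest scale score needed to reach basic, proficient, and advanced.
LEVELS = ["below basic", "basic", "proficient", "advanced"]
STATE_A_CUTS = [300, 350, 400]
STATE_B_CUTS = [300, 370, 420]  # a second hypothetical state with higher cuts


def performance_level(scale_score, cut_scores):
    """Return the performance level for a scale score.

    A score equal to a cut score falls in the higher level.
    """
    return LEVELS[bisect_right(cut_scores, scale_score)]


def percent_proficient(scores, cut_scores):
    """Percentage of scores at or above the proficient cut (cut_scores[1])."""
    proficient_cut = cut_scores[1]
    at_or_above = sum(1 for s in scores if s >= proficient_cut)
    return 100 * at_or_above / len(scores)


# Hypothetical scale scores for ten students.
scores = [280, 310, 345, 350, 360, 372, 390, 405, 418, 430]

level_a = performance_level(360, STATE_A_CUTS)
level_b = performance_level(360, STATE_B_CUTS)
rate_a = percent_proficient(scores, STATE_A_CUTS)
rate_b = percent_proficient(scores, STATE_B_CUTS)
print(level_a, level_b, rate_a, rate_b)
```

With these assumed values, a scale score of 360 counts as proficient under the first set of cuts and as basic under the second, and the share of students counted as proficient falls from 70% to 50% even though no student's score has changed.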

The misuse of tests in education. Even well-known testing critic Daniel Koretz (2017) admitted (more than halfway into his book condemning standardized testing) that standardized tests belong in any system of monitoring and accountability. “Many critics of our current system blame standardized tests, but for all the damage that test-based accountability has caused, the problem has not been testing itself but rather the rampant misuse of testing” (p. 219).

Kubiszyn and Borich (2016) described how Waco, Texas, used standardized test scores in 1998 to make promotion decisions, resulting in the percentage of students held back increasing from 2% the previous year to 20%. The authors noted that Chicago public schools had launched a similar program in 1994, substantially increasing the number of students held back.

At times, achievement has been conflated with ability and standardized tests have been a factor in those discussions. Education’s history of tracking students into certain study programs based on estimations of ability is problematic. No parent wants to be told that his or her child’s potential has been established and is fixed. Claiming to measure ability puts a cap on possibility. To some extent, education has implied that achievement test results are indicative of potential, failing to acknowledge that even students who have faced significant adversity and who have not had a rich learning background can achieve at high levels with effective instruction. Parents and other stakeholders have been rightly frustrated by this. See Goslin (1963, 1967) for a thorough discussion on the early use, and misuse, of standardized testing.

Role of standardized tests in accountability systems. Much of the consternation over standardized testing may well be related to the punitive consequences associated with it, as well as concerns about its role in instruction and grade promotion. Perhaps the most telling example of the high stakes for teachers can be seen in a website hosted by the Los Angeles Times (2021) that provides value-added ratings for 11,500 Los Angeles Unified elementary school teachers. Imagine a similar rating system in a small town where everyone knows everyone else. It’s easy to understand how these practices could generate negative opinions around testing and an inordinate amount of stress for teachers. It also may explain why some educators have resorted to cheating.

Critics of standardized testing often point to cheating scandals as evidence that standardized test results are not valid. However, standardized tests include security protocols that make irregularities easier to detect and cheating more difficult. Critics also assert that it is the high-stakes nature of the tests that causes professionals to game the system (Porter-Magee, 2013). A proponent of standardized testing might say that any cheating is a symptom of dysfunction within the system. Education leaders who embrace standardized testing as a tool to support improvement will not cause students or staff to feel the need to cheat. Those leaders will demonstrate that the test results are a reflection of the instructional program by utilizing the results to gauge program effectiveness and not to point fingers or call out individuals. They may also use the results to support or inform teacher development, but in a manner that emphasizes collaborative improvement.

Teachers’ perception of their limited control over student achievement outcomes may contribute to the stress caused by the use of standardized test scores in the teacher evaluation process. Much of the education literature is filled with the factors correlated with low student achievement, such as poverty, trauma, violence, and screen time. This may lead some teachers to feel that they face insurmountable odds and are expected to work miracles. Holding teachers alone responsible for the results of standardized tests would be a violation of Harris’s (2011) “cardinal rule of accountability—hold people accountable for what they can control” (p. 4). We might consider asking, To what extent do teachers have a say in the instructional programs and practices that they are expected to implement? As Carnine (1992) pointed out, “Teachers deserve the same protection as members of other professions—access to tools that have been carefully evaluated to ascertain their effectiveness” (p. 7). If teachers were provided with effective instructional tools and trained in effective instructional practices, then the next appropriate step would be to consider their implementation of those tools and practices. Unfortunately, that has not always been the case.

This gets at a much bigger issue in education: failure to embrace an evidence-based approach. Wiggins (1994) pointed the finger at a failure to be results oriented rather than at the tests themselves. In their discussion of whether or not accountability was working for education, Elmore and Fuhrman (2001) noted that few schools took the bold step of reevaluating their instructional programs. Many educators are still implementing reading practices and tools that are listed as “not recommended” (National Council on Teacher Quality, 2020). If a district dictates instructional tools and practices but fails to evaluate their effectiveness, is it fair to hold teachers accountable for outcomes?

If school and district leaders focused their attention on test results at the system level, they could foster a focus on instructional practices, which are the highest leverage factors teachers have at their disposal to improve student achievement. Yet, instructional materials and practices are often implemented without any empirical evidence of their effectiveness (Carnine, 1992; National Research Council, 2004; Whitehurst, 2004). For example, between 1990 and 2007, the National Science Foundation spent an estimated $93 million on the development, revision, and dissemination of mathematics materials. Yet, a report by the National Research Council (2004) found the effectiveness of individual programs that benefited from that funding could not be determined with a high degree of certainty. Walker (2004) noted that educators did not have a good track record of accessing evidence-based interventions and putting them to effective use in schools.

Conclusion

One of the biggest problems with test-based accountability is that educators do not have the time, resources, or training to interpret and use test data to improve teaching and learning. (Harris, 2011, p. 31)

According to Chingos et al. (2015), “Scores that students receive on standardized tests administered in schools are strongly predictive of later life outcomes” and “information on school performance that does not include information on student learning as measured by standardized tests will be badly compromised” (Student Learning Impacts Long-Term Outcomes section). The current swing of the pendulum of public opinion on standardized testing may result in throwing the baby out with the bathwater and compromise district leaders’ ability to evaluate the effectiveness of instructional programming in order to improve student learning outcomes.

A report from the Council of the Great City Schools (Hart et al., 2015) found no correlation between the amount of mandated testing time and reading and math scores in grades 4 and 8 on NAEP. One would be hard-pressed to find a credible researcher claiming that time spent testing could be expected to improve performance, yet the fact that the authors performed such an analysis indicates that someone thought such a result was possible. Could it be that some stakeholders believed the tests themselves would somehow improve achievement? If so, this may explain some amount of the dissatisfaction that’s been reported. It is best to keep in mind testing critic Diane Ravitch’s clear statement: “The tests are a measure, not a remedy” (Strauss, 2021).

In the 2016–2017 school year, expenditures for public elementary and secondary schools totaled $739 billion (NCES, n.d.). For such an investment, stakeholders expect to see evidence of results. School board members across the nation have a fiduciary responsibility for the sound allocation of district resources. Given that the purpose of public schools is ultimately related to student learning, responsible leaders require a high-quality measure of student learning (one that is objective, valid, and reliable) to facilitate improvement efforts. However, in 2019, the board president in a well-resourced district in Missouri stated that she felt teacher-created tests were more valid than the state tests. Such a statement by a board president suggests a disregard for objectivity, validity, and reliability in assessment. The statement also suggests that this particular board president did not value or desire system-level measurement or comparability across districts.

This brings to mind a statement by Koretz (2017), who acknowledged that the schools his children attended were among the highest scoring in the state and that his neighborhood was filled with parents holding advanced degrees: “I used to joke that students in the schools my kids attended could be locked in the basement all day and would still do fine on tests” (p. 196). In circumstances of privilege, maybe standardized testing isn’t as essential. Perhaps Koretz’s observation is an indication that our education system is perfectly geared toward students who come from privilege. Or maybe it’s an indication that it doesn’t much matter what the instructional program looks like for some children because the majority of them will do just fine, regardless. But even in circumstances of privilege, there will be some students who struggle. Some districts will see this struggle as a sign of some sort of disability while others will take the responsibility to ensure that their instructional program is effective for all students.

Perhaps a shift in the conversation around standardized testing in education away from accountability and toward improvement would be helpful. There is a meaningful difference in these two approaches or mindsets. For many, the term “accountability” leads to an unpleasant feeling of defensiveness while the term “improvement” is not as threatening. If the annual standardized assessments were used as a tool to support improvement in the instructional program rather than as an evaluation of individuals, they would likely arouse fewer negative emotions, not the least of which is stress. However, as Kane (2015) has pointed out, education lacks an infrastructure to identify what works and to build consensus among education leaders about what is working.

The central debate about standardized tests may come down to how they are being used as well as how they are not being used. Much of the critics’ consternation is rooted in the use of tests to hold schools, educators, and sometimes students accountable (making them high stakes). At the same time, little evidence exists to evaluate the extent to which school district leaders use testing results to improve instructional programs.

Citations

Adams, C. M., Ford, T. G., Forsyth, P. B., Ware, J. K., Olsen, J. J., Lepine, J. A., Sr., Barnes, L. L. B., Khojasteh, J., & Mwavita, M. (2017). Next generation accountability: A vision for improvement under ESSA. Learning Policy Institute. https://learningpolicyinstitute.org/sites/default/files/product-files/Next_Generation_Accountability_REPORT.pdf

Bell-Ellwanger, J. (2021, January 5). Analysis: Spring exams are the best shot state leaders have at knowing what’s happening with their students. The 74. https://www.the74million.org/article/analysis-spring-exams-are-the-best-shot-state-leaders-have-at-knowing-whats-happening-with-their-students/

Brooks, S. S. (1922). Improving schools by standardized tests. Houghton Mifflin. https://ia800300.us.archive.org/29/items/improvingschools00broo/improvingschools00broo.pdf

Bushell, D., & Baer, D. M. (1994). Measurably superior instruction means close, continual contact with the relevant outcome data. Revolutionary! In R. Gardner, D. M. Sainato, J. O. Cooper, T. E. Heron, W. L. Heward, J. Eshleman, & T. A. Grossi (Eds.), Behavior analysis in education: Focus on measurably superior instruction (pp. 3–10). Brooks/Cole.

Carnine, D. (1992). Expanding the notion of teachers’ rights: Access to tools that work. Journal of Applied Behavior Analysis, 25(1), 13–19. https://doi.org/10.1901/jaba.1992.25-13

Chingos, M. M., Dynarski, M., Whitehurst, G., & West, M. (2015, January 8). The case for annual testing. Brookings Institution. https://www.brookings.edu/research/the-case-for-annual-testing/

Chingos, M. M., & West, M. R. (2015, January 20). Why annual statewide testing is critical to judging school quality. Brookings Institution. https://www.brookings.edu/research/why-annual-statewide-testing-is-critical-to-judging-school-quality/#:~:text=Why%20Annual%20Statewide%20Testing%20Is%20Critical%20to%20Judging%20School%20Quality,-Matthew%20M.&text=The%20type%20of%20growth%20measure,of%20schools%20on%20student%20achievement

Cleaver, S., Detrich, R., & States, J. (2020). Overview of value-added research in education: Reliability, validity, efficacy, and usefulness. The Wing Institute. https://www.winginstitute.org/staff-value-added

Crocker, L. (2005). Teaching for the test: How and why test preparation is appropriate. In R. Phelps (Ed.), Defending standardized testing (pp. 159–174). Lawrence Erlbaum Associates, Inc.

Cronin, J., Dahlin, M., Adkins, D., & Kingsbury, G. G. (2007). The proficiency illusion. Thomas B. Fordham Institute. https://fordhaminstitute.org/ohio/research/proficiency-illusion

Education Next. (2019). Program on education policy and governance, survey 2019. https://www.educationnext.org/wp-content/uploads/2020/07/2019ednextpoll-1.pdf

Elmore, R., & Fuhrman, S. (2001). Holding schools accountable: Is it working? Phi Delta Kappan, 83(1), 67–70, 72.

Every Student Succeeds Act. (2017). Assessments under Title I, Part A & Title I, Part B: Summary of final regulations. https://www2.ed.gov/policy/elsec/leg/essa/essaassessmentfactsheet1207.pdf

Fletcher, J. M., Lyon, G. R., Barnes, M., Stuebing, K. K., Francis, D. J., Olson, R. K., Shaywitz, S. E., & Shaywitz, B. A. (2002). Classification of learning disabilities: An evidence-based evaluation. In R. Bradley, L. Danielson, & D. Hallahan (Eds.), Identification of learning disabilities: Research to practice (pp. 185–250). Lawrence Erlbaum.

Gabor, A. (2020, December 27). Education secretary’s first task: Curb standardized tests. Pittsburgh Post-Gazette. https://www.post-gazette.com/opinion/2020/12/28/Andrea-Gabor-Education-secretary-s-first-task-Curb-standardized-tests/stories/202012280011

Gallagher, C. (2003). Reconciling a tradition of testing with a new learning paradigm. Educational Psychology Review, 15(1), 83–99.

Gewertz, C. (2020, April 2). It’s official: All states have been excused from statewide testing this year. Education Week. https://www.edweek.org/teaching-learning/its-official-all-states-have-been-excused-from-statewide-testing-this-year/2020/04

Goslin, D. A. (1963). The search for ability: Standardized testing in social perspective. Russell Sage Foundation.

Goslin, D. A. (1967). Teachers and testing. Russell Sage Foundation.

Greene, P. (2019, April 24). Why the big standardized test is useless for teachers. Forbes. https://www.forbes.com/sites/petergreene/2019/04/24/why-the-big-standardized-test-is-useless-for-teachers/?sh=60756d044bc8

Greene, P. (2020, August 14). Schools should scrap the big standardized test this year. Forbes. https://www.forbes.com/sites/petergreene/2020/08/14/schools-should-scrap-the-big-standardized-test-this-year/?sh=71b18e0a79f5

Gresham, F. M. (2002). Responsiveness to intervention: An alternative approach to the identification of learning disabilities. In R. Bradley, L. Danielson, & D. Hallahan (Eds.), Identification of learning disabilities: Research to practice (pp. 467–519). Lawrence Erlbaum.

Hamilton, L., Stecher, B., & Klein, S. (Eds.). (2002). Making sense of test-based accountability in education. RAND Education. https://www.rand.org/content/dam/rand/pubs/monograph_reports/2002/MR1554.pdf

Hamlin, D., & Peterson, P. E. (2018, May 22). Have states maintained high expectations for student performance? Education Next. https://www.educationnext.org/have-states-maintained-high-expectations-student-performance-analysis-2017-proficiency-standards/

Harris, D. N. (2011). Value-added measures in education: What every educator needs to know. Harvard Education Press.

Hart, R., Casserly, M., Uzzell, R., Palacios, M., Corcoran, A., & Spurgeon, L. (2015). Student testing in America’s great city schools: An inventory and preliminary analysis. Council of the Great City Schools. https://www.cgcs.org/cms/lib/DC00001581/Centricity/Domain/87/Testing%20Report.pdf

Hirsch, E. D. (1996). The schools we need and why we don’t have them. Doubleday.

Jackson, A. (2015, March 25). 3 Big ways No Child Left Behind failed. Business Insider. https://www.businessinsider.com/heres-what-no-child-left-behind-got-wrong-2015-3

Kane, T. J. (2015, March 5). Frustrated with the pace of progress in education? Invest in better evidence. Brookings Institution. https://www.brookings.edu/research/frustrated-with-the-pace-of-progress-in-education-invest-in-better-evidence/

Kohn, A. (2020, August 18). The accidental education benefits of Covid-19. Education Week. https://www.edweek.org/teaching-learning/opinion-the-accidental-education-benefits-of-covid-19/2020/08

Koretz, D. (2017). The testing charade: Pretending to make schools better. University of Chicago Press.

Kubiszyn, T., & Borich, G. (2016). Educational testing and measurement: Classroom application and practice (11th ed.). Wiley.

Larry P. v. Riles, 495 F. Supp. 926 (N.D. Cal. 1979). https://law.justia.com/cases/federal/district-courts/FSupp/495/926/2007878/

Lee, D. E., & Eadens, D. W. (2014). The problem: Low-achieving districts and low-performing boards. International Journal of Education Policy and Leadership, 9(3), 1–13. https://files.eric.ed.gov/fulltext/EJ1045888.pdf

Los Angeles Times. (2021). Los Angeles teacher ratings. http://projects.latimes.com/value-added/

Loveless, T. (2016, October 17). The strange case of the disappearing NAEP. Brookings Institution. https://www.brookings.edu/blog/brown-center-chalkboard/2016/10/17/the-strange-case-of-the-disappearing-naep/

McArdle, E. (2014). What happened to the Common Core? Harvard Ed. Magazine. https://www.gse.harvard.edu/news/ed/14/09/what-happened-common-core#:~:text=Common%20Concerns&text=In%20fact%2C%20the%20Core%20sets,albeit%20incentivized%20with%20federal%20money

Missouri Code of State Regulations. (2021). Rules of Department of Elementary and Secondary Education. https://www.sos.mo.gov/cmsimages/adrules/csr/current/5csr/5c20-400.pdf

Missouri Department of Elementary and Secondary Education. (2019). Missouri Assessment Program: Grade level assessments. https://dese.mo.gov/sites/default/files/asmt-gl-2019-tech-report.pdf

Missouri Department of Elementary and Secondary Education. (2020). Missouri Assessment Program. https://dese.mo.gov/special-education/effective-practices/student-assessments/missouri-assessment-program

National Center for Education Statistics. (n.d.). Fast facts: Expenditures. https://nces.ed.gov/fastfacts/display.asp?id=66

National Center for Education Statistics. (2020). Mapping state proficiency standards. https://nces.ed.gov/nationsreportcard/studies/statemapping/

National Council on Teacher Quality. (2020). The four pillars to reading success. https://www.nctq.org/dmsView/the_four_pillars_to_reading_success

National Research Council. (2004). On evaluating curricular effectiveness: Judging the quality of K–12 mathematics evaluations. National Academies Press.

No Child Left Behind Act of 2001. P.L. 107-110, 20 U.S.C. § 6319 (2002).

Olson, L., & Jerald, C. (2020). The big test: The future of statewide standardized assessments. Georgetown University, FutureEd. https://www.future-ed.org/wp-content/uploads/2020/04/TheBigTest_Final-1.pdf

Paul, C. A. (2016). Elementary and Secondary Education Act of 1965. Social Welfare History Project. http://socialwelfare.library.vcu.edu/programs/education/elementary-and-secondary-education-act-of-1965/

PDK/Gallup Poll. (2015). Testing lacks public support. Phi Delta Kappan, 97(1), 8–10. http://www.fsba.org/wp-content/uploads/2014/01/PDK-Gallup-Poll-2015.pdf

Phelps, R. (Ed.). (2005). Defending standardized testing. Lawrence Erlbaum Associates, Inc.

Phelps, R. (2007). Standardized testing. Peter Lang.

Popham, W. (2001). Teaching to the test? Educational Leadership, 58(6), 16–20.

Porter-Magee, K. (2013, February 26). Trust but verify: The real lessons of Campbell’s Law. Thomas B. Fordham Institute. https://fordhaminstitute.org/ohio/commentary/trust-verify-real-lessons-campbells-law

Ravitch, D. (2011, April 28). Standardized testing undermines teaching [Interview]. National Public Radio. https://www.npr.org/2011/04/28/135142895/ravitch-standardized-testing-undermines-teaching

Sawchuk, S. (2019, January 8). Is it time to kill annual testing? Education Week. https://www.edweek.org/teaching-learning/is-it-time-to-kill-annual-testing/2019/01

Shober, A. F., & Hartney, M. T. (2014, March 25). Does school board leadership matter? Thomas B. Fordham Institute. https://fordhaminstitute.org/national/research/does-school-board-leadership-matter

Silver, D., & Polikoff, M. (2020, November 16). Getting testy about testing: K–12 parents support canceling standardized testing this spring. That might not be a good idea. The 74. https://www.the74million.org/article/silver-polikoff-getting-testy-about-testing-k-12-parents-support-canceling-standardized-testing-this-spring-that-might-not-be-a-good-idea/

States, J., Detrich, R., & Keyworth, R. (2017). Overview of formative assessment. The Wing Institute. http://www.winginstitute.org/student-formative-assessment

States, J., Detrich, R., & Keyworth, R. (2018). Overview of summative assessment. The Wing Institute. https://www.winginstitute.org/assessment-summative

Stecher, B., Camm, F., Damberg, C. L., Hamilton, L. S., Mullen, K. J., Nelson, C., Sorensen, P., Wachs, M., Yoh, A., Zellman, G. L., & Leuschner, K. J. (2010). Are performance-based accountability systems effective? Evidence from five sectors. RAND Corporation. https://www.rand.org/pubs/research_briefs/RB9549.html

Stiggins, R. J., Arter, J. A., Chappuis, J., & Chappuis, S. (2006). Classroom assessment for student learning: Doing it right—using it well. ETS Assessment Training Institute.

Strauss, V. (2020a, June 21). It looks like the beginning of the end of America’s obsession with student standardized testing. The Washington Post. https://www.washingtonpost.com/education/2020/06/21/it-looks-like-beginning-end-americas-obsession-with-student-standardized-tests/

Strauss, V. (2020b, December 30). Calls are growing for Biden to do what DeVos did: Let states skip annual standardized tests this spring. The Washington Post. https://www.washingtonpost.com/education/2020/12/30/calls-are-growing-biden-do-what-devos-did-let-states-skip-annual-standardized-tests-this-spring/

Strauss, V. (2021, February 1). What you need to know about standardized testing. The Washington Post. https://www.washingtonpost.com/education/2021/02/01/need-to-know-about-standardized-testing/

Supowitz, J. (2021). Is high-stakes testing working? University of Pennsylvania, Graduate School of Education. https://www.gse.upenn.edu/review/feature/supovitz

Title 5 California Code of Regulations § 3030. Eligibility criteria. (2014). https://www.casponline.org/pdfs/pdfs/Title 5 Regs, CCR update.pdf

Torgesen, J. K., Alexander, A. W., Wagner, R. K., Rashotte, C. A., Voeller, K. K., & Conway, T. (2001). Intensive remedial instruction for children with severe reading disabilities: Immediate and long-term outcomes from two instructional approaches. Journal of Learning Disabilities, 34(1), 33–58, 78.

Tyack, D. (1974). The one best system. Harvard University Press.

Wainer, H. (1994). On the academic performance of New Jersey’s public school children: Fourth and eighth grade mathematics in 1992. Education Policy Analysis Archives, 2(10), 1–17.

Walker, H. M. (2004). Commentary: Use of evidence-based intervention in schools: Where we've been, where we are, and where we need to go. School Psychology Review, 33(3), 398–407.

Whitehurst, G. (2004). Making education evidence-based: Premises, principles, pragmatics, and politics. IPR Distinguished Public Policy Lecture Series 2003–04. Institute for Policy Research Northwestern University. https://ies.ed.gov/director/pdf/2004_04_26.pdf

Wiggins, G. (1994). Assessing student performance: Exploring the purpose and limits of testing. Jossey-Bass.

TITLE

SYNOPSIS

CITATION

LINK

Is the three-term contingency trial a predictor of effective instruction?

Two experiments are reported which test the effect of increased three-term contingency trials on students' correct and incorrect math responses. The results warrant further research to test whether or not rates of presentation of three-term contingency trials are predictors of effective instruction.

Albers, A. E., & Greer, R. D. (1991). Is the three-term contingency trial a predictor of effective instruction? Journal of Behavioral Education, 1(3), 337-354.

Effects of Acceptability on Teachers' Implementation of Curriculum-Based Measurement and Student Achievement in Mathematics Computation

The authors investigated the hypothesis that treatment acceptability influences teachers' use of a formative evaluation system (curriculum-based measurement) and, relatedly, the amount of gain effected in math for their students.

Allinder, R. M., & Oats, R. G. (1997). Effects of acceptability on teachers' implementation of curriculum-based measurement and student achievement in mathematics computation. Remedial and Special Education, 18(2), 113-120.

Standards for educational and psychological testing

The “Standards for Educational and Psychological Testing” were approved as APA policy by the APA Council of Representatives in August 2013.

American Educational Research Association, American Psychological Association, Joint Committee on Standards for Educational, Psychological Testing (US), & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. American Educational Research Association.

High-Stakes Testing, Uncertainty, and Student Learning

This study evaluated the relationship between scores on high-stakes tests and scores on other measures of learning, such as NAEP and SAT scores. In general, there was no increase in student learning as a function of high-stakes testing.

Amrein, A. L., & Berliner, D. C. (2002). High-Stakes Testing, Uncertainty, and Student Learning. Education Policy Analysis Archives.

The Impact of High-Stakes Tests on Student Academic Performance

The purpose of this study is to assess whether academic achievement in fact increases after the introduction of high-stakes tests. The first objective of this study is to assess whether academic achievement has improved since the introduction of high-stakes testing policies in the 27 states with the highest stakes written into their grade 1-8 testing policies.

Amrein-Beardsley, A., & Berliner, D. C. (2002). The Impact of High-Stakes Tests on Student Academic Performance.

Beyond Standardized Testing: Assessing Authentic Academic Achievement in the Secondary School.

This book was designed as an assessment of standardized testing and its alternatives at the secondary school level.

Archbald, D. A., & Newmann, F. M. (1988). Beyond standardized testing: Assessing authentic academic achievement in the secondary school.

Effectiveness of frequent testing over achievement: A meta analysis study

Through a meta-analysis of 78 studies, this study aims to determine the overall effect size for testing at different frequency levels and to identify other study characteristics related to the effectiveness of frequent testing.

Başol, G., & Johanson, G. (2009). Effectiveness of frequent testing over achievement: A meta analysis study. Journal of Human Sciences, 6(2), 99-121.

Problems with the use of student test scores to evaluate teachers

There is also little or no evidence for the claim that teachers will be more motivated to improve student learning if teachers are evaluated or monetarily rewarded for student test score gains.

Baker, E. L., Barton, P. E., Darling-Hammond, L., Haertel, E., Ladd, H. F., Linn, R. L., ... & Shepard, L. A. (2010). Problems with the Use of Student Test Scores to Evaluate Teachers. EPI Briefing Paper# 278. Economic Policy Institute.

Mapping State Proficiency Standards Onto the NAEP Scales: Results From the 2015 NAEP Reading and Mathematics Assessments

Standardized tests play a critical role in tracking and comparing K-12 student progress across time, student demographics, and governing bodies (states, cities, districts). One methodology is to benchmark each state’s proficiency standards against those of the National Assessment of Educational Progress (NAEP) test. This study does just that. Using NAEP as a common yardstick allows a comparison of different state assessments. The results confirm the wide variation in proficiency standards across states. They also document that a significant majority of states have standards that are much lower than those established by the NAEP.

Bandeira de Mello, V., Rahman, T., and Park, B.J. (2018). Mapping State Proficiency Standards Onto NAEP Scales: Results From the 2015 NAEP Reading and Mathematics Assessments (NCES 2018-159). U.S. Department of Education, Washington, DC: Institute of Education Sciences, National Center for Education Statistics.

Meeting performance-based training demands: Accountability in an intervention-based practicum.

Describes the ways in which accountability methods were built into practicum experiences for specialist- and doctoral-level school psychology trainees at the University of Cincinnati.

Barnett, D. W., Daly III, E. J., Hampshire, E. M., Rovak Hines, N., Maples, K. A., Ostrom, J. K., & Van Buren, A. E. (1999). Meeting performance-based training demands: Accountability in an intervention-based practicum. School Psychology Quarterly, 14(4), 357.

High-stakes testing, uncertainty, and student learning

A brief history of high-stakes testing is followed by an analysis of eighteen states with severe consequences attached to their testing programs.

Beardsley, A., & Berliner, D. C. (2002). High-stakes testing, uncertainty, and student learning. Education Policy Analysis Archives, 10.

A follow-up of Follow Through: The later effects of the Direct Instruction model on children in fifth and sixth grades.

The later effects of the Direct Instruction Follow Through program were assessed at five diverse sites. Low-income fifth and sixth graders who had completed the full 3 years of this first- through third-grade program were tested on the Metropolitan Achievement Test (Intermediate level) and the Wide Range Achievement Test (WRAT).

Becker, W. C., & Gersten, R. (1982). A follow-up of Follow Through: The later effects of the Direct Instruction Model on children in fifth and sixth grades. American Educational Research Journal, 19(1), 75-92.

Predicting Success in College: The Importance of Placement Tests and High School Transcripts.

This paper uses student-level data from a statewide community college system to examine the validity of placement tests and high school information in predicting course grades and college performance.

Belfield, C. R., & Crosta, P. M. (2012). Predicting Success in College: The Importance of Placement Tests and High School Transcripts. CCRC Working Paper No. 42. Community College Research Center, Columbia University.

Analysis: Spring exams are the best shot state leaders have at knowing what’s happening with their students.

As 2021 begins, we can’t make assumptions about what students have learned this school year. Education leaders and teachers, of course, have interacted with students and watched them through computer screens for many months — but we won’t truly know what happened and where learning gaps exist without statewide exams.

Bell-Ellwanger, J. (2021, January 5). Analysis: Spring exams are the best shot state leaders have at knowing what’s happening with their students. The 74.

What Does the Research Say About Testing?

Since the passage of the No Child Left Behind Act (NCLB) in 2002 and its 2015 update, the Every Student Succeeds Act (ESSA), every third through eighth grader in U.S. public schools now takes tests calibrated to state standards, with the aggregate results made public. In a study of the nation’s largest urban school districts, students took an average of 112 standardized tests between pre-K and grade 12.

Berwick, C. (2019). What Does the Research Say About Testing? Marin County, CA: Edutopia.

Assessing The Value-Added Effects Of Literacy Collaborative Professional Development On Student Learning

This article reports on a 4-year longitudinal study of the effects of Literacy Collaborative (LC), a school-wide reform model that relies primarily on the one-on-one coaching of teachers as a lever for improving student literacy learning.

Biancarosa, G., Bryk, A. S., & Dexter, E. R. (2010). Assessing the value-added effects of literacy collaborative professional development on student learning. The Elementary School Journal, 111(1), 7-34.

Inside the black box: Raising standards through classroom assessment

Firm evidence shows that formative assessment is an essential component of classroom work and that its development can raise standards of achievement, Mr. Black and Mr. Wiliam point out. Indeed, they know of no other way of raising standards for which such a strong prima facie case can be made.

Black, P., & Wiliam, D. (2010). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappan, 92(1), 81-90.

Houston ties teachers’ pay to test scores.

Over the objection of the teachers' union, the Board of Education here on Thursday unanimously approved the nation's largest merit pay program, which calls for rewarding teachers based on how well their students perform on standardized tests.

Blumenthal, R. (2006). Houston ties teachers’ pay to test scores. New York Times, 13.

Who Leaves? Teacher Attrition and Student Achievement

The goal of this paper is to estimate the extent to which there is differential attrition based on teachers' value-added to student achievement.

Boyd, D., Grossman, P., Lankford, H., Loeb, S., & Wyckoff, J. (2008). Who leaves? Teacher attrition and student achievement. Working Paper No. 14022. Cambridge, MA: National Bureau of Economic Research. Retrieved from https://www.nber.org/papers/w14022

Explaining the short careers of high-achieving teachers in schools with low-performing students

This paper examines New York City elementary school teachers’ decisions to stay in the same school, transfer to another school in the district, transfer to another district, or leave teaching in New York state during the first five years of their careers.

Boyd, D., Lankford, H., Loeb, S., & Wyckoff, J. (2005). Explaining the short careers of high-achieving teachers in schools with low-performing students. American Economic Review, 95(2), 166-171.

The narrowing gap in New York City teacher qualifications and its implications for student achievement in high-poverty schools.

By estimating the effect of teacher attributes using a value-added model, the analyses in this paper predict that observable qualifications of teachers resulted in average improved achievement for students in the poorest decile of schools of .03 standard deviations.

Boyd, D., Lankford, H., Loeb, S., Rockoff, J., & Wyckoff, J. (2008). The narrowing gap in New York City teacher qualifications and its implications for student achievement in high‐poverty schools. Journal of Policy Analysis and Management: The Journal of the Association for Public Policy Analysis and Management, 27(4), 793-818.

Reconsidering the impact of high-stakes testing.

This article is an extended reanalysis of high-stakes testing on achievement. The paper focuses on the performance of states, over the period 1992 to 2000, on the NAEP mathematics assessments for grades 4 and 8.

Braun, H. (2004). Reconsidering the impact of high-stakes testing. Education Policy Analysis Archives, 12(1).

Educational Measurement

This fourth edition provides in-depth treatments of critical measurement topics, and the chapter authors are acknowledged experts in their respective fields.

Brennan, R. L. (Ed.) (2006). Educational measurement (4th ed.). Westport, CT: Praeger Publishers.

Improving schools by standardized tests

This book divides itself naturally into two parts. The first part has to do with the situation in which Superintendent Brooks found himself, with his successful campaign in educating his teachers to use standardized tests, with the results which he obtained, with the way he used these results to grade his pupils, to rate his teachers, and to evaluate methods of teaching, and finally with the use he made of intelligence tests.

Brooks, S. S. (1905). Improving schools by standardized tests. Houghton Mifflin.

National board certification and teacher effectiveness: Evidence from a random assignment experiment

The National Board for Professional Teaching Standards (NBPTS) assesses teaching practice based on videos and essays submitted by teachers. The authors compared the performance of classrooms of elementary students in Los Angeles randomly assigned to NBPTS applicants and to comparison teachers.

Cantrell, S., Fullerton, J., Kane, T. J., & Staiger, D. O. (2008). National board certification and teacher effectiveness: Evidence from a random assignment experiment (No. w14608). National Bureau of Economic Research.

International Test Score Comparisons and Educational Policy: A Review of the Critiques

This paper reviews the four main critiques that have been made of international tests, as well as the rationales and education policy analyses accompanying these critiques. The brief also discusses a set of four critiques around the underlying social meaning and educational policy value of international test comparisons. These comparisons indicate how students in various countries score on a particular test, but do they carry a larger meaning? The paper also offers recommendations based on these critiques.

Carnoy, M. (2015). International Test Score Comparisons and Educational Policy: A Review of the Critiques. National Education Policy Center.

Does external accountability affect student outcomes? A cross-state analysis.

This study developed a zero-to-five index of the strength of accountability in 50 states based on the use of high-stakes testing to sanction and reward schools, and analyzed whether that index is related to student gains on the NAEP mathematics test in 1996–2000.

Carnoy, M., & Loeb, S. (2002). Does external accountability affect student outcomes? A cross-state analysis. Educational Evaluation and Policy Analysis, 24(4), 305-331.

Seeking the Magic Metric: Using Evidence to Identify and Track School System Quality

This paper discusses the search for a “magic metric” in education: an index or number that would be generally accepted as the most efficient descriptor of a school’s performance in a district.

Celio, M. B. (2013). Seeking the Magic Metric: Using Evidence to Identify and Track School System Quality. In Performance Feedback: Using Data to Improve Educator Performance (Vol. 3, pp. 97-118). Oakland, CA: The Wing Institute.

Buried Treasure: Developing a Management Guide From Mountains of School Data

This report provides a practical “management guide” for an evidence-based key indicator data decision system for school districts and schools.

Celio, M. B., & Harvey, J. (2005). Buried Treasure: Developing A Management Guide From Mountains of School Data. Center on Reinventing Public Education.

Why annual statewide testing is critical to judging school quality

With Congress moving rapidly to revise the No Child Left Behind Act (NCLB), no issue has proven more contentious than whether the federal government should continue to require that states test all students in math and reading annually in grades three through eight.

Chingos, M. M., & West, M. R. (2015). Why Annual Statewide Testing Is Critical to Judging School Quality. Brookings Institution, Brown Center Chalkboard Series, January, 20.

The case for annual testing

The most recent incarnation of ESEA, signed into law in January of 2002 by President George W. Bush, is the No Child Left Behind Act (NCLB). We’re now 13 years into NCLB, so reauthorization is long overdue. It is not just the long delay that argues for congressional action, but the extent to which the Obama administration has replaced the provisions of the bill with its own set of priorities implemented through Race to the Top and state waivers.

Chingos, M. M., Dynarski, M., Whitehurst, G., & West, M. (2015, January 8). The case for annual testing. Brookings Institution.

Can teachers be evaluated by their students’ test scores? Should they be? The use of value-added measures for teacher effectiveness in policy and practice

In this report, the author aims to provide an accessible introduction to these new measures of teaching quality and put them into the broader context of concerns over school quality and achievement gaps.

Corcoran, S. P. (2010). Can Teachers Be Evaluated by Their Students' Test Scores? Should They Be? The Use of Value-Added Measures of Teacher Effectiveness in Policy and Practice. Education Policy for Action Series. Annenberg Institute for School Reform at Brown University (NJ1).

Teaching for the test: How and why test preparation is appropriate

One flashpoint in the incendiary debate over standardized testing in American public schools is the area of test preparation. The focus of this chapter is test preparation in achievement testing and its purportedly harmful effects on students and teachers.

Crocker, L. (2005). Teaching for the test: How and why test preparation is appropriate. Defending standardized testing, 159-174.

The proficiency illusion

The Proficiency Illusion reveals that the tests that states use to measure academic progress under the No Child Left Behind Act are creating a false impression of success, especially in reading and especially in the early grades.

Cronin, J., Dahlin, M., Adkins, D., & Kingsbury, G. G. (2007). The Proficiency Illusion. Thomas B. Fordham Institute.

Curriculum-based measurement and special education services: A fundamental and direct relationship.

Topics include special education as problem solving, the nature of mild handicaps, and person-centered versus situation-centered problems.

Deno, S. L. (1989). Curriculum-based measurement and special education services: A fundamental and direct relationship.

Identifying Valid Measures of Reading

Three concurrent validity studies were conducted to determine the relationship between performances on formative measures of reading and standardized achievement measures of reading.

Deno, S. L., Mirkin, P. K., & Chiang, B. (1982). Identifying valid measures of reading. Exceptional Children, 49(1), 36-47.

Program on education policy and governance, survey 2019.

On several issues, our analysis teases out nuances in public opinion by asking variations of questions to randomly selected segments of survey participants. We divided respondents at random into two or more segments and asked each group a different version of the same general question.

Education Next. (2019). Program on education policy and governance, survey 2019.

Implementation, Sustainability, and Scaling Up of Social-Emotional and Academic Innovations in Public Schools

Based on the experiences of the Collaborative for Academic, Social, and Emotional Learning (CASEL) and reviews of literature addressing implementation failures, observations about failures to "scale up" are presented.

Elias, M. J., Zins, J. E., Graczyk, P. A., & Weissberg, R. P. (2003). Implementation, sustainability, and scaling up of social-emotional and academic innovations in public schools. School Psychology Review, 32(3), 303-319.

The Utility of Curriculum-Based Measurement and Performance Assessment as Alternatives to Traditional Intelligence and Achievement Tests.

Curriculum-based measurement and performance assessments can provide valuable data for making special-education eligibility decisions. Reviews applied research on these assessment approaches and discusses the practical context of treatment validation and decisions about instructional services for students with diverse academic needs.

Elliott, S. N., & Fuchs, L. S. (1997). The Utility of Curriculum-Based Measurement and Performance Assessment as Alternatives to Traditional Intelligence and Achievement Tests. School Psychology Review, 26(2), 224-33.