Treatment Integrity Overview

View this Overview as PDF

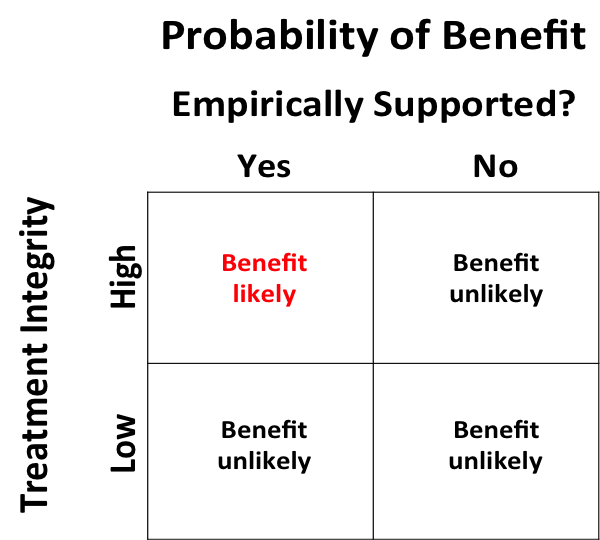

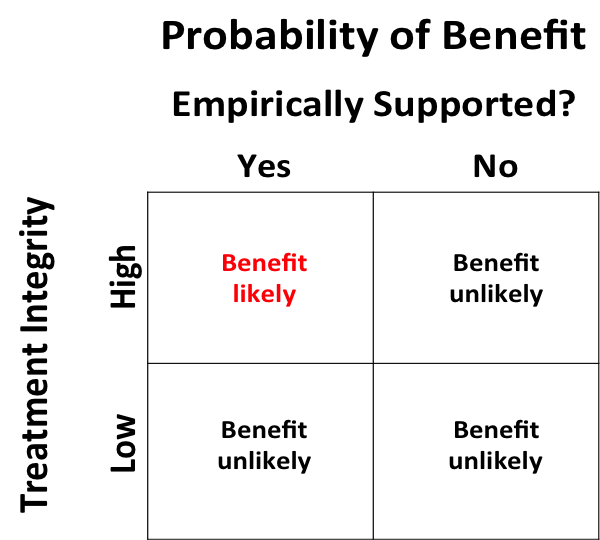

For the best chance of producing positive educational outcomes for all children, two conditions must be met: (a) adopting effective, empirically supported (evidence-based) practices and (b) implementing those practices with sufficient quality that they make a difference (treatment integrity) (Detrich, 2014). Both conditions are necessary because neither on its own is sufficient to produce positive outcomes. Figure 1 illustrates the relationship between empirically supported practices and treatment integrity.

Figure 1. Relationship between empirically supported practices and treatment integrity

If an intervention has strong empirical support and is implemented with high integrity, then there is a high probability that positive outcomes will be achieved (upper left quadrant). The other quadrants show that the lack of either element reduces the probability of positive outcomes: a well-supported, research-based intervention that is not implemented with high integrity (lower left quadrant); an intervention that is implemented with high integrity but is not empirically supported (upper right quadrant); and a nonempirically supported intervention that is implemented poorly (lower right quadrant).

It should be noted that some interventions are not empirically supported because sufficient research has demonstrated that they do not produce positive outcomes. Other interventions are not empirically supported because they have not yet been experimentally evaluated. This latter class of interventions is still in the experimental phase of development. Using these interventions is tantamount to conducting research, and all of the rules for engaging in research should be followed. Because these interventions are still experimental, their effectiveness is unknown, and they should be implemented only with the fully informed consent of both educators and parents.

The advent of the evidence-based practice movement in education has resulted in considerable effort to identify practices that are empirically supported. Organizations such as the What Works Clearinghouse (https://ies.ed.gov/ncee/wwc) and the Best Evidence Encyclopedia (http://www.bestevidence.org) have reviewed a large number of interventions to determine the research base for each one. Treatment integrity received little scholarly attention until the past 20 years. Even with this increased attention, treatment integrity measures are reported in only about half of published intervention research reports (Sanetti, Dobey, & Gritter, 2012; Sanetti, Gritter, & Dobey, 2011). When treatment integrity data are not published in research reports, it is difficult to know whether the intervention the researchers reported was actually implemented and responsible for the outcomes, or whether some undocumented variation of the intervention accounted for the outcomes.

As important as treatment integrity is in research, it is equally important in practice settings. Measures of treatment integrity are fundamental to data-based decision making. Effective interventions may be prematurely terminated if the level of treatment integrity is not known. Student performance data tell us only how well a student is responding to the intervention as it is implemented. Treatment integrity measures tell us how well the intervention is being implemented. Without knowing about treatment integrity, it is not possible to know if a student’s failure to benefit from an intervention is a function of an ineffective intervention or ineffective implementation (see Treatment Integrity in the Problem-Solving Process for more discussion).

References

Detrich, R. (2014). Treatment integrity: Fundamental to education reform. Journal of Cognitive Education and Psychology, 13(2), 258–271.

Sanetti, L. M. H., Dobey, L. M., & Gritter, K. L. (2012). Treatment integrity of interventions with children in the Journal of Positive Behavior Interventions from 1999 to 2009. Journal of Positive Behavior Interventions, 14(1), 29–46.

Sanetti, L. M. H., Gritter, K. L., & Dobey, L. M. (2011). Treatment integrity of interventions with children in the school psychology literature from 1995 to 2008. School Psychology Review, 40(1), 72–84.


Publications


Treatment Integrity in the Problem-Solving Process Overview

Treatment integrity is a core component of data-based decision making (Detrich, 2013). The usual approach is to consider student data when making decisions about an intervention; however, if there are no data about how well the intervention was implemented, then meaningful judgments cannot be made about effectiveness.

Treatment Integrity: Fundamental to Education Reform

To produce better outcomes for students, two things are necessary: (1) effective, scientifically supported interventions and (2) implementation of those interventions with high integrity. Typically, much greater attention has been given to identifying effective practices. This review focuses on the features of high-quality implementation.

Detrich, R. (2014). Treatment integrity: Fundamental to education reform. Journal of Cognitive Education and Psychology, 13(2), 258-271.

Innovation, Implementation Science, and Data-Based Decision Making: Components of Successful Reform

Schools are often expected to implement innovative instructional programs. These initiatives most often fail because what we know from implementation science is not considered when the initiative is implemented. This chapter reviews the contributions implementation science can make to improving outcomes for students.

Detrich, R. Innovation, Implementation Science, and Data-Based Decision Making: Components of Successful Reform. Handbook on Innovations in Learning, 31.

Treatment Integrity: A Fundamental Unit of Sustainable Educational Programs.

Reform efforts tend to come and go very quickly in education. This paper makes the argument that the sustainability of programs is closely related to how well those programs are implemented.

Detrich, R., Keyworth, R. & States, J. (2010). Treatment Integrity: A Fundamental Unit of Sustainable Educational Programs. Journal of Evidence-Based Practices for Schools, 11(1), 4-29.

Approaches to Increasing Treatment Integrity

Strategies designed to increase treatment integrity fall into two categories: antecedent-based strategies and consequence-based strategies.

Detrich, R., States, J. & Keyworth, R. (2017). Approaches to Increasing Treatment Integrity. Oakland, CA: The Wing Institute.

Dimensions of Treatment Integrity Overview

Historically, treatment integrity has been defined as implementation of an intervention as planned (Gresham, 1989). More recently, treatment integrity has been reconceptualized as multidimensional (Dane & Schneider, 1998). This conceptualization includes four dimensions relevant to practice: (a) exposure (dosage), (b) adherence, (c) quality of delivery, and (d) student responsiveness. These dimensions do not stand alone; they interact to affect the ultimate effectiveness of an intervention. For this reason, educators should assess all dimensions of treatment integrity to ensure that an intervention is being implemented as intended.

Detrich, R., States, J. & Keyworth, R. (2017). Dimensions of Treatment Integrity Overview. Oakland, CA: The Wing Institute.

Treatment Integrity in the Problem Solving Process

The usual approach to determining whether an intervention is effective for a student is to review student outcome data; however, this is only part of the task. Student data can only be understood if we know something about how well the intervention was implemented. Student data without treatment integrity data are largely meaningless because, without knowing how well an intervention has been implemented, no judgments can be made about the effectiveness of the intervention. Poor outcomes can be a function of an ineffective intervention or of poor implementation of the intervention. Without treatment integrity data, there is a risk that an intervention will be judged ineffective when, in fact, the quality of implementation was so inadequate that it would be unreasonable to expect positive outcomes.

Detrich, R., States, J. & Keyworth, R. (2017). Treatment Integrity in the Problem Solving Process. Oakland, CA: The Wing Institute.

Overview of Treatment Integrity

For the best chance of a positive impact on educational outcomes, two conditions must be met: (a) effective interventions must be adopted, and (b) those interventions must be implemented with sufficient quality (treatment integrity) to ensure benefit. To date, education has emphasized identifying effective interventions and has shown less concern for how those interventions are implemented. The research on the implementation of interventions is not encouraging. Treatment integrity scores are often very low, and in practice implementation is rarely assessed. If an intervention with a strong research base is not implemented with a high level of treatment integrity, then the students do not actually experience the intervention, and there is no reason to assume they will benefit from it. Under these circumstances, it is not possible to know whether poor outcomes are the result of an ineffective intervention or of poor implementation of that intervention. Historically, treatment integrity has been defined as implementing an intervention as prescribed. More recently, it has been conceptualized as having multiple dimensions, among them dosage and adherence, each of which must be measured to ensure that the intervention is being delivered at adequate levels.

Detrich, R., States, J., & Keyworth, R. (2017). Overview of Treatment Integrity. Oakland, CA: The Wing Institute.

Sustainability of evidence-based programs in education

This paper discusses common elements of successfully sustaining effective practices across a variety of disciplines.

Fixsen, D. L., Blase, K. A., Duda, M., Naoom, S. F., & Van Dyke, M. (2010). Sustainability of evidence-based programs in education. Journal of Evidence-Based Practices for Schools, 11(1), 30-46.

Roles and responsibilities of researchers and practitioners for translating research to practice

This paper outlines the best practices for researchers and practitioners translating research to practice as well as recommendations for improving the process.

Shriver, M. D. (2007). Roles and responsibilities of researchers and practitioners for translating research to practice. Journal of Evidence-Based Practices for Schools, 8(1), 1-30.

Why Education Practices Fail

This paper examines a range of education failures: common mistakes in how new practices are selected, implemented, and monitored. The goal is not a comprehensive listing of all education failures but rather to provide education stakeholders with an understanding of the importance of vigilance when implementing new practices.

States, J., & Keyworth, R. (2020). Why Practices Fail. Oakland, CA: The Wing Institute. https://www.winginstitute.org/roadmap-overview

Treatment Integrity Strategies Overview

Student achievement scores in the United States remain stagnant despite repeated attempts to reform the education system. New initiatives promising hope arise, only to disappoint after being adopted, implemented, and quickly found wanting. The cycle of reform followed by failure has had a demoralizing effect on schools, making new reform efforts problematic. These efforts frequently fail because implementing new practices is far more challenging than expected and requires greater attention to how initiatives are implemented. Treatment integrity is increasingly recognized as an essential component of effective implementation in an evidence-based education model that produces results, and inattention to treatment integrity is seen as a primary reason new initiatives fail. The question remains: What strategies can educators employ to increase the likelihood that practices are implemented as designed? The Wing Institute overview on Treatment Integrity Strategies examines the practice elements indispensable for maximizing treatment integrity.

States, J., Detrich, R. & Keyworth, R. (2017). Overview of Treatment Integrity Strategies. Oakland, CA: The Wing Institute. http://www.winginstitute.org/effective-instruction-treatment-integrity-strategies.

Data Mining


How does performance feedback affect the way teachers carry out interventions?

This analysis examined the impact of performance feedback on the quality of implementation of interventions.

Detrich, R. (2015). How does performance feedback affect the way teachers carry out interventions? Retrieved from how-does-performance-feedback.

How long do reform initiatives last in public schools?

This analysis examined the sustainability of education reforms.

Detrich, R. (2015). How long do reform initiatives last in public schools? Retrieved from how-long-do-reform.

How often are treatment integrity measures reported in published research?

This analysis examined how frequently treatment integrity is reported in studies of research-based interventions.

Detrich, R. (2015). How often are treatment integrity measures reported in published research? Retrieved from how-often-are-treatment.

Presentations


Roles and Responsibilities of Researchers and Practitioners Translating Research to Practice

This paper outlines the best practices for researchers and practitioners translating research to practice as well as recommendations for improving the process.

Shriver, M. (2006). Roles and Responsibilities of Researchers and Practitioners Translating Research to Practice [PowerPoint Slides]. Retrieved from 2006-wing-presentation-mark-shriver.

The Role of Data-based Decision Making

This paper identifies the critical features required for developing and maintaining a systemic data-based decision making model aligned at all levels, beginning with an individual student in the classroom and culminating with policy makers.

States, J. (2009). The Role of Data-based Decision Making [PowerPoint Slides]. Retrieved from 2009-campbell-presentation-jack-states.

The Role of Data-based Decision Making

This presentation examines the impact of data-based decision making in schools. It looks at the critical role data plays in building an evidence-based model for education that relies on monitoring for the reliable implementation of practices.

States, J. (2010). The Role of Data-based Decision Making [PowerPoint Slides]. Retrieved from 2010-hice-presentation-jack-states.

What the Data Tell Us

This paper offers an overview of issues practitioners must consider in selecting practices. Types of evidence, sources of evidence, and the role of professional judgment are discussed as cornerstones of effective evidence-based decision-making.

States, J. (2010). What the Data Tell Us [PowerPoint Slides]. Retrieved from 2010-capses-presentation-jack-states.

Evolution of the Revolution: How Can Evidence-based Practice Work in the Real World?

This paper provides an overview of the considerations when introducing evidence-based services into established mental health systems.

Chorpita, B. (2008). Evolution of the Revolution: How Can Evidence-based Practice Work in the Real World? [PowerPoint Slides]. Retrieved from 2008-wing-presentation-bruce-chorpita.

Treatment Integrity and Program Fidelity: Necessary but Not Sufficient to Sustain Programs

If programs are to be sustained, they must be implemented with integrity. If implementation drifts over time, it raises questions about whether the program is being sustained or has been substantially changed.

Detrich, R. (2008). Treatment Integrity and Program Fidelity: Necessary but Not Sufficient to Sustain Programs [PowerPoint Slides]. Retrieved from 2008-aba-presentation-ronnie-detrich.

The Four Assumptions of the Apocalypse

This paper examines the four basic assumptions for effective data-based decision making in education and offers strategies for addressing problem areas.

Detrich, R. (2009). The Four Assumptions of the Apocalypse [PowerPoint Slides]. Retrieved from 2009-wing-presentation-ronnie-detrich.

Toward a Technology of Treatment Integrity

If research-supported interventions are to be effective, they must be implemented with integrity. This paper describes approaches to ensuring high levels of treatment integrity.

Detrich, R. (2011). Toward a Technology of Treatment Integrity [PowerPoint Slides]. Retrieved from 2011-apbs-presentation-ronnie-detrich.

Treatment Integrity: Necessary but Not Sufficient for Improving Outcomes

Treatment integrity is necessary to improve outcomes but it is not sufficient. It is also necessary to implement scientifically supported interventions.

Detrich, R. (2015). Treatment Integrity: Necessary but Not Sufficient for Improving Outcomes [PowerPoint Slides]. Retrieved from 2015-ebpindisabilities-txint-presentation-ronnie-detrich.

Student Research


Effects of a problem solving team intervention on the problem-solving process: Improving concept knowledge, implementation integrity, and student outcomes.

This study evaluated the effects of a problem solving intervention package that included problem-solving information, performance feedback, and coaching in a student intervention planning protocol.

Vaccarello, C. A. (2011). Effects of a problem solving team intervention on the problem-solving process: Improving concept knowledge, implementation integrity, and student outcomes. Retrieved from student-research-2011.

Transporting an evidence-based school engagement intervention to practice: Outcomes and barriers to implementation.

Check and Connect is an intervention designed to increase student engagement in school. This study was a transportability study that evaluated the impact of Check and Connect when implemented by school personnel rather than researchers.

Pankow, C. (2009). Transporting an evidence-based school engagement intervention to practice: Outcomes and barriers to implementation. Retrieved from student-research-2009-a.


Introduction: Proceedings from the Wing Institute’s Sixth Annual Summit on Evidence-Based Education: Performance Feedback: Using Data to Improve Educator Performance.

This book is compiled from the proceedings of the sixth summit entitled “Performance Feedback: Using Data to Improve Educator Performance.” The 2011 summit topic was selected to help answer the following question: What basic practice has the potential for the greatest impact on changing the behavior of students, teachers, and school administrative personnel?

States, J., Keyworth, R. & Detrich, R. (2013). Introduction: Proceedings from the Wing Institute’s Sixth Annual Summit on Evidence-Based Education: Performance Feedback: Using Data to Improve Educator Performance. In Education at the Crossroads: The State of Teacher Preparation (Vol. 3, pp. ix-xii). Oakland, CA: The Wing Institute.

Increasing Pre-service Teachers' Use of Differential Reinforcement: Effects of Performance Feedback on Consequences for Student Behavior.

This study evaluated the effects of performance feedback to increase the implementation of skills taught during in-service training.

Auld, R. G., Belfiore, P. J., & Scheeler, M. C. (2010). Increasing Pre-service Teachers’ Use of Differential Reinforcement: Effects of Performance Feedback on Consequences for Student Behavior. Journal of Behavioral Education, 19(2), 169-183.

Increasing pre-service teachers’ use of differential reinforcement: Effects of performance feedback on consequences for student behavior

Significant dollars are spent each school year on professional development programs to improve teachers’ effectiveness. This study assessed the integrity with which pre-service teachers used a differential reinforcement of alternate behavior (DRA) strategy taught to them during their student teaching experience.

Auld, R. G., Belfiore, P. J., & Scheeler, M. C. (2010). Increasing pre-service teachers’ use of differential reinforcement: Effects of performance feedback on consequences for student behavior. Journal of Behavioral Education, 19(2), 169-183.

The Use of E-Mail to Deliver Performance-Based Feedback to Early Childhood Practitioners.

This paper evaluates the effects of performance feedback as part of professional development across three studies.

Barton, E. E., Pribble, L., & Chen, C.-I. (2013). The Use of E-Mail to Deliver Performance-Based Feedback to Early Childhood Practitioners. Journal of Early Intervention, 35(3), 270-297.

Putting the pieces together: An Integrated Model of program implementation

One of the primary goals of implementation science is to ensure that programs are implemented with integrity. This paper presents an integrated model of implementation that emphasizes treatment integrity.

Berkel, C., Mauricio, A. M., Schoenfelder, E., & Sandler, I. N. (2011). Putting the pieces together: An Integrated Model of program implementation. Prevention Science, 12, 23-33.

A review of published studies involving parametric manipulations of treatment integrity

We reviewed parametric analyses of treatment integrity levels published in 10 behavior analytic journals through 2017. We discuss the general findings from the identified literature as well as directions for future research.

Brand, D., Henley, A. J., Reed, F. D. D., Gray, E., & Crabbs, B. (2019). A review of published studies involving parametric manipulations of treatment integrity. Journal of Behavioral Education, 28(1), 1-26.

Examining how treatment fidelity is supported, measured, and reported in K–3 reading intervention research

Treatment fidelity data (descriptive and statistical) are critical to interpreting and generalizing outcomes of intervention research. Despite recommendations for treatment fidelity reporting from funding agencies and researchers, past syntheses have found treatment fidelity is frequently unreported in educational interventions and fidelity data are seldom used to analyze its relation to student outcomes.

Capin, P., Walker, M. A., Vaughn, S., & Wanzek, J. (2018). Examining how treatment fidelity is supported, measured, and reported in K–3 reading intervention research. Educational Psychology Review, 30(3), 885-919.

Graphical Feedback to Increase Teachers' Use of Incidental Teaching.

Incidental teaching is often a component of early childhood intervention programs. This study evaluated the use of graphical feedback to increase the use of incidental teaching.

Casey, A. M., & McWilliam, R. A. (2008). Graphical Feedback to Increase Teachers’ Use of Incidental Teaching. Journal of Early Intervention, 30(3), 251-268.

The impact of checklist-based training on teachers' use of the zone defense schedule.

One of the challenges for increasing treatment integrity is finding effective methods for doing so. This study evaluated the use of checklist-based training to increase treatment integrity.

Casey, A. M., & McWilliam, R. A. (2011). The impact of checklist-based training on teachers’ use of the zone defense schedule. Journal of Applied Behavior Analysis, 44(2), 397-401.

Using Performance Feedback To Decrease Classroom Transition Time And Examine Collateral Effects On Academic Engagement.

This study evaluated the impact of performance feedback on how well problem-solving teams implemented a structured decision-making protocol. Teams performed better when feedback was provided.

Codding, R. S., & Smyth, C. A. (2008). Using Performance Feedback To Decrease Classroom Transition Time And Examine Collateral Effects On Academic Engagement. Journal of Educational & Psychological Consultation, 18(4), 325-345.

Effects of Immediate Performance Feedback on Implementation of Behavior Support Plans.

This study investigated the effects of performance feedback to increase treatment integrity.

Codding, R. S., Feinberg, A. B., & Dunn, E. K. (2005). Effects of Immediate Performance Feedback on Implementation of Behavior Support Plans. Journal of Applied Behavior Analysis, 38(2), 205-219.

Using Performance Feedback to Improve Treatment Integrity of Classwide Behavior Plans: An Investigation of Observer Reactivity

This study evaluated the effects of performance feedback in increasing treatment integrity. It also evaluated the possible reactivity effects of being observed.

Codding, R. S., Livanis, A., Pace, G. M., & Vaca, L. (2008). Using Performance Feedback to Improve Treatment Integrity of Classwide Behavior Plans: An Investigation of Observer Reactivity. Journal of Applied Behavior Analysis, 41(3), 417-422.

Assessment of consultation and intervention implementation: A review of conjoint behavioral consultation studies

Reviews of treatment outcome literature indicate treatment integrity is not regularly assessed. In consultation, two levels of treatment integrity (i.e., consultant procedural integrity [CPI] and intervention treatment integrity [ITI]) provide relevant implementation data.

Collier-Meek, M. A., & Sanetti, L. M. (2014). Assessment of consultation and intervention implementation: A review of conjoint behavioral consultation studies. Journal of Educational and Psychological Consultation, 24(1), 55-73.

How are treatment integrity data assessed? Reviewing the performance feedback literature.

Collecting treatment integrity data is critical for (a) strengthening internal validity within a research study, (b) determining the impact of an intervention on student outcomes, and (c) assessing the need for implementation supports. Although researchers have noted the increased inclusion of treatment integrity data in published articles, there has been limited attention to how treatment integrity is assessed.

Collier-Meek, M. A., Fallon, L. M., & Gould, K. (2018). How are treatment integrity data assessed? Reviewing the performance feedback literature. School Psychology Quarterly, 33(4), 517.

Barriers to Implementing Classroom Management and Behavior Support Plans: An Exploratory Investigation.

This study examines obstacles encountered by 33 educators along with suggested interventions to overcome impediments to effective delivery of classroom management interventions or behavior support plans. Having the right classroom management plan isn’t enough if you can’t deliver the strategies to the students in the classroom.

Collier‐Meek, M. A., Sanetti, L. M., & Boyle, A. M. (2019). Barriers to implementing classroom management and behavior support plans: An exploratory investigation. Psychology in the Schools, 56(1), 5-17.

Elaborated corrective feedback and the acquisition of reasoning skills: A study of computer-assisted instruction

The study compared basic and elaborated corrections within the context of otherwise identical computer-assisted instruction (CAI) programs that taught reasoning skills. Twelve learning disabled and 16 remedial high school students were randomly assigned to either the basic-corrections or elaborated-corrections treatment. Criterion-referenced test scores were significantly higher for the elaborated-corrections treatment on both the post and maintenance tests and on the transfer test. Time to complete the program did not differ significantly for the two groups.

Collins, M., Carnine, D., & Gersten, R. (1987). Elaborated corrective feedback and the acquisition of reasoning skills: A study of computer-assisted instruction. Exceptional Children, 54(3), 254-262.

The effects of video modeling on staff implementation of a problem-solving intervention with adults with developmental disabilities.

This study evaluated the effects of video modeling on staff implementation of a problem-solving intervention.

Collins, S., Higbee, T. S., & Salzberg, C. L. (2009). The effects of video modeling on staff implementation of a problem-solving intervention with adults with developmental disabilities. Journal of Applied Behavior Analysis, 42(4), 849-854.

Program integrity in primary and early secondary prevention: are implementation effects out of control?

Dane and Schneider propose treatment integrity as a multi-dimensional construct and describe five dimensions that constitute the construct.

Dane, A. V., & Schneider, B. H. (1998). Program integrity in primary and early secondary prevention: are implementation effects out of control? Clinical Psychology Review, 18(1), 23-45.

Program integrity in primary and early secondary prevention: are implementation effects out of control?

The authors examined the extent to which program integrity (i.e., the degree to which programs were implemented as planned) was verified and promoted in evaluations of primary and early secondary prevention programs published between 1980 and 1994.

Dane, A. V., & Schneider, B. H. (1998). Program integrity in primary and early secondary prevention: are implementation effects out of control? Clinical Psychology Review, 18(1), 23-45.

Test Driving Interventions to Increase Treatment Integrity and Student Outcomes.

This study evaluated the effects of allowing teachers to “test drive” interventions and then select the intervention they most preferred. The result was an increase in treatment integrity.

Dart, E. H., Cook, C. R., Collins, T. A., Gresham, F. M., & Chenier, J. S. (2012). Test Driving Interventions to Increase Treatment Integrity and Student Outcomes. School Psychology Review, 41(4), 467-481.

Examining dimensions of treatment intensity and treatment fidelity in mathematics intervention research for students at risk

To prevent academic failure and promote long-term success, response-to-intervention (RtI) is designed to systematically increase the intensity of delivering research-based interventions. Interventions within an RtI framework must not only be effective but also be implemented with treatment fidelity and delivered with the appropriate level of treatment intensity to improve student mathematics achievement.

DeFouw, E. R., Codding, R. S., Collier-Meek, M. A., & Gould, K. M. (2019). Examining dimensions of treatment intensity and treatment fidelity in mathematics intervention research for students at risk. Remedial and Special Education, 40(5), 298-312.

Increasing treatment fidelity by matching interventions to contextual variables within the educational setting

The impact of an intervention is influenced by how well it fits into the context of a classroom. This paper suggests a number of variables to consider and how they might be measured prior to the development of an intervention.

Detrich, R. (1999). Increasing treatment fidelity by matching interventions to contextual variables within the educational setting. School Psychology Review, 28(4), 608-620.

Treatment integrity: A wicked problem and some solutions

This Wing Institute presentation has four goals: make the case that treatment integrity monitoring is a necessary part of service delivery; describe the dimensions of treatment integrity; suggest methods for increasing treatment integrity; and place treatment integrity within a systems framework.

Detrich, R. (2015). Treatment integrity: A wicked problem and some solutions. Missouri Association for Behavior Analysis 2015 Conference. http://winginstitute.org/2015-MissouriABA-Presentation-Ronnie-Detrich

Innovation, Implementation Science, and Data-Based Decision Making: Components of Successful Reform

Over the last fifty years, there have been many educational reform efforts, most of which have had a relatively short lifespan and failed to produce the promised results. One possible reason is that, for the most part, these innovations have been poorly implemented. In this chapter, the author proposes a data-based decision-making approach to assuring high-quality implementation.

Detrich, R. Innovation, Implementation Science, and Data-Based Decision Making: Components of Successful Reform. In M. Murphy, S. Redding, & J. Twyman (Eds.), Handbook on Innovations in Learning, 31. Charlotte, NC: Information Age Publishing.

Treatment Integrity: A Fundamental Unit of Sustainable Educational Programs.

Reform efforts tend to come and go very quickly in education. This paper makes the argument that the sustainability of programs is closely related to how well those programs are implemented.

Detrich, R., Keyworth, R. & States, J. (2010). Treatment Integrity: A Fundamental Unit of Sustainable Educational Programs. Journal of Evidence-Based Practices for Schools, 11(1), 4-29.

Dimensions of Treatment Integrity Overview

In this conceptualization of treatment integrity, there are four dimensions relevant to practice: (a) exposure (dosage), (b) adherence, (c) quality of delivery, and (d) student responsiveness. It is important to understand that these dimensions do not stand alone but rather interact to impact the ultimate effectiveness of an intervention.

Detrich, R., States, J. & Keyworth, R. (2017). Dimensions of Treatment Integrity Overview. Oakland, CA: The Wing Institute.

Treatment Integrity in the Problem Solving Process

The usual approach to determining if an intervention is effective for a student is to review student outcome data; however, this is only part of the task. Student data can only be understood if we know something about how well the intervention was implemented. Student data without treatment integrity data are largely meaningless because without knowing how well an intervention has been implemented, no judgments can be made about the effectiveness of the intervention. Poor outcomes can be a function of an ineffective intervention or poor implementation of the intervention. Without treatment integrity data, there is a risk that an intervention will be judged as ineffective when, in fact, the quality of implementation was so inadequate that it would be unreasonable to expect positive outcomes.

Detrich, R., States, J. & Keyworth, R. (2017). Treatment Integrity in the Problem Solving Process. Oakland, CA: The Wing Institute.

Overview of Treatment Integrity

For the best chance of producing positive educational outcomes for all children, two conditions must be met: (a) adopting effective empirically supported (evidence-based) practices and (b) implementing those practices with sufficient quality that they make a difference (treatment integrity).

Detrich, R., States, J., & Keyworth, R. (2017). Overview of Treatment Integrity. Oakland, CA: The Wing Institute.

A comparison of performance feedback procedures on teachers' treatment implementation integrity and students' inappropriate behavior in special education classrooms.

This study compared the effects of goal setting about student performance and feedback about student performance with daily written feedback about student performance, feedback about accuracy of implementation, and cancelling meetings if the integrity criterion was met.

DiGennaro, F. D., Martens, B. K., & Kleinmann, A. E. (2007). A comparison of performance feedback procedures on teachers' treatment implementation integrity and students' inappropriate behavior in special education classrooms. Journal of Applied Behavior Analysis, 40(3), 447-461.

Increasing Treatment Integrity Through Negative Reinforcement: Effects on Teacher and Student Behavior

This study evaluated the impact of allowing teachers to miss coaching meetings if their treatment integrity scores met or exceeded criterion.

DiGennaro, F. D., Martens, B. K., & McIntyre, L. L. (2005). Increasing Treatment Integrity Through Negative Reinforcement: Effects on Teacher and Student Behavior. School Psychology Review, 34(2), 220-231.

Effects of video modeling on treatment integrity of behavioral interventions.

This study evaluated the effects of video modeling on how well teachers implemented interventions. Integrity increased but remained variable. More stable patterns of implementation were observed when teachers were given feedback about their performance.

Digennaro-Reed, F. D., Codding, R., Catania, C. N., & Maguire, H. (2010). Effects of video modeling on treatment integrity of behavioral interventions. Journal of Applied Behavior Analysis, 43(2), 291-295.

Effects of public feedback during RTI team meetings on teacher implementation integrity and student academic performance.

This study evaluated the impact of public feedback in RtI team meetings on the quality of implementation. Feedback improved poor implementation and maintained high levels of implementation.

Duhon, G. J., Mesmer, E. M., Gregerson, L., & Witt, J. C. (2009). Effects of public feedback during RTI team meetings on teacher implementation integrity and student academic performance. Journal of School Psychology, 47(1), 19-37.

Implementation Matters: A Review of Research on the Influence of Implementation on Program Outcomes and the Factors Affecting Implementation

The first purpose of this review is to assess the impact of implementation on program outcomes, and the second purpose is to identify factors affecting the implementation process.

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3-4), 327-350.

An Exploration of Teacher Acceptability of Treatment Plan Implementation: Monitoring and Feedback Methods.

This paper summarizes survey results about the acceptability of different methods for monitoring treatment integrity and performance feedback.

Easton, J. E., & Erchul, W. P. (2011). An Exploration of Teacher Acceptability of Treatment Plan Implementation: Monitoring and Feedback Methods. Journal of Educational & Psychological Consultation, 21(1), 56-77. Retrieved from http://www.tandfonline.com/doi/abs/10.1080/10474412.2011.544949?journalCode=hepc20.

Innovative methodology in ecological consultation: Use of scripts to promote treatment acceptability and integrity.

Presents 4 case studies demonstrating an innovative approach for studying and promoting treatment integrity in a manner acceptable to consultees and related to treatment success.

Ehrhardt, K. E., Barnett, D. W., Lentz Jr, F. E., Stollar, S. A., & Reifin, L. H. (1996). Innovative methodology in ecological consultation: Use of scripts to promote treatment acceptability and integrity. School Psychology Quarterly, 11(2), 149.

Implementation of evidence-based treatments for children and adolescents: Research findings and their implications for the future.

A growing number of evidence-based psychotherapies hold the promise of substantial benefits for children, families, and society. For the benefits of evidence-based programs to be realized on a scale sufficient to be useful to individuals and society, evidence-based psychotherapies need to be put into practice outside of controlled clinical trials.

Fixsen, D. L., Blase, K. A., Duda, M. A., Naoom, S. F., & Van Dyke, M. (2010). Implementation of evidence-based treatments for children and adolescents: Research findings and their implications for the future.

Sustainability of evidence-based programs in education

This paper discusses common elements of successfully sustaining effective practices across a variety of disciplines.

Fixsen, D. L., Blase, K. A., Duda, M., Naoom, S. F., & Van Dyke, M. (2010). Sustainability of evidence-based programs in education. Journal of Evidence-Based Practices for Schools, 11(1), 30-46.

Implementation Research: A Synthesis of the Literature

This is a comprehensive literature review of implementation, examining all stages beginning with adoption and ending with sustainability.

Fixsen, D. L., Naoom, S. F., Blase, K. A., & Friedman, R. M. (2005). Implementation research: A synthesis of the literature.

Prereferral interventions: Quality indices and outcomes

Quality indicators of prereferral interventions (i.e., behavioral definition, direct measure, step-by-step plan, treatment integrity, graphing of results, and direct comparison to baseline) were investigated as predictors of prereferral intervention outcomes with a sample of regular education teachers and related services personnel working with the same 312 students.

Flugum, K. R., & Reschly, D. J. (1994). Prereferral interventions: Quality indices and outcomes. Journal of School Psychology, 32(1), 1-14.

Sustaining fidelity following the nationwide PMTO implementation in Norway

This paper describes the scaling up and dissemination of a parent training program in Norway while maintaining fidelity of implementation.

Forgatch, M. S., & DeGarmo, D. S. (2011). Sustaining fidelity following the nationwide PMTO implementation in Norway. Prevention Science, 12(3), 235-246. Retrieved from http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3153633

Establishing Treatment Fidelity in Evidence-Based Parent Training Programs for Externalizing Disorders in Children and Adolescents.

This review evaluated methods for improving treatment integrity based on the National Institutes of Health Treatment Fidelity Workgroup. Strategies related to treatment design produced the highest levels of treatment integrity. Training and enactment of treatment skills resulted in the lowest level of treatment integrity across the 65 reviewed studies.

Garbacz, L., Brown, D., Spee, G., Polo, A., & Budd, K. (2014). Establishing Treatment Fidelity in Evidence-Based Parent Training Programs for Externalizing Disorders in Children and Adolescents. Clinical Child & Family Psychology Review, 17(3).

The impact of two professional development interventions on early reading instruction and achievement

To help states and districts make informed decisions about the PD they implement to improve reading instruction, the U.S. Department of Education commissioned the Early Reading PD Interventions Study to examine the impact of two research-based PD interventions for reading instruction: (1) a content-focused teacher institute series that began in the summer and continued through much of the school year (treatment A) and (2) the same institute series plus in-school coaching (treatment B).

Garet, M. S., Cronen, S., Eaton, M., Kurki, A., Ludwig, M., Jones, W., ... Zhu, P. (2008). The impact of two professional development interventions on early reading instruction and achievement. NCEE 2008-4030. Washington, DC: National Center for Education Evaluation and Regional Assistance.

Supporting teacher use of interventions: effects of response dependent performance feedback on teacher implementation of a math intervention

This study examined general education teachers’ implementation of a peer tutoring intervention for five elementary students referred for consultation and intervention due to academic concerns. Treatment integrity was assessed via permanent products produced by the intervention.

Gilbertson, D., Witt, J. C., Singletary, L. L., & VanDerHeyden, A. (2007). Supporting teacher use of interventions: Effects of response dependent performance feedback on teacher implementation of a math intervention. Journal of Behavioral Education, 16(4), 311-326.

Strategies for Improving Treatment Integrity in Organizational Consultation

Organizations house many individuals, many of whom are responsible for implementing the same practice. If organizations are to meet their goals, they must have systems for assuring high levels of treatment integrity.

Gottfredson, D. C. (1993). Strategies for Improving Treatment Integrity in Organizational Consultation. Journal of Educational & Psychological Consultation, 4(3), 275.

A systematic review of treatment integrity assessment from 2004 to 2014: Examining behavioral interventions for students with autism spectrum disorder.

Many students with autism spectrum disorder (ASD) receive behavioral interventions to improve academic and prosocial functioning and remediate current skill deficits. Sufficient treatment integrity is necessary for these interventions to be successful.

Gould, K. M., Collier-Meek, M., DeFouw, E. R., Silva, M., & Kleinert, W. (2019). A systematic review of treatment integrity assessment from 2004 to 2014: Examining behavioral interventions for students with autism spectrum disorder. Contemporary School Psychology, 23(3), 220-230.

Assessment of Treatment Integrity in School Consultation and Prereferral Intervention.

This paper discusses technical issues involved in the measurement of treatment integrity: specification of treatment components, deviations from treatment protocols and amount of behavior change, and psychometric issues in assessing treatment integrity.

Gresham, F. M. (1989). Assessment of treatment integrity in school consultation and prereferral intervention. School Psychology Review, 18(1), 37-50.

Features of fidelity in schools and classrooms: Constructs and measurement

The concept of treatment fidelity (integrity) is important across a diversity of fields that are involved with providing treatments or interventions to individuals. Despite variations in terminology across these diverse fields, the concern that treatments or interventions are delivered as prescribed or intended is of paramount importance to document that changes in individuals’ functioning (medical, nutritional, psychological, or behavioral) are due to treatments and not from uncontrolled, extraneous variables.

Gresham, F. M. (2016). Features of fidelity in schools and classrooms: Constructs and measurement. In Treatment fidelity in studies of educational intervention (pp. 30-46). Routledge.

Generalizability of multiple measures of treatment integrity: Comparisons among direct observations, permanent products, and self-report

The concept of treatment integrity is an essential component to data-based decision making within a response-to-intervention model. Although treatment integrity is a topic receiving increased attention in the school-based intervention literature, relatively few studies have been conducted regarding the technical adequacy of treatment integrity assessment methods.

Gresham, F. M., Dart, E. H., & Collins, T. A. (2017). Generalizability of Multiple Measures of Treatment Integrity: Comparisons Among Direct Observation, Permanent Products, and Self-Report. School Psychology Review, 46(1), 108-121.

Treatment integrity in applied behavior analysis with children.

This study reviewed all intervention studies with children as subjects published in the Journal of Applied Behavior Analysis between 1980 and 1990. The authors found that treatment integrity was reported in only 16% of the studies.

Gresham, F. M., Gansle, K. A., & Noell, G. H. (1993). Treatment integrity in applied behavior analysis with children. Journal of Applied Behavior Analysis, 26(2), 257-263.

Treatment integrity in learning disabilities intervention research: Do we really know how treatments are implemented

The authors reviewed three learning disabilities journals published between 1995 and 1999 to determine what percentage of the intervention studies reported measures of treatment integrity. Only 18.5% reported treatment integrity measures.

Gresham, F. M., MacMillan, D. L., Beebe-Frankenberger, M. E., & Bocian, K. M. (2000). Treatment integrity in learning disabilities intervention research: Do we really know how treatments are implemented. Learning Disabilities Research & Practice, 15(4), 198-205.

Treatment Integrity of Literacy Interventions for Students With Emotional and/or Behavioral Disorders: A Review of Literature

This review examines the treatment integrity data of literacy interventions for students with emotional and/or behavioral disorders (EBD). Findings indicate that studies focusing on literacy interventions for students with EBD included clear operational definitions and data on treatment integrity to a higher degree than has been found in other disciplines.

Griffith, A. K., Duppong Hurley, K., & Hagaman, J. L. (2009). Treatment integrity of literacy interventions for students with emotional and/or behavioral disorders: A review of literature. Remedial and Special Education, 30(4), 245-255.

A developmental model for determining whether the treatment is actually implemented

Determining whether or not the treatment or innovation under study is actually in use, and if so, how it is being used, is essential to the interpretation of any study. The concept of Levels of Use of the Innovation (LoU) permits an operational, cost-feasible description and documentation of whether or not an innovation or treatment is being implemented.

Hall, G. E., & Loucks, S. F. (1977). A developmental model for determining whether the treatment is actually implemented. American Educational Research Journal, 14(3), 263-276.

Will the “principles of effectiveness” improve prevention practice? Early findings from a diffusion study

This study examines adoption and implementation of the US Department of Education's new policy, the “Principles of Effectiveness,” from a diffusion of innovations theoretical framework. In this report, we evaluate adoption in relation to Principle 3: the requirement to select research-based programs.

Hallfors, D., & Godette, D. (2002). Will the “principles of effectiveness” improve prevention practice? Early findings from a diffusion study. Health Education Research, 17(4), 461–470.

At a loss for words: How a flawed idea is teaching millions of kids to be poor readers.

For decades, schools have taught children the strategies of struggling readers, using a theory about reading that cognitive scientists have repeatedly debunked. And many teachers and parents don't know there's anything wrong with it.

Hanford, E. (2019). At a loss for words: How a flawed idea is teaching millions of kids to be poor readers. APM Reports. https://www.apmreports.org/story/2019/08/22/whats-wrong-how-schools-teach-reading

Transporting efficacious treatments to field settings: The link between supervisory practices and therapist fidelity in MST programs

Validated a measure of clinical supervision practices, further validated a measure of therapist adherence, and examined the association between supervisory practices and therapist adherence to an evidence-based treatment model (i.e., multisystemic therapy [MST]) in real-world clinical settings.

Henggeler, S. W., Schoenwald, S. K., Liao, J. G., Letourneau, E. J., & Edwards, D. L. (2002). Transporting efficacious treatments to field settings: The link between supervisory practices and therapist fidelity in MST programs. Journal of Clinical Child and Adolescent Psychology, 31(2), 155-167.

Learning from teacher observations: Challenges and opportunities posed by new teacher evaluation systems

This article discusses the current focus on using teacher observation instruments as part of new teacher evaluation systems being considered and implemented by states and districts.

Hill, H., & Grossman, P. (2013). Learning from teacher observations: Challenges and opportunities posed by new teacher evaluation systems. Harvard Educational Review, 83(2), 371-384.

Observational Assessment for Planning and Evaluating Educational Transitions: An Initial Analysis of Template Matching

Used a direct observation-based approach to identify behavioral conditions in sending (i.e., special education) and in receiving (i.e., regular education) classrooms and to identify targets for intervention that might facilitate mainstreaming of behavior-disordered (BD) children.

Hoier, T. S., McConnell, S., & Pallay, A. G. (1987). Observational assessment for planning and evaluating educational transitions: An initial analysis of template matching. Behavioral Assessment.

Criteria for Evaluating Treatment Guidelines

This document presents a set of criteria to be used in evaluating treatment guidelines that have been promulgated by health care organizations, government agencies, professional associations, or other entities. The purpose of treatment guidelines is to educate health care professionals and health care systems about the most effective treatments available.

Hollon, D., Miller, I. J., & Robinson, E. (2002). Criteria for evaluating treatment guidelines. American Psychologist, 57(12), 1052-1059.

Examining the evidence base for school-wide positive behavior support.

The purposes of this manuscript are to propose core features that may apply to any practice or set of practices that proposes to be evidence-based in relation to School-wide Positive Behavior Support (SWPBS).

Horner, R. H., Sugai, G., & Anderson, C. M. (2010). Examining the evidence base for school-wide positive behavior support. Focus on Exceptional Children, 42(8), 1.

The importance of contextual fit when implementing evidence-based interventions.

“Contextual fit” is based on the premise that the match between an intervention and local context affects both the quality of intervention implementation and whether the intervention actually produces the desired outcomes for children and families.

Horner, R., Blitz, C., & Ross, S. (2014). The importance of contextual fit when implementing evidence-based interventions. Washington, DC: U.S. Department of Health and Human Services, Office of the Assistant Secretary for Planning and Evaluation. https://aspe.hhs.gov/system/files/pdf/77066/ib_Contextual.pdf

The mirage: Confronting the hard truth about our quest for teacher development

This piece describes the widely held perception among education leaders that we already know how to help teachers improve, and that we could achieve our goal of great teaching in far more classrooms if we just applied what we know more widely.

Jacob, A., & McGovern, K. (2015). The mirage: Confronting the hard truth about our quest for teacher development. Brooklyn, NY: TNTP. https://tntp.org/assets/documents/TNTP-Mirage_2015.pdf.

Efficacy of teacher‐implemented good behavior game despite low treatment integrity

The Good Behavior Game (GBG) is a well-documented group contingency designed to reduce disruptive behavior in classroom settings. However, few studies have evaluated the GBG with students who engage in severe problem behavior in alternative schools, and there are few demonstrations of training teachers in those settings to implement the GBG.

Joslyn, P. R., & Vollmer, T. R. (2020). Efficacy of teacher‐implemented Good Behavior Game despite low treatment integrity. Journal of Applied Behavior Analysis, 53(1), 465-474.

Student Achievement through Staff Development

This book provides research as well as case studies of successful professional development strategies and practices for educators.

Joyce, B. R., & Showers, B. (2002). Student achievement through staff development. ASCD.

Comparative outcome studies of psychotherapy: Methodological issues and strategies.

Considers design issues and strategies raised by comparative outcome studies, including the conceptualization, implementation, and evaluation of alternative treatments; assessment of treatment-specific processes and outcomes; and evaluation of the results. It is argued that addressing these and other issues may increase the yield from comparative outcome studies and may attenuate controversies regarding the adequacy of the demonstrations.

Kazdin, A. E. (1986). Comparative outcome studies of psychotherapy: Methodological issues and strategies. Journal of Consulting and Clinical Psychology, 54(1), 95.

The Effects of Feedback Interventions on Performance: A Historical Review, a Meta-Analysis, and a Preliminary Feedback Intervention Theory

The authors proposed a preliminary feedback intervention (FI) theory (FIT) and tested it with moderator analyses. The central assumption of FIT is that FIs change the locus of attention among 3 general and hierarchically organized levels of control: task learning, task motivation, and meta-tasks (including self-related) processes.

Kluger, A. N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119(2), 254.

Focus on teaching: Using video for high-impact instruction

This book examines the use of video recording to improve teacher performance. The book shows how every classroom can easily benefit from setting up a camera and hitting “record”.

Knight, J. (2013). Focus on teaching: Using video for high-impact instruction. (Pages 8-14). Thousand Oaks, CA: Corwin.

Dubious “Mozart effect” remains music to many Americans’ ears.

Scientists have discredited claims that listening to classical music enhances intelligence, yet this so-called "Mozart Effect" has actually exploded in popularity over the years.

Krakovsky, M. (2005). Dubious “Mozart effect” remains music to many Americans’ ears. Stanford, CA: Stanford Report

The Reading Wars

An old disagreement over how to teach children to read -- whole-language versus phonics -- has re-emerged in California, in a new form. Previously confined largely to education, the dispute is now a full-fledged political issue there, and is likely to become one in other states.

Lemann, N. (1997). The reading wars. The Atlantic Monthly, 280(5), 128–133.

Demonstration of Combined Efforts in School-Wide Academic and Behavioral Systems and Incidence of Reading and Behavior Challenges in Early Elementary Grades

This study provides descriptive data on the rates of office discipline referrals and beginning reading skills for students in grades K–3 for one school district that is implementing a three-tier prevention model for both reading and behavior support.

McIntosh, K., Chard, D. J., Boland, J. B., & Horner, R. H. (2006). Demonstration of combined efforts in school-wide academic and behavioral systems and incidence of reading and behavior challenges in early elementary grades. Journal of Positive Behavior Interventions, 8(3), 146-154.

Treatment integrity of school‐based interventions with children

This paper examines school-based experimental studies with individuals aged 0 to 18 years published in the Journal of Applied Behavior Analysis between 1991 and 2005. Only 30% of the studies provided treatment integrity data. Nearly half of the studies (45%) were judged to be at high risk for treatment inaccuracies.

McIntyre, L. L., Gresham, F. M., DiGennaro, F. D., & Reed, D. D. (2007). Treatment integrity of school‐based interventions with children in the Journal of Applied Behavior Analysis 1991–2005. Journal of Applied Behavior Analysis, 40(4), 659–672.

How to reverse the assault on science.

We should stop being so embarrassed by uncertainty and embrace it as a strength rather than a weakness of scientific reasoning.

McIntyre, L. (2019, May 22). How to reverse the assault on science. Scientific American. https://blogs.scientificamerican.com/observations/how-to-reverse-the-assault-on-science1/

Whole language lives on: The illusion of “balanced” reading instruction.

This position paper contends that the whole language approach to reading instruction has been disproved by research and evaluation but still pervades textbooks for teachers, instructional materials for classroom use, some states' language-arts standards and other policy documents, teacher licensing requirements and preparation programs, and the professional context in which teachers work.

Moats, L. C. (2000). Whole language lives on: The illusion of “balanced” reading instruction. Washington, DC: DIANE Publishing.

Treatment fidelity in outcome studies

Fidelity of treatment in outcome research refers to confirmation that the manipulation of the independent variable occurred as planned. Verification of fidelity is needed to ensure that fair, powerful, and valid comparisons of replicable treatments can be made.

Moncher, F. J., & Prinz, R. J. (1991). Treatment fidelity in outcome studies. Clinical Psychology Review, 11(3), 247-266.

Treatment Fidelity in Social Work Intervention Research: A Review of Published Studies

This study investigated treatment fidelity in social work research. The authors systematically reviewed all articles published in five prominent social work journals over a 5-year period.

Naleppa, M. J., & Cagle, J. G. (2010). Treatment fidelity in social work intervention research: A review of published studies. Research on Social Work Practice, 20(6), 674-681.

Increasing intervention implementation in general education following consultation: A comparison of two follow-up strategies.

This study compared the effects of discussing implementation challenges and of performance feedback on increasing the integrity of implementation. Performance feedback was more effective than discussion in increasing integrity.

Noell, G. H., & Witt, J. C. (2000). Increasing intervention implementation in general education following consultation: A comparison of two follow-up strategies. Journal of Applied Behavior Analysis, 33(3), 271.

Does treatment integrity matter? A preliminary investigation of instructional implementation and mathematics performance

This study examined the impact of three levels of treatment integrity on students' responding on mathematics tasks.

Noell, G. H., Gresham, F. M., & Gansle, K. A. (2002). Does treatment integrity matter? A preliminary investigation of instructional implementation and mathematics performance. Journal of Behavioral Education, 11(1), 51-67.

Increasing teacher intervention implementation in general education settings through consultation and performance feedback.

Examined the treatment integrity with which general education teachers implemented a reinforcement-based intervention designed to improve the academic performance of elementary school students.

Noell, G. H., Witt, J. C., Gilbertson, D. N., Ranier, D. D., & Freeland, J. T. (1997). Increasing teacher intervention implementation in general education settings through consultation and performance feedback. School Psychology Quarterly, 12(1), 77.

Why Trust Science?

Naomi Oreskes offers a bold and compelling defense of science, revealing why the social character of scientific knowledge is its greatest strength, and the greatest reason we can trust it.

Oreskes, N. (2019). Why trust science? Princeton, NJ: Princeton University Press.

Factors Related to Intervention Integrity and Child Outcome in Social Skills Interventions

The purpose of the current investigation was to assess the relationship between the integrity with which social skills interventions were implemented in early childhood special education classrooms and 3 factors: teacher ratings of intervention acceptability, consultative support for implementation, and individual child outcomes.

Peterson, C. A., & McConnell, S. R. (1996). Factors related to intervention integrity and child outcome in social skills interventions. Journal of Early Intervention, 20(2), 146-164.

Practical statistics for educators.

The book focuses on essential concepts in educational statistics, on understanding when to use various statistical tests, and on how to interpret the results. It introduces education students and practitioners to the use of statistics in education and explains basic statistical concepts in clear language.

Ravid, R. (2019). Practical statistics for educators. Rowman & Littlefield Publishers.

Using Coaching to Support Teacher Implementation of Classroom-based Interventions.

This study evaluated the impact of coaching on teachers' implementation of an intervention. Coaching that included higher rates of performance feedback resulted in the highest levels of treatment integrity.

Reinke, W., Stormont, M., Herman, K., & Newcomer, L. (2014). Using Coaching to Support Teacher Implementation of Classroom-based Interventions. Journal of Behavioral Education, 23(1), 150-167.

What is a conflict of interest?

This page describes what a conflict of interest is and what should be done about it.

Resources for Research Ethics Education. (2001). What is a conflict of interest? San Diego, CA: University of California, San Diego. http://research-ethics.org/topics/conflicts-of-interest/

Effectiveness of a multi-component treatment for improving mathematics fluency.

An alternating treatments design was used to evaluate the effectiveness of an educational program that combined timings (via chess clocks), peer tutoring (i.e., peer-delivered immediate feedback), positive-practice overcorrection, and performance feedback on mathematics fluency (i.e., speed of accurate responding) in four elementary students with mathematics skills deficits.

Rhymer, K. N., Dittmer, K. I., Skinner, C. H., & Jackson, B. (2000). Effectiveness of a multi-component treatment for improving mathematics fluency. School Psychology Quarterly, 15(1), 40.

Diffusion of innovations

This book looks at how new ideas spread via communication channels over time. Such innovations are initially perceived as uncertain and even risky. To overcome this uncertainty, most people seek out others like themselves who have already adopted the new idea. Thus the diffusion process typically takes months or years. But there are exceptions: use of the Internet in the 1990s, for example, may have spread more rapidly than any other innovation in the history of humankind.

Rogers, E. M. (2003). Diffusion of innovations (5th ed.). New York, NY: Free Press.

Conflicts of interest in research: Looking out for number one means keeping the primary interest front and center

This review will briefly address the nature of conflicts of interest in research, including the importance of both financial and non-financial conflicts, and the potential effectiveness and limits of various strategies for managing such conflicts.

Romain, P. L. (2015). Conflicts of interest in research: Looking out for number one means keeping the primary interest front and center. Current Reviews in Musculoskeletal Medicine, 8(2), 122–127.

Treatment integrity assessment within a problem-solving model

The purpose of this chapter is to explain the role of treatment integrity assessment within the “implementing solutions” stage of a problem-solving model.

Sanetti, L. H., & Kratochwill, T. R. (2005). Treatment integrity assessment within a problem-solving model. Assessment for intervention: A problem-solving approach, 314-325.

Extending Use of Direct Behavior Rating Beyond Student Assessment.

This paper reviews options for treatment integrity measurement emphasizing how direct behavior rating technology might be incorporated within a multi-tiered model of intervention delivery.

Sanetti, L. M. H., Chafouleas, S. M., Christ, T. J., & Gritter, K. L. (2009). Extending Use of Direct Behavior Rating Beyond Student Assessment. Assessment for Effective Intervention, 34(4), 251-258.

Treatment fidelity reporting in intervention outcome studies in the school psychology literature from 2009 to 2016

Both student outcomes and treatment fidelity data are necessary to draw valid conclusions about intervention effectiveness. Reviews of the intervention outcome literature in related fields, and prior reviews of the school psychology literature, suggest that many researchers failed to report treatment fidelity data.

Sanetti, L. M. H., Charbonneau, S., Knight, A., Cochrane, W. S., Kulcyk, M. C., & Kraus, K. E. (2020). Treatment fidelity reporting in intervention outcome studies in the school psychology literature from 2009 to 2016. Psychology in the Schools, 57(6), 901-922.

Treatment Integrity of Interventions With Children in the Journal of Positive Behavior Interventions: From 1999 to 2009

The authors reviewed all intervention studies published in the Journal of Positive Behavior Interventions between 1999 and 2009 to determine the percentage of those studies that reported a measure of treatment integrity. Slightly more than 40% did so.

Sanetti, L. M. H., Dobey, L. M., & Gritter, K. L. (2012). Treatment Integrity of Interventions With Children in the Journal of Positive Behavior Interventions: From 1999 to 2009. Journal of Positive Behavior Interventions, 14(1), 29-46.

Treatment integrity of interventions with children in the school psychology literature from 1995 to 2008

The authors reviewed four school psychology journals published between 1995 and 2008 to estimate the percentage of intervention studies that reported some measure of treatment integrity. About 50% did so.

Sanetti, L. M. H., Gritter, K. L., & Dobey, L. M. (2011). Treatment integrity of interventions with children in the school psychology literature from 1995 to 2008. School Psychology Review, 40(1), 72-84.

A review of comparison studies in applied behavior analysis

A commonly used research design in applied behavior analysis involves comparing two or more independent variables. This review of such comparison studies found some consistencies across them, with half resulting in equivalent outcomes across comparisons. In addition, most studies used an alternating treatments or multielement single-subject design and compared teaching methodologies.

Shabani, D. B., & Lam, W. Y. (2013). A review of comparison studies in applied behavior analysis. Behavioral Interventions, 28(2), 158-183.

Roles and responsibilities of researchers and practitioners for translating research to practice

This paper outlines the best practices for researchers and practitioners translating research to practice as well as recommendations for improving the process.

Shriver, M. D. (2007). Roles and responsibilities of researchers and practitioners for translating research to practice. Journal of Evidence-Based Practices for Schools, 8(1), 1-30.

Effects of two error-correction procedures on oral reading errors: Word supply versus sentence repeat

The effects of two error-correction procedures and a control condition on oral reading errors were compared in an alternating treatments design with three students with moderate intellectual disabilities. The two procedures evaluated were word supply and sentence repeat.

Singh, N. N. (1990). Effects of two error-correction procedures on oral reading errors: Word supply versus sentence repeat. Behavior Modification, 14(2), 188-199.

Twenty years of communication intervention research with individuals who have severe intellectual and developmental disabilities

This literature review was conducted to evaluate the current state of evidence supporting communication interventions for individuals with severe intellectual and developmental disabilities. Many researchers failed to report treatment fidelity or to assess basic aspects of intervention effects, including generalization, maintenance, and social validity.

Snell, M. E., Brady, N., McLean, L., Ogletree, B. T., Siegel, E., Sylvester, L., ... & Sevcik, R. (2010). Twenty years of communication intervention research with individuals who have severe intellectual and developmental disabilities. American Journal on Intellectual and Developmental Disabilities, 115(5), 364-380.

The effect of performance feedback on teachers’ treatment integrity: A meta-analysis of the single-case literature.

The current study extracted and aggregated data from single-case studies that used performance feedback (PF) in school settings to increase teachers' use of classroom-based interventions.

Solomon, B. G., Klein, S. A., & Politylo, B. C. (2012). The effect of performance feedback on teachers' treatment integrity: A meta-analysis of the single-case literature. School Psychology Review, 41(2).

Generalizability and Decision Studies of a Treatment Adherence Instrument.

Observational measurement of treatment adherence has long been considered the gold standard. However, little is known about either the generalizability of the scores from existing observational instruments or the sampling needed to obtain reliable scores. Results suggested that reliable cognitive–behavioral therapy adherence studies require at least 10 sessions per patient, assuming 12 patients per therapist and two coders, a challenging threshold even in well-funded research. Implications, including the importance of evaluating alternatives to observational measurement, are discussed.

Southam-Gerow, M. A., Bonifay, W., McLeod, B. D., Cox, J. R., Violante, S., Kendall, P. C., & Weisz, J. R. (2020). Generalizability and decision studies of a treatment adherence instrument. Assessment, 27(2), 321-333.

Coaching Classroom Management: Strategies & Tools for Administrators & Coaches

This book is written for school administrators, staff developers, behavior specialists, and instructional coaches to offer guidance in implementing research-based practices that establish effective classroom management in schools. The book provides administrators with practical strategies to maximize the impact of professional development.

Sprick, et al. (2010). Coaching Classroom Management: Strategies & Tools for Administrators & Coaches. Pacific Northwest Publishing.

Why Education Practices Fail?

This paper examines a range of education failures: common mistakes in how new practices are selected, implemented, and monitored. The goal is not a comprehensive listing of all education failures but rather to provide education stakeholders with an understanding of the importance of vigilance when implementing new practices.

States, J., & Keyworth, R. (2020). Why Practices Fail. Oakland, CA: The Wing Institute. https://www.winginstitute.org/roadmap-overview

Treatment Integrity Strategies Overview

Inattention to treatment integrity is a primary factor in implementation failure. Treatment integrity is defined as the extent to which an intervention is executed as designed, and the accuracy and consistency with which it is implemented.

States, J., Detrich, R., & Keyworth, R. (2017). Treatment Integrity Strategies. Oakland, CA: The Wing Institute. https://www.winginstitute.org/effective-instruction-treatment-integrity-strategies.

Conflict of interest in the debate over calcium-channel antagonists.

The debate about the safety of calcium-channel antagonists provided an opportunity to study financial conflicts of interest in medicine. This project was designed to examine the relation between authors' published positions on the safety of calcium-channel antagonists and their financial interactions with the pharmaceutical industry.

Stelfox, H. T., Chua, G., O'Rourke, K., & Detsky, A. S. (1998). Conflict of interest in the debate over calcium-channel antagonists. New England Journal of Medicine, 338(2), 101–106.

The effects of direct training and treatment integrity on treatment outcomes in school consultation

This study compared indirect training and direct training methods as a means of impacting levels of treatment integrity. Direct training methods produced better outcomes.

Sterling-Turner, H. E., Watson, T. S., & Moore, J. W. (2002). The effects of direct training and treatment integrity on treatment outcomes in school consultation. School Psychology Quarterly, 17(1).

Investigating the relationship between training type and treatment integrity.

The present study was conducted to investigate the relationship between training procedures and treatment integrity.

Sterling-Turner, H. E., Watson, T. S., Wildmon, M., Watkins, C., & Little, E. (2001). Investigating the relationship between training type and treatment integrity. School Psychology Quarterly, 16(1), 56.

Publication bias: The Achilles’ heel of systematic reviews?

This paper describes the problem of publication bias and its history in a number of fields, with special reference to educational research.

Isolating the effects of active responding in computer‐based instruction

This experiment evaluated the effects of requiring overt answer construction in computer-based programmed instruction using an alternating treatments design.

Tudor, R. M. (1995). Isolating the effects of active responding in computer‐based instruction. Journal of Applied Behavior Analysis, 28(3), 343-344.

Data point: Adult literacy in the United States.

Using data from the Program for the International Assessment of Adult Competencies (PIAAC), this Data Point summarizes the number of U.S. adults with low levels of English literacy and describes how they differ by nativity status and race/ethnicity.