Overview of Summative Assessment


States, J., Detrich, R. & Keyworth, R. (2018). Overview of Summative Assessment. Oakland, CA: The Wing Institute. https://www.winginstitute.org/assessment-summative.

Research supports the power of assessment to amplify learning and skill acquisition (Başol & Johanson, 2009). Summative assessment is a form of appraisal that occurs at the end of an instructional unit or at a specific point in time, such as the end of the school year. It evaluates mastery of learning and offers information on what students know and do not know. Frequently, summative assessment consists of evaluation tools designed to measure student performance against predetermined criteria based on specific learning standards. Examples of commonly employed tools include Advanced Placement exams, the National Assessment of Educational Progress (NAEP), end-of-lesson tests, midterm exams, final projects, and term papers. These assessments are routinely used for making high-stakes decisions; for this purpose, student knowledge or skill acquisition is often compared with standards or benchmarks (for example, Common Core Standards and high school graduation tests).

Summative assessment carries great weight because educators may use the data from each high-stakes test to make decisions with significant long-term consequences for a student’s future. Passing bestows important benefits, such as receiving a high school diploma, a scholarship, or entry into college, and failure can affect a child’s future employment prospects and earning potential as an adult (Geiser & Santelices, 2007). Additionally, summative assessment plays a role in improving future instruction by providing educators with data on the effectiveness of curriculum and instruction. Knowing what methods worked for a lesson or semester may not help current students, but it can provide educators with the necessary insights into how and where to redesign instructional practices to elevate next year’s student scores (Moss, 2013).

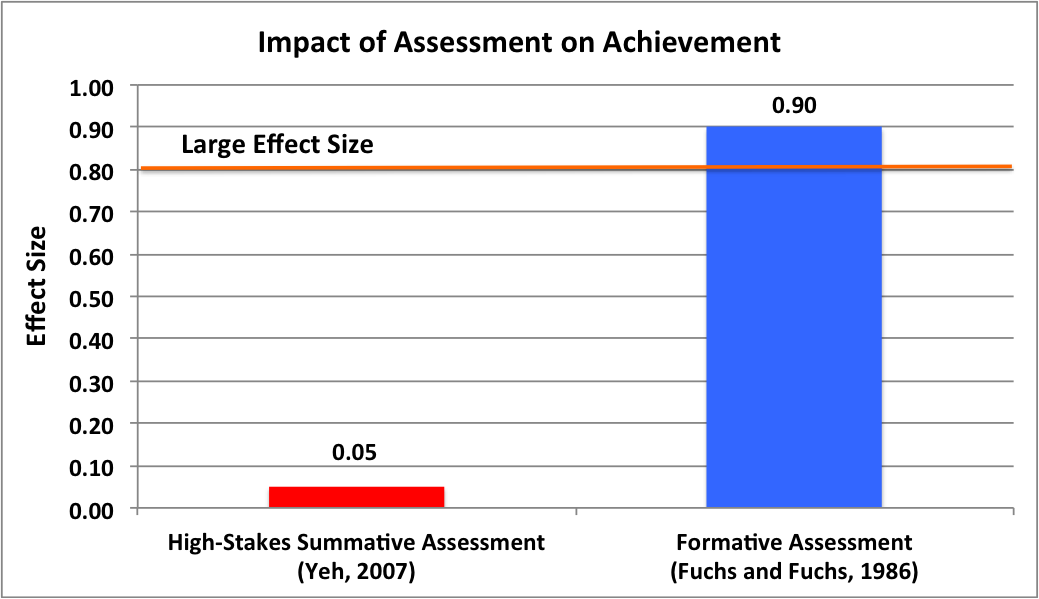

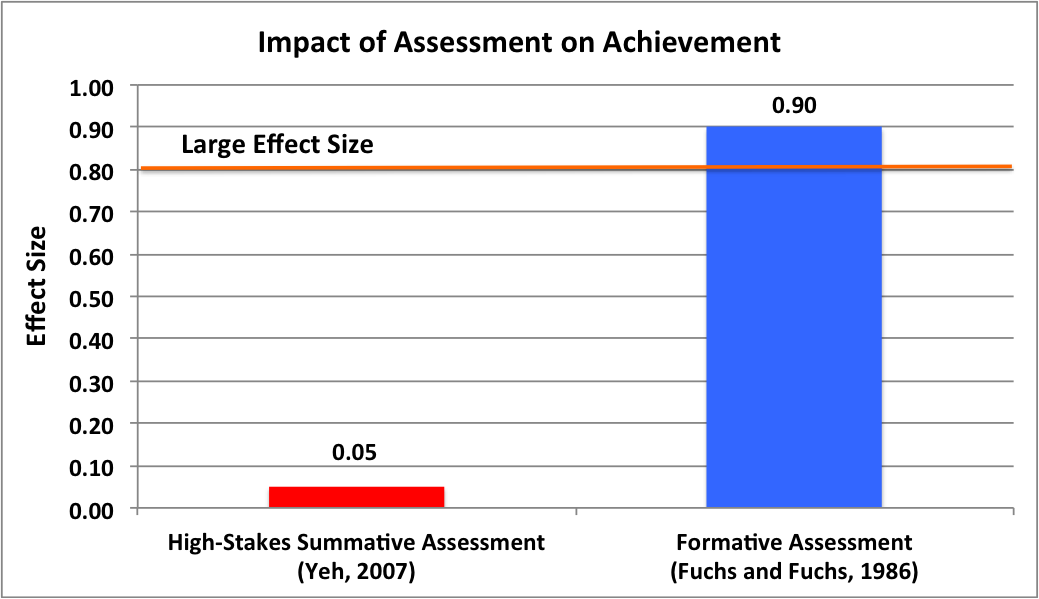

Despite the important role of summative assessment in education, research finds little evidence to support it as a critical factor in improved student achievement (Rosenshine, 2003; Yeh, 2007). Figure 1 provides a comparison of the effect size of formative assessment and high-stakes testing (an instrument of summative assessment), gleaned from multiple studies conducted over more than 40 years.

Figure 1. Comparison of formative assessment and summative assessment impact on student achievement
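For readers unfamiliar with effect sizes, the following is a minimal sketch of one common measure, the standardized mean difference (Cohen's d with a pooled standard deviation). The source does not say which effect size measure the underlying studies used, so this is an assumption, and the scores and the function name `cohens_d` are hypothetical and invented only for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical test scores, not data from any study cited here.
with_practice = [78, 85, 90, 72, 88, 81]
without_practice = [75, 80, 86, 70, 84, 79]

d = cohens_d(with_practice, without_practice)
print(f"Effect size d = {d:.2f}")
```

An effect size expresses the difference between two groups in standard deviation units, which is what allows results from studies that used different tests to be placed on one chart.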

Because summative assessment happens after instruction is over, it has little value as a diagnostic tool to guide teachers in making timely adjustments to instruction aimed at catching students who are falling behind. It does not provide teachers with vital information to use in crafting remedial instruction. Formative assessment is a much more effective instrument for adjusting instruction to help students master material (Garrison & Ehringhaus, 2007; Harlen & James, 1997).

Despite these shortcomings, summative assessment plays a pivotal role in education by troubleshooting weaknesses in the system. It provides educators with valuable information to determine the effectiveness of instruction for a particular unit of study, to make high-stakes decisions, and to evaluate the effectiveness of schoolwide interventions. It works to improve overall instruction (1) by providing feedback on progress measured against benchmarks, (2) by helping teachers to improve, and (3) as an accountability instrument for continuous improvement of systems (Hart et al., 2015).

Types of Summative Assessment

Educators generally rely on two forms of summative assessment: teacher-constructed (informal) and standardized (systematic). Teacher-constructed assessment is the most common form of assessment found in classrooms. It can provide objective data for appraising student performance, but it is vulnerable to bias. Standardized assessment is designed to overcome many of the biases that can taint teacher-constructed tools, but this form of assessment has its own limitations. Both types of summative assessment have a place in an effective education system, but for maximum positive effects they should be employed to meet the needs for which they were designed.

Teacher Constructed (Informal)

Teacher-constructed assessment, the most common and frequently applied type of summative assessment, is derived from teachers’ daily interactions and observations of how students behave and perform in school. Since schools began, teachers have depended predominantly on informal assessment, which today includes teacher-constructed tests and quizzes, grades, and portfolios, and relies heavily on a teacher’s professional judgment. Teachers inevitably form judgments, often accurate, about students and their performance (Barnett, 1988; Spencer, Detrich, & Slocum, 2012). Although many of these judgments help teachers understand where students stand in mastering a lesson, a meaningful percentage result in false understandings and conclusions. To be effective, a teacher-constructed assessment must deliver vital information needed for the teacher to make accurate conclusions about each student’s performance in a content area and to feel confident that performance is linked to instruction. Ensuring that a teacher-constructed instrument is reliable and valid is central to the assessment design process.

Research suggests that the main weaknesses of informal assessment relate to validity and reliability (AERA, 1999; Mertler, 1999). That is why it is crucial for teachers to adopt assessment procedures that are valid indicators of a student’s performance (they appraise what the assessment claims to appraise) and reliable (they provide information that can be replicated).

Validity is a measure of how well an instrument gauges the relevant skills of a student. The research literature identifies three basic types of validity: construct, criterion, and content. Students are best served when the teacher focuses on content validity, that is, making sure the content being tested is actually the content that was taught (Popham, 2014). Content validity requires no statistical calculations whereas both construct validity and criterion validity require knowledge of statistics and thus are not well suited to classroom teachers (Allen & Yen, 2002).
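A content validity check of this kind can be done without statistics, for example by listing which taught objectives each test item targets. The sketch below is one hypothetical way to do that bookkeeping; the function name `coverage_report`, the objectives, and the item labels are all invented for illustration and are not drawn from the cited sources.

```python
def coverage_report(taught_objectives, test_items):
    """Compare what was taught with what the test items assess.

    test_items maps an item label to the objective it is meant to assess.
    """
    taught = set(taught_objectives)
    assessed = set(test_items.values())
    return {
        # Objectives that were taught but that no item assesses.
        "untested_objectives": sorted(taught - assessed),
        # Items that target content outside what was taught.
        "items_on_untaught_content": sorted(
            item for item, objective in test_items.items() if objective not in taught
        ),
    }


# Hypothetical lesson objectives and test blueprint.
taught = ["add fractions", "subtract fractions", "compare fractions"]
items = {
    "Q1": "add fractions",
    "Q2": "add fractions",
    "Q3": "multiply fractions",
    "Q4": "compare fractions",
}

report = coverage_report(taught, items)
print(report)
```

In this made-up example the report would flag one objective with no matching item and one item on content that was never taught, which are the two mismatches a content validity review is meant to catch.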

Ultimately, speedy feedback of student performance after an assessment enhances the value of all forms of assessment. To maximize the positive impact, both student and teacher should be provided with detailed and specific information on a student’s achievement. Timely comments and explanations from teachers can clarify how a student performed and are essential components of quality instruction and performance improvement. This information tells students where they stand with regard to the teacher’s expectations. Timely feedback is also essential for teachers (Gibbs & Simpson, 2005). Otherwise, teachers remain in the dark about the effectiveness of their instructional strategies and methods. Research suggests that testing without feedback is likely to produce disappointing results, and the quantity and quality of the research support including feedback as an integral part of assessment (Başol, 2003).

Designing Teacher-Constructed Assessments

The essential question to ask when developing an informal teacher-constructed assessment is this: Does the assessment consistently assess what the teacher intended to be evaluated based on the material being taught? Best practices in assessment suggest that teachers start answering this question by incorporating assessment design into the instructional design process. Assessments are best generated at the same time as lesson plans. Although teaching to the test has acquired negative overtones, it is precisely what all student assessment is meant to accomplish. Teachers cannot and should not assess every item they teach, but it is important that they identify and prioritize the critical lesson elements for inclusion in a summary assessment.

Instruction and assessment are meant to complement one another. When this occurs it helps teachers, policymakers, administrators, and parents know what students are capable of doing at specific stages in the education process. A good match of assessment with instruction leads to more effective scope and sequencing, enhancing the acquisition of knowledge and the mastery of skills required for success in subsequent grades as well as success after graduation from school (Reigeluth, 1999).

The following are guidelines that lead to increased effectiveness of teacher-constructed assessment (Reynolds, Livingston, Willson, & Willson, 2010; Shillingburg, 2016; Taylor & Nolen, 2005):

- Clarify the purpose of the assessment and the intended use of its results.

- Define the domain (content and skills) to be assessed.

- Match instruction to standards required of each domain.

- Identify the characteristics of the population to be assessed and consider how these data might influence the design of the assessment.

- Ensure that all prerequisite skills required for the lesson have been taught to the students.

- Ensure that the assessment evaluates skills compatible with and required for success in future lessons.

- Review with the students the purpose of the assessment and the knowledge and skills to be assessed.

- Consider possible task formats, timing, and response modes and whether they are compatible with the assessment as well as how the scores will be used.

- Outline how validity will be evaluated and measured.

  - Methods include matching test questions to lesson plans, lesson objectives, and standards, and obtaining student feedback after the assessment.

  - Content-related evidence often consists of deciding whether the assessment methods are appropriate, whether the tasks or problems provide an adequate sample of the student’s performance, and whether the scoring system captures the performance.

- When possible, review test items with colleagues and students; revise as necessary.

- Review issues of reliability.

  - Make sure that the assessment includes enough items and tasks (examples of performance) to report a reliable score.

- Evaluate the relative weight allotted to each task, to each content category, and to each skill being assessed.

- Pilot-test the assessment, then revise as necessary. Are the results consistent with formative assessments administered on the content being taught?
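The reliability guideline above (enough items to report a reliable score) can be made concrete with two standard psychometric formulas that the source does not itself name: Cronbach's alpha, an internal-consistency estimate, and the Spearman-Brown formula, which projects reliability when a test is lengthened. The following is a minimal sketch under those assumptions; the pilot scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Internal-consistency estimate; scores has one row per student, one column per item."""
    k = len(scores[0])
    # Variance of each item across students.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Variance of each student's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def spearman_brown(reliability, length_factor):
    """Projected reliability if the test were length_factor times as long."""
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


# Hypothetical pilot data: 5 students, 4 items scored 1 (correct) or 0 (incorrect).
pilot_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(pilot_scores)
projected = spearman_brown(alpha, 2)  # what if the test had twice as many comparable items?
print(f"alpha = {alpha:.2f}, projected alpha at double length = {projected:.2f}")
```

The Spearman-Brown projection assumes the added items are comparable in quality to the existing ones, so it is best read as a rough planning aid rather than a guarantee.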

Standardized (Systematic)

Standardized testing is the second major category of summative assessment commonly used in schools. Students and teachers are very familiar with these standardized tests, which have become ubiquitous. Over the past 20 years, they have played an ever-increasing role in schools, especially since the passage in 2001 of the No Child Left Behind Act (NCLB, 2002). Standardized tests have increased not only in influence but also in quantity. Typically, students are engaged in taking standardized tests between 20 and 25 hours each year (Bangert-Drowns, Kulik, Kulik, & Morgan, 1991; Hart et al., 2015). The average 8th grader spends between 1.6% and 2.3% of classroom time on standardized tests, not including test preparation (Bangert-Drowns et al., 1991; Lazarín, 2014). A student will be required to participate in approximately 112 mandatory standardized exams during his or her academic career (Hart et al., 2015).

Although research finds that student performance increases with the frequency of assessment, it also shows that improvement tapers off with excess testing (Bangert-Drowns et al., 1991). Regardless of where educators stand on the issue of standardized testing, most can agree that these assessments should be reduced to the minimum number required to obtain the critical information for which they were designed. The aim is to decrease the number of standardized tests to those indispensable in providing educators with the basic information to make high-stakes decisions and for schools to implement a continuous improvement process. Ultimately, everyone is best served by reducing redundancy in test taking in order to maximize instructional time (Wang, Haertel, & Walberg, 1990).

Standardized tests provide valuable data to be used by educators for school reform and continuous improvement purposes. Data from these tests can include early indicators that point to interventions for preventing potential future problems. The data can also reveal when the system has broken down or highlight exemplary performers that schools can emulate. Using such data can be invaluable as a systemwide tool (Celio, 2013). Despite the potential value of summative assessment as a tool to monitor and improve systems, research finds minimal positive impact on student performance when the tests are used for high-stakes purposes or to hold teachers and schools accountable (Carnoy & Loeb, 2002; Hanushek & Raymond, 2005). The increased use of incentives and other accountability measures, which have cost enormous sums, reduced instruction time, and added stress to teachers, can be linked to only an average effect size of 0.05 in improvement of student achievement (Yeh, 2007).

As previously noted, formative assessment has been shown to be a much more effective tool in helping individual students maintain progress toward meeting accepted performance standards, and the rigor and cost required to design valid and reliable standardized tests place them outside the realm of tools that teachers can personally design. In the end, it is important to understand what summative assessment is best suited to accomplish. When it comes to improving systems, standardized assessment is well suited for meeting a school’s needs. But for improving an individual student’s performance, formative assessment is more appropriate.

Summary

Summative assessment is a commonplace tool used by teachers and school administrators. It ranges from a simple teacher-constructed end-of-lesson exam to standardized tests that determine graduation from high school and entry into college. If used for the purposes for which it was designed, summative assessment plays an important role in education. When used appropriately, it can deliver objective data to support a teacher’s professional judgment, inform high-stakes decisions, and supply the information needed for adjustments in curriculum and instruction that will ultimately improve the education process. When used incorrectly or for accountability purposes, summative assessment can take valuable instruction time away from students and increase teacher and student stress without producing notable results.

Citations

Allen, M. J., & Yen, W. M. (2002). Introduction to measurement theory. Long Grove, IL: Waveland Press.

American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1999). Standards for educational and psychological testing. Washington, DC: American Educational Research Association (AERA).

Bangert-Drowns, R. L., Kulik, C. L. C., Kulik, J. A., & Morgan, M. (1991). The instructional effect of feedback in test-like events. Review of Educational Research, 61(2), 213–238.

Barnett, D. W. (1988). Professional judgment: A critical appraisal. School Psychology Review, 17(4), 658–672.

Başol, G. (2003). Effectiveness of frequent testing over achievement: a meta-analysis study. Unpublished doctorate dissertation, Ohio University, Athens, OH.

Başol, G., & Johanson, G. (2009). Effectiveness of frequent testing over achievement: A meta analysis study. International Journal of Human Sciences, 6(2), 99–121.

Belfield, C. R., & Crosta, P. M. (2012). Predicting success in college: The importance of placement tests and high school transcripts. CCRC Working Paper No. 42. New York, NY: Community College Research Center, Teachers College, Columbia University.

Brennan, R. L. (Ed.) (2006). Educational measurement (4th ed.). Westport, CT: Praeger Publishers.

Carnoy, M., & Loeb, S. (2002). Does external accountability affect student outcomes? A cross-state analysis. Educational Evaluation and Policy Analysis, 24(4), 305–331.

Celio, M. B. (2013). Seeking the magic metric: Using evidence to identify and track school system quality. In Performance Feedback: Using Data to Improve Educator Performance (Vol. 3, pp. 97–118). Oakland, CA: The Wing Institute.

Espenshade, T. J., & Chung, C. Y. (2010). Standardized admission tests, college performance, and campus diversity. Unpublished paper, Office of Population Research, Princeton University, Princeton, NJ.

Fuchs, L. S. & Fuchs, D. (1986). Effects of systematic formative evaluation: A meta-analysis. Exceptional Children, 53(3), 199–208.

Garrison, C., & Ehringhaus, M. (2007). Formative and summative assessments in the classroom. Westerville, OH: Association for Middle Level Education. https://www.amle.org/portals/0/pdf/articles/Formative_Assessment_Article_Aug2013.pdf

Geiser, S., & Santelices, M. V. (2007). Validity of high-school grades in predicting student success beyond the freshman year: High-school record vs. standardized tests as indicators of four-year college outcomes. Research and Occasional Paper Series. Berkeley, CA: Center for Studies in Higher Education, University of California.

Gibbs, G., & Simpson, C. (2005). Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education, 1, 3–31.

Hanushek, E. A., & Raymond, M. E. (2005). Does school accountability lead to improved student performance? Journal of Policy Analysis and Management, 24(2), 297–327.

Harlen, W., & James, M. (1997). Assessment and learning: Differences and relationships between formative and summative assessment. Assessment in Education: Principles, Policy & Practice, 4(3), 365–379.

Hart, R., Casserly, M., Uzzell, R., Palacios, M., Corcoran, A., & Spurgeon, L. (2015). Student testing in America’s great city schools: An inventory and preliminary analysis. Washington, DC: Council of the Great City Schools.

Lazarín, M. (2014). Testing overload in America’s schools. Washington, DC: Center for American Progress.

McMillan, J. H., & Schumacher, S. (1997). Research in education: A conceptual approach (4th ed.). New York, NY: Longman.

Mertler, C. A. (1999). Teachers’ (mis)conceptions of classroom test validity and reliability. Paper presented at the annual meeting of the Mid-Western Educational Research Association, Chicago, IL.

Moss, C. M. (2013). Research on classroom summative assessment. In J. H. McMillan (Ed.), Handbook of research on classroom assessment (pp. 235–255). Los Angeles, CA: Sage.

No Child Left Behind (NCLB) Act of 2001, Pub. L. No. 107-110, § 115, Stat. 1425. (2002).

Popham, W. J. (2014). Classroom assessment: What teachers need to know (7th ed.). Boston, MA: Pearson Education.

Reigeluth, C. M. (1999). The elaboration theory: Guidance for scope and sequence decisions. In C. M. Reigeluth (Ed.), Instructional design theories and models: A new paradigm of instructional theory (Vol. II, pp. 425–453). Mahwah, NJ: Lawrence Erlbaum.

Reynolds, C. R., Livingston, R. B., Willson, V., & Willson, V. (2010). Measurement and assessment in education. Upper Saddle River, NJ: Pearson Education.

Rosenshine, B. (2003). High-stakes testing: Another analysis. Education Policy Analysis Archives, 11(24), 1–8.

Spencer, T. D., Detrich, R., & Slocum, T. A. (2012). Evidence-based practice: A framework for making effective decisions. Education and Treatment of Children, 35(2), 127–151.

Shillingburg, W. (2016). Understanding validity and reliability in classroom, school-wide, or district-wide assessments to be used in teacher/principal evaluations. Retrieved from https://cms.azed.gov/home/GetDocumentFile?id=57f6d9b3aadebf0a04b2691a

Taylor, C. S., & Nolen, S. B. (2005). Classroom assessment: Supporting teaching and learning in real classrooms (2nd ed.). Upper Saddle River, NJ: Pearson Prentice Hall.

Wang, M. C., Haertel, G. D., & Walberg, H. J. (1990). What influences learning? A content analysis of review literature. The Journal of Educational Research, 84(1), 30–43.

Yeh, S. S. (2007). The cost-effectiveness of five policies for improving student achievement. American Journal of Evaluation, 28(4), 416–436.

TITLE

SYNOPSIS

CITATION

LINK

Assessment Overview

Research recognizes the power of assessment to amplify learning and skill acquisition. This overview describes and compares two types of Assessments educators rely on: Formative Assessment and Summative Assessment.

Must try harder: Evaluating the role of effort in educational attainment.

This paper is based on the simple idea that students’ educational achievement is affected by the effort put in by those participating in the education process: schools, parents, and, of course, the students themselves.

PISA Reports

The Programme for International Student Assessment (PISA) is a survey that aims to evaluate education systems worldwide by testing the skills and knowledge of 15-year-old students.

PISA Reports Retrieved from http://www.oecd.org/pisa/.

Introduction: Proceedings from the Wing Institute’s Sixth Annual Summit on Evidence-Based Education: Performance Feedback: Using Data to Improve Educator Performance.

This book is compiled from the proceedings of the sixth summit entitled “Performance Feedback: Using Data to Improve Educator Performance.” The 2011 summit topic was selected to help answer the following question: What basic practice has the potential for the greatest impact on changing the behavior of students, teachers, and school administrative personnel?

States, J., Keyworth, R. & Detrich, R. (2013). Introduction: Proceedings from the Wing Institute’s Sixth Annual Summit on Evidence-Based Education: Performance Feedback: Using Data to Improve Educator Performance. In Education at the Crossroads: The State of Teacher Preparation (Vol. 3, pp. ix-xii). Oakland, CA: The Wing Institute.

Teachers’ subject matter knowledge as a teacher qualification: A synthesis of the quantitative literature on students’ mathematics achievement

The main focus of this study is to find different kinds of variables that might contribute to variations in the strength and direction of the relationship by examining quantitative studies that relate mathematics teachers’ subject matter knowledge to student achievement in mathematics.

Ahn, S., & Choi, J. (2004). Teachers' Subject Matter Knowledge as a Teacher Qualification: A Synthesis of the Quantitative Literature on Students' Mathematics Achievement. Online Submission.

Teachers' Subject Matter Knowledge as a Teacher Qualification: A Synthesis of the Quantitative Literature on Students' Mathematics Achievement

The aim of this paper is to examine a variety of features of research that might account for mixed findings of the relationship between teachers' subject matter knowledge and student achievement based on meta-analytic technique.

Ahn, S., & Choi, J. (2004). Teachers' Subject Matter Knowledge as a Teacher Qualification: A Synthesis of the Quantitative Literature on Students' Mathematics Achievement. Online Submission.

Observations of effective teacher-student interactions in secondary school classrooms: Predicting student achievement with the classroom assessment scoring system–secondary

Multilevel modeling techniques were used with a sample of 643 students enrolled in 37 secondary school classrooms to predict future student achievement (controlling for baseline achievement) from observed teacher interactions with students in the classroom, coded using the Classroom Assessment Scoring System—Secondary.

Allen, J., Gregory, A., Mikami, A., Lun, J., Hamre, B., & Pianta, R. (2013). Observations of effective teacher–student interactions in secondary school classrooms: Predicting student achievement with the classroom assessment scoring system—secondary. School Psychology Review, 42(1), 76.

Introduction to measurement theory

The authors effectively cover the construction of psychological tests and the interpretation of test scores and scales; critically examine classical true-score theory; and explain theoretical assumptions and modern measurement models, controversies, and developments.

Allen, M. J., & Yen, W. M. (2001). Introduction to measurement theory. Waveland Press.

School interventions that work: Targeted support for low-performing students

This report breaks out key steps in the school identification and improvement process, focusing on (1) a diagnosis of school needs; (2) a plan to improve schools; and (3) evidenced-based interventions that work.

Effects of Acceptability on Teachers' Implementation of Curriculum-Based Measurement and Student Achievement in Mathematics Computation

The authors investigated the hypothesis that treatment acceptability influences teachers' use of a formative evaluation system (curriculum-based measurement) and, relatedly, the amount of gain effected in math for their students.

Allinder, R. M., & Oats, R. G. (1997). Effects of acceptability on teachers' implementation of curriculum-based measurement and student achievement in mathematics computation. Remedial and Special Education, 18(2), 113-120.

Predictors of Elementary-aged Writing Fluency Growth in Response to a Performance Feedback Writing Intervention

The goal of the proposed study was to determine whether third-grade students’ (n = 74) transcriptional skills and gender predicted their writing fluency growth in response to a performance feedback intervention.

Alvis, A. V. (2019). Predictors of Elementary-aged Students’ Writing Fluency Growth in Response to a Performance Feedback Writing Intervention.

Standards for educational and psychological testing

The “Standards for Educational and Psychological Testing” were approved as APA policy by the APA Council of Representatives in August 2013.

American Educational Research Association, American Psychological Association, Joint Committee on Standards for Educational, Psychological Testing (US), & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. American Educational Research Association.

Effectiveness of frequent testing over achievement: A meta analysis study

The current study, a meta-analysis of 78 studies, aims to determine the overall effect size for testing at different frequency levels and to identify other study characteristics related to the effectiveness of frequent testing.

Başol, G., & Johanson, G. (2009). Effectiveness of frequent testing over achievement: A meta analysis study. Journal of Human Sciences, 6(2), 99-121.

The Instructional Effect of Feedback in Test-Like Events

Feedback is an essential construct for many theories of learning and instruction, and an understanding of the conditions for effective feedback should facilitate both theoretical development and instructional practice.

Bangert-Drowns, R. L., Kulik, C. L. C., Kulik, J. A., & Morgan, M. (1991). The instructional effect of feedback in test-like events. Review of Educational Research, 61(2), 213-238.

Professional judgment: A critical appraisal.

Professional judgment is required whenever conditions are uncertain. This article provides an analysis of professional judgment and describes sources of error in decision making.

Barnett, D. W. (1988). Professional judgment: A critical appraisal. School Psychology Review, 17(4), 658-672.

High impact teaching for sport and exercise psychology educators.

Just as an athlete needs effective practice to be able to compete at high levels of performance, students benefit from formative practice and feedback to master skills and content in a course. At the most complex and challenging end of the spectrum of summative assessment techniques, the portfolio involves a collection of artifacts of student learning organized around a particular learning outcome.

Beers, M. J. (2020). Playing like you practice: Formative and summative techniques to assess student learning. High impact teaching for sport and exercise psychology educators, 92-102.

Predicting Success in College: The Importance of Placement Tests and High School Transcripts.

This paper uses student-level data from a statewide community college system to examine the validity of placement tests and high school information in predicting course grades and college performance.

Belfield, C. R., & Crosta, P. M. (2012). Predicting Success in College: The Importance of Placement Tests and High School Transcripts. CCRC Working Paper No. 42. Community College Research Center, Columbia University.

Stepping stones: Principal career paths and school outcomes

This study examines the detrimental impact of principal turnover, including lower teacher retention and lower student achievement. Particularly hard hit are high poverty schools, which often lose principals at a higher rate as they transition to lower poverty, higher student achievement schools.

Beteille, T., Kalogrides, D., & Loeb, S. (2012). Stepping stones: Principal career paths and school outcomes. Social Science Research, 41(4), 904-919.

The effect of charter schools on student achievement.

Assessing literature that uses either experimental (lottery) or student-level growth-based methods, this analysis infers the causal impact of attending a charter school on student performance.

Betts, J. R., & Tang, Y. E. (2019). The effect of charter schools on student achievement. School choice at the crossroads: Research perspectives, 67-89.

Assessment and classroom learning

This paper is a review of the literature on classroom formative assessment.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in education, 5(1), 7-74.

Assessment and classroom learning. Assessment in Education: principles, policy & practice

This is a review of the literature on classroom formative assessment. Several studies show firm evidence that innovations designed to strengthen the frequent feedback that students receive about their learning yield substantial learning gains.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: principles, policy & practice, 5(1), 7-74.

Inside the black box: Raising standards through classroom assessment

Firm evidence shows that formative assessment is an essential component of classroom work and that its development can raise standards of achievement, Mr. Black and Mr. Wiliam point out. Indeed, they know of no other way of raising standards for which such a strong prima facie case can be made.

Black, P., & Wiliam, D. (2010). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappan, 92(1), 81-90.

Human characteristics and school learning

This paper theorizes that variations in learning and the level of learning of students are determined by the students' learning histories and the quality of instruction they receive.

Bloom, B. (1976). Human characteristics and school learning. New York: McGraw-Hill.

Differences in the note-taking skills of students with high achievement, average achievement, and learning disabilities

In this study, the note-taking skills of middle school students with LD were compared to peers with average and high achievement. The results indicate differences in the number and type of notes recorded between students with LD and their peers and differences in test performance of lecture content.

Boyle, J. R., & Forchelli, G. A. (2014). Differences in the note-taking skills of students with high achievement, average achievement, and learning disabilities. Learning and Individual Differences, 35, 9-14.

Educational Measurement

This fourth edition provides in-depth treatments of critical measurement topics, and the chapter authors are acknowledged experts in their respective fields.

Brennan, R. L. (Ed.) (2006). Educational measurement (4th ed.). Westport, CT: Praeger Publishers.

National board certification and teacher effectiveness: Evidence from a random assignment experiment

The National Board for Professional Teaching Standards (NBPTS) assesses teaching practice based on videos and essays submitted by teachers. The authors compared the performance of classrooms of elementary students in Los Angeles randomly assigned to NBPTS applicants and to comparison teachers.

Cantrell, S., Fullerton, J., Kane, T. J., & Staiger, D. O. (2008). National board certification and teacher effectiveness: Evidence from a random assignment experiment (No. w14608). National Bureau of Economic Research.

Does external accountability affect student outcomes? A cross-state analysis.

This study developed a zero-to-five index of the strength of accountability in 50 states based on the use of high-stakes testing to sanction and reward schools, and analyzed whether that index is related to student gains on the NAEP mathematics test in 1996–2000.

Carnoy, M., & Loeb, S. (2002). Does external accountability affect student outcomes? A cross-state analysis. Educational Evaluation and Policy Analysis, 24(4), 305-331.

Seeking the Magic Metric: Using Evidence to Identify and Track School System Quality

This paper discusses the search for a “magic metric” in education: an index/number that would be generally accepted as the most efficient descriptor of a school’s performance in a district.

Celio, M. B. (2013). Seeking the Magic Metric: Using Evidence to Identify and Track School System Quality. In Performance Feedback: Using Data to Improve Educator Performance (Vol. 3, pp. 97-118). Oakland, CA: The Wing Institute.

Buried Treasure: Developing a Management Guide From Mountains of School Data

This report provides a practical “management guide” for an evidence-based key indicator data decision system for school districts and schools.

Celio, M. B., & Harvey, J. (2005). Buried Treasure: Developing A Management Guide From Mountains of School Data. Center on Reinventing Public Education.

Scientific practitioner: Assessing student performance: An important change is needed

A rationale and model for changing assessment efforts in schools from simple description to the integration of information from multiple sources for the purpose of designing interventions are described.

Christenson, S. L., & Ysseldyke, J. E. (1989). Scientific practitioner: Assessing student performance: An important change is needed. Journal of School Psychology, 27(4), 409-425.

Teacher Learning through Assessment: How Student-Performance Assessments Can Support Teacher Learning

This paper describes how teacher learning through involvement with student-performance assessments has been accomplished in the United States and around the world, particularly in countries that have been recognized for their high-performing educational systems.

Creating reports using longitudinal data: how states can present information to support student learning and school system improvement

This report provides ten actions to get data into the right hands of educators.

Data Quality Campaign. (2010). Creating reports using longitudinal data: how states can present information to support student learning and school system improvement.

Treatment Integrity: Fundamental to Education Reform

To produce better outcomes for students, two things are necessary: (1) effective, scientifically supported interventions and (2) implementation of those interventions with high integrity. Typically, much greater attention has been given to identifying effective practices. This review focuses on features of high-quality implementation.

Detrich, R. (2014). Treatment integrity: Fundamental to education reform. Journal of Cognitive Education and Psychology, 13(2), 258-271.

A Meta-Analytic Review Of The Distribution Of Practice Effect: Now You See It, Now You Don't

This meta-analysis reviews 63 studies on the relationship between conditions of massed practice and spaced practice with respect to task performance, which yields an overall mean weighted effect size of 0.46.

Donovan, J. J., & Radosevich, D. J. (1999). A meta-analytic review of the distribution of practice effect: Now you see it, now you don't. Journal of Applied Psychology, 84(5), 795.

Performance Assessment of Students' Achievement: Research and Practice.

Examines the fundamental characteristics of and reviews empirical research on performance assessment of diverse groups of students, including those with mild disabilities. Discussion of the technical qualities of performance assessment and barriers to its advancement leads to the conclusion that performance assessment should play a supplementary role in the evaluation of students with significant learning problems.

Elliott, S. N. (1998). Performance Assessment of Students' Achievement: Research and Practice. Learning Disabilities Research and Practice, 13(4), 233-41.

The Utility of Curriculum-Based Measurement and Performance Assessment as Alternatives to Traditional Intelligence and Achievement Tests.

Curriculum-based measurement and performance assessments can provide valuable data for making special-education eligibility decisions. Reviews applied research on these assessment approaches and discusses the practical context of treatment validation and decisions about instructional services for students with diverse academic needs.

Elliott, S. N., & Fuchs, L. S. (1997). The Utility of Curriculum-Based Measurement and Performance Assessment as Alternatives to Traditional Intelligence and Achievement Tests. School Psychology Review, 26(2), 224-33.

Standardized admission tests, college performance, and campus diversity

A disproportionate reliance on SAT scores in college admissions has generated a growing number and volume of complaints. Some applicants, especially members of underrepresented minority groups, believe that the test is culturally biased. Other critics argue that high school GPA and results on SAT subject tests are better than scores on the SAT reasoning test at predicting college success, as measured by grades in college and college graduation.

Espenshade, T. J., & Chung, C. Y. (2010). Standardized admission tests, college performance, and campus diversity. Office of Population Research, Princeton University.

Implementation Research: A Synthesis of the Literature

This is a comprehensive literature review of implementation, examining all stages, beginning with adoption and ending with sustainability.

Fixsen, D. L., Naoom, S. F., Blase, K. A., & Friedman, R. M. (2005). Implementation research: A synthesis of the literature.

Connecting Performance Assessment to Instruction: A Comparison of Behavioral Assessment, Mastery Learning, Curriculum-Based Measurement, and Performance Assessment.

This digest summarizes principles of performance assessment, which connects classroom assessment to learning. Specific ways that assessment can enhance instruction are outlined, as are criteria that assessments should meet in order to inform instructional decisions. Performance assessment is compared to behavioral assessment, mastery learning, and curriculum-based measurement.

Fuchs, L. S. (1995). Connecting Performance Assessment to Instruction: A Comparison of Behavioral Assessment, Mastery Learning, Curriculum-Based Measurement, and Performance Assessment. ERIC Digest E530.

Effects of Systematic Formative Evaluation: A Meta-Analysis

In this meta-analysis of studies that utilize formative assessment, the authors report an effect size of 0.7.

Fuchs, L. S., & Fuchs, D. (1986). Effects of Systematic Formative Evaluation: A Meta-Analysis. Exceptional Children, 53(3), 199-208.

The Effects of Frequent Curriculum-Based Measurement and Evaluation on Pedagogy, Student Achievement, and Student Awareness of Learning

This study examined the educational effects of repeated curriculum-based measurement and evaluation. Thirty-nine special educators, each having three to four pupils in the study, were assigned randomly to a repeated curriculum-based measurement/evaluation (experimental) treatment or a conventional special education evaluation (contrast) treatment.

Fuchs, L. S., Deno, S. L., & Mirkin, P. K. (1984). The effects of frequent curriculum-based measurement and evaluation on pedagogy, student achievement, and student awareness of learning. American Educational Research Journal, 21(2), 449-460.

Technological Advances Linking the Assessment of Students' Academic Proficiency to Instructional Planning

This article describes a research program conducted over the past 8 years to address how technology can be used to surmount the difficulties of implementing ongoing assessment. The research program focused on one variety of objective, ongoing assessment known as curriculum-based measurement, in the areas of reading, spelling, and math.

Fuchs, L. S., Fuchs, D., & Hamlett, C. L. (1993). Technological advances linking the assessment of students' academic proficiency to instructional planning. Journal of Special Education Technology, 12(1), 49-62.

Effects of expert system advice within curriculum-based measurement on teacher planning and student achievement in spelling.

Thirty special education teachers were assigned randomly to 3 groups: curriculum-based measurement (CBM) with expert system advice (CBM-ES), CBM with no expert system advice (CBM-NES), and control (i.e., no CBM).

Fuchs, L. S., Fuchs, D., Hamlett, C. L., & Allinder, R. M. (1991). Effects of expert system advice within curriculum-based measurement on teacher planning and student achievement in spelling. School Psychology Review.

Formative evaluation of academic progress: How much growth can we expect?

The purpose of this study was to examine students' weekly rates of academic growth, or slopes of achievement, when Curriculum-Based Measurement (CBM) is conducted repeatedly over 1 year.

Fuchs, L. S., Fuchs, D., Hamlett, C. L., Walz, L., & Germann, G. (1993). Formative evaluation of academic progress: How much growth can we expect? School Psychology Review, 22, 27-27.

Mathematics performance assessment in the classroom: Effects on teacher planning and student problem solving

The purpose of this study was to examine effects of classroom-based performance-assessment (PA)-driven instruction.

Fuchs, L. S., Fuchs, D., Karns, K., Hamlett, C. L., & Katzaroff, M. (1999). Mathematics performance assessment in the classroom: Effects on teacher planning and student problem solving. American Educational Research Journal, 36(3), 609-646.

Comparisons among individual and cooperative performance assessments and other measures of mathematics competence

The purposes of this study were to examine how well 3 measures, representing 3 points on a traditional-alternative mathematics assessment continuum, interrelated and discriminated students achieving above, at, and below grade level and to explore effects of cooperative testing for the most innovative measure (performance assessment).

Fuchs, L. S., Fuchs, D., Karns, K., Hamlett, C., Katzaroff, M., & Dutka, S. (1998). Comparisons among individual and cooperative performance assessments and other measures of mathematics competence. The Elementary School Journal, 99(1), 23-51.

Effects of curriculum-based measurement and consultation on teacher planning and student achievement in mathematics operations

The purpose of this study was to assess the effects of (a) ongoing, systematic assessment of student growth (i.e., curriculum-based measurement) and (b) expert system instructional consultation on teacher planning and student achievement in the area of mathematics operations.

Fuchs, L. S., Fuchs, D., Hamlett, C. L., & Stecker, P. M. (1991). Effects of curriculum-based measurement and consultation on teacher planning and student achievement in mathematics operations. American Educational Research Journal, 28(3), 617-641.

Formative and summative assessments in the classroom

As a classroom teacher or administrator, how do you ensure that the information shared in a student-led conference provides a balanced picture of the student's strengths and weaknesses? The answer to this is to balance both summative and formative classroom assessment practices and information gathering about student learning.

Garrison, C., & Ehringhaus, M. (2007). Formative and summative assessments in the classroom.

Validity of High-School Grades in Predicting Student Success beyond the Freshman Year: High-School Record vs. Standardized Tests as Indicators of Four-Year College Outcomes

High-school grades are often viewed as an unreliable criterion for college admissions, owing to differences in grading standards across high schools, while standardized tests are seen as methodologically rigorous, providing a more uniform and valid yardstick for assessing student ability and achievement. The present study challenges that conventional view. The study finds that high-school grade point average (HSGPA) is consistently the best predictor not only of freshman grades in college, the outcome indicator most often employed in predictive-validity studies, but of four-year college outcomes as well.

Geiser, S., & Santelices, M. V. (2007). Validity of High-School Grades in Predicting Student Success beyond the Freshman Year: High-School Record vs. Standardized Tests as Indicators of Four-Year College Outcomes. Research & Occasional Paper Series: CSHE.6.07. Center for Studies in Higher Education.

The folklore of principal evaluation

Principal evaluation shares many characteristics with the more general field of personnel evaluation. That is, evaluations may have the purpose of gathering data to help improve performance (formative), or may use the collected information to make decisions about promotion or firing (summative).

Ginsberg, R., & Berry, B. (1990). The folklore of principal evaluation. Journal of Personnel Evaluation in Education, 3(3), 205-230.

Impacts of comprehensive teacher induction: Final results from a randomized controlled study

To evaluate the impact of comprehensive teacher induction relative to the usual induction support, the authors conducted a randomized experiment in a set of districts that were not already implementing comprehensive induction.

Glazerman, S., Isenberg, E., Dolfin, S., Bleeker, M., Johnson, A., Grider, M., & Jacobus, M. (2010). Impacts of Comprehensive Teacher Induction: Final Results from a Randomized Controlled Study. NCEE 2010-4027. National Center for Education Evaluation and Regional Assistance.

Dealing with Flexibility in Assessments for Students with Significant Cognitive Disabilities

Alternate assessment and instruction is a key issue for individuals with disabilities. This report presents an analysis, by assessment system component, to identify where and when flexibility can be built into assessments.

Gong, B., & Marion, S. (2006). Dealing with Flexibility in Assessments for Students with Significant Cognitive Disabilities. Synthesis Report 60. National Center on Educational Outcomes, University of Minnesota.

Testing High Stakes Tests: Can We Believe the Results of Accountability Tests?

This study examines whether the results of standardized tests are distorted when rewards and sanctions are attached to them.

Greene, J., Winters, M., & Forster, G. (2004). Testing high-stakes tests: Can we believe the results of accountability tests? The Teachers College Record, 106(6), 1124-1144.

Can comprehension be taught? A quantitative synthesis of “metacognitive” studies

To assess the effect of “metacognitive” instruction on reading comprehension, 20 studies, with a total student population of 1,553, were compiled and quantitatively synthesized. In this compilation of studies, metacognitive instruction was found particularly effective for junior high students (seventh and eighth grades). Among the metacognitive skills, awareness of textual inconsistency and the use of self-questioning as both a monitoring and a regulating strategy were most effective. Reinforcement was the most effective teaching strategy.

Haller, E. P., Child, D. A., & Walberg, H. J. (1988). Can comprehension be taught? A quantitative synthesis of “metacognitive” studies. Educational Researcher, 17(9), 5-8.

Does school accountability lead to improved student performance?

The authors' analysis of special education placement rates, a frequently identified area of concern, does not show any responsiveness to the introduction of accountability systems.

Hanushek, E. A., & Raymond, M. E. (2005). Does school accountability lead to improved student performance? Journal of Policy Analysis and Management: The Journal of the Association for Public Policy Analysis and Management, 24(2), 297-327.

Assessment and learning: Differences and relationships between formative and summative assessment

The central argument of this paper is that the formative and summative purposes of assessment have become confused in practice and that as a consequence assessment fails to have a truly formative role in learning.

Harlen, W., & James, M. (1997). Assessment and learning: differences and relationships between formative and summative assessment. Assessment in Education: Principles, Policy & Practice, 4(3), 365-379.

Student testing in America’s great city schools: An inventory and preliminary analysis

This report aims to provide the public, along with teachers and leaders in the Great City Schools, with objective evidence about the extent of standardized testing in public schools and how these assessments are used.

Hart, R., Casserly, M., Uzzell, R., Palacios, M., Corcoran, A., & Spurgeon, L. (2015). Student Testing in America's Great City Schools: An Inventory and Preliminary Analysis. Council of the Great City Schools.

Visible learning: A synthesis of over 800 meta-analyses relating to achievement

Hattie’s book is designed as a meta-meta-study that collects, compares and analyses the findings of many previous studies in education. Hattie focuses on schools in the English-speaking world but most aspects of the underlying story should be transferable to other countries and school systems as well. Visible Learning is nothing less than a synthesis of more than 50,000 studies covering more than 80 million pupils. Hattie uses the statistical measure effect size to compare the impact of many influences on students’ achievement, e.g. class size, holidays, feedback, and learning strategies.

Hattie, J. (2008). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. New York, NY: Routledge.

Effects of an interdependent group contingency on the transition behavior of middle school students with emotional and behavioral disorders

An ABAB design was used to evaluate the effectiveness of an interdependent group contingency with randomized components to improve the transition behavior of middle school students identified with emotional and behavioral disorders (EBDs) served in an alternative educational setting. The intervention was implemented by one teacher with three classes of students, and the dependent variable was the percentage of students ready to begin class at the appropriate time.

Hawkins, R. O., Haydon, T., McCoy, D., & Howard, A. (2017). Effects of an interdependent group contingency on the transition behavior of middle school students with emotional and behavioral disorders. School Psychology Quarterly, 32(2), 282.

A review of the effectiveness of guided notes for students who struggle learning academic content.

The purpose of this article is to examine research on the effectiveness of guided notes. Results indicate that using guided notes has a positive effect on student outcomes, as this practice has been shown to improve accuracy of note taking and student test scores.

Haydon, T., Mancil, G. R., Kroeger, S. D., McLeskey, J., & Lin, W. Y. J. (2011). A review of the effectiveness of guided notes for students who struggle learning academic content. Preventing School Failure: Alternative Education for Children and Youth, 55(4), 226-231.

A Longitudinal Examination of the Diagnostic Accuracy and Predictive Validity of R-CBM and High-Stakes Testing

The purpose of this study is to compare different statistical and methodological approaches to standard setting and determining cut scores using R-CBM and performance on high-stakes tests.

Hintze, J. M., & Silberglitt, B. (2005). A longitudinal examination of the diagnostic accuracy and predictive validity of R-CBM and high-stakes testing. School Psychology Review, 34(3), 372.

Variability in reading ability gains as a function of computer-assisted instruction method of presentation

This study examines the effects on early reading skills of three different methods of presenting material with computer-assisted instruction.

Johnson, E. P., Perry, J., & Shamir, H. (2010). Variability in reading ability gains as a function of computer-assisted instruction method of presentation. Computers and Education, 55(1), 209–217.

Retention and nonretention of at-risk readers in first grade and their subsequent reading achievement

Some of the specific reasons for the success or failure of retention in the area of reading were examined via an in-depth study of a small number of both at-risk retained students and comparably low-skilled promoted children.

Juel, C., & Leavell, J. A. (1988). Retention and nonretention of at-risk readers in first grade and their subsequent reading achievement. Journal of Learning Disabilities, 21(9), 571-580.

Identifying Specific Learning Disability: Is Responsiveness to Intervention the Answer?

Responsiveness to intervention (RTI) is being proposed as an alternative model for making decisions about the presence or absence of specific learning disability. The author argues that there are many questions about RTI that remain unanswered, and radical changes in proposed regulations are not warranted at this time.

Kavale, K. A. (2005). Identifying specific learning disability: Is responsiveness to intervention the answer? Journal of Learning Disabilities, 38(6), 553-562.

Uneven Transparency: NCLB Tests Take Precedence in Public Assessment Reporting for Students with Disabilities

This report analyzes the public reporting of state assessment results for students with disabilities.

Klein, J. A., Wiley, H. I., & Thurlow, M. L. (2006). Uneven transparency: NCLB tests take precedence in public assessment reporting for students with disabilities (Technical Report 43). Minneapolis, MN: University of Minnesota, National Center on Educational Outcomes.

The Effects of Feedback Interventions on Performance: A Historical Review, a Meta-Analysis, and a Preliminary Feedback Intervention Theory

The authors proposed a preliminary feedback intervention theory (FIT) and tested it with moderator analyses. The central assumption of FIT is that feedback interventions (FIs) change the locus of attention among 3 general and hierarchically organized levels of control: task learning, task motivation, and meta-tasks (including self-related) processes.

Kluger, A. N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119(2), 254.

Teachers’ Essential Guide to Formative Assessment

Just as we consider our formative years when we draw conclusions about ourselves, a formative assessment is where we begin to draw conclusions about our students' learning. Formative assessment moves can take many forms and generally target skills or content knowledge that is relatively narrow in scope (as opposed to summative assessments, which seek to assess broader sets of knowledge or skills).

Knowles, J. (2020). Teachers’ Essential Guide to Formative Assessment.

A self-regulated flipped classroom approach to improving students’ learning performance in a mathematics course.

In this paper, a self-regulated flipped classroom approach is proposed to help students schedule their out-of-class time to effectively read and comprehend the learning content before class, such that they are capable of interacting with their peers and teachers in class for in-depth discussions.

Lai, C. L., & Hwang, G. J. (2016). A self-regulated flipped classroom approach to improving students’ learning performance in a mathematics course. Computers and Education, 100, 126–140.

Testing overload in America’s schools

In undertaking this study, two goals were established: (1) to obtain a better understanding of how much time students spend taking tests; and (2) to identify the degree to which the tests are mandated by districts or states.

Lazarín, M. (2014). Testing Overload in America's Schools. Center for American Progress.

Reading on grade level in third grade: How is it related to high school performance and college enrollment.

This study uses longitudinal administrative data to examine the relationship between third-grade reading level and four educational outcomes: eighth-grade reading performance, ninth-grade course performance, high school graduation, and college attendance.

Lesnick, J., Goerge, R., Smithgall, C., & Gwynne, J. (2010). Reading on grade level in third grade: How is it related to high school performance and college enrollment. Chicago: Chapin Hall at the University of Chicago, 1, 12.

Cost-effectiveness and educational policy.

This article provides a summary of measuring the fiscal impact of practices in education and educational policy.

Levin, H. M., & McEwan, P. J. (2002). Cost-effectiveness and educational policy. Larchmont, NY: Eye on Education.

Learning through action: Teacher candidates and performance assessments

Performance assessments such as the Teacher Performance Assessment (edTPA) are used by state departments of education as one measure of competency to grant teaching certification. Although the edTPA is used as a summative assessment, research studies in other forms of performance assessments, such as the Performance Assessment for California Teachers and the National Board Certification for Professional Teaching Standards have shown that they can be used as learning tools for both preservice and experienced teachers and as a form of feedback for teacher education programs.

Lin, S. (2015). Learning through action: Teacher candidates and performance assessments (Doctoral dissertation).

Complex, performance-based assessment: Expectations and validation criteria

It is argued that there is a need to rethink the criteria by which the quality of educational assessments is judged, and a set of criteria that are sensitive to some of the expectations for performance-based assessments is proposed.

Linn, R. L., Baker, E. L., & Dunbar, S. B. (1991). Complex, performance-based assessment: Expectations and validation criteria. Educational Researcher, 20(8), 15-21.

A Theory-Based Meta-Analysis of Research on Instruction.

This research synthesis examines instructional research in a functional manner to provide guidance for classroom practitioners.

Marzano, R. J. (1998). A Theory-Based Meta-Analysis of Research on Instruction.

A New Era of School Reform: Going Where the Research Takes Us.

This monograph attempts to synthesize and interpret the extant research from the last 4 decades on the impact of schooling on students' academic achievement.

Marzano, R. J. (2001). A New Era of School Reform: Going Where the Research Takes Us.

Classroom Instruction That Works: Research Based Strategies For Increasing Student Achievement

This is a study of the effects of classroom management on student engagement and achievement.

Marzano, R. J., Pickering, D., & Pollock, J. E. (2001). Classroom instruction that works: Research-based strategies for increasing student achievement. ASCD.

Improving education through standards-based reform.

This report offers recommendations for the implementation of standards-based reform and outlines possible consequences for policy changes. It summarizes both the vision and intentions of standards-based reform and the arguments of its critics.

McLaughlin, M. W., & Shepard, L. A. (1995). Improving Education through Standards-Based Reform. A Report by the National Academy of Education Panel on Standards-Based Education Reform. National Academy of Education, Stanford University, CERAS Building, Room 108, Stanford, CA 94305-3084.

Research in education: A conceptual approach

This pioneering text provides a comprehensive and highly accessible introduction to the principles, concepts, and methods currently used in educational research. This text also helps students master skills in reading, conducting, and understanding research.

McMillan, J. H., & Schumacher, S. (1997). Research in education: A conceptual approach (4th ed.). New York, NY: Longman.

Teachers’ (mis)conceptions of classroom test validity and reliability

This study examined processes and techniques teachers used to ensure that their assessments were valid and reliable, noting the extent to which they engaged in these processes.

Mertler, C. A. (1999). Teachers' (Mis)Conceptions of Classroom Test Validity and Reliability.

Research on Classroom Summative Assessment

The primary purpose of this chapter is to review the literature on teachers’ summative assessment practices to note their influence on teachers and teaching and on students and learning.

Moss, C. M. (2013). Research on classroom summative assessment. SAGE handbook of research on classroom assessment, 235-255.

Blended learning report

This research report presents the findings of this formative and summative research effort.

Murphy, R., Snow, E., Mislevy, J., Gallagher, L., Krumm, A., & Wei, X. (2014). Blended learning report. Austin, TX: Michael and Susan Dell Foundation.

The Nation's Report Card: Math Grade 4 National Results.

To investigate the relationship between students’ achievement and various contextual factors, NAEP collects information from teachers about their background, education, and training.

National Assessment of Educational Progress (NAEP). (2011a). The nation's report card: Math grade 4 national results. Retrieved from http://nationsreportcard.gov/math_2011/gr4_national.asp?subtab_id=Tab_3&tab_id=tab2#chart

The Nation's Report Card: Reading Grade 12 National Results

How did U.S. students perform on the most recent assessment?

National Assessment of Educational Progress (NAEP). (2011b). The nation's report card: Reading grade 12 national results. Retrieved from http://nationsreportcard.gov/reading_2009/gr12_national.asp?subtab_id=Tab_3&tab_id=tab2#

An Introduction to NAEP

This non-technical brochure provides introductory information on the development, administration, scoring, and reporting of the National Assessment of Educational Progress (NAEP). The brochure also provides information about the online resources available on the NAEP website.

The nation’s report card: Grade 12 reading and mathematics 2009 national and pilot state results

Twelfth-graders’ performance in reading and mathematics has improved since 2005. Nationally representative samples of twelfth-graders from 1,670 public and private schools across the nation participated in the 2009 National Assessment of Educational Progress (NAEP).

National Center for Education Statistics (NCES). (2010b). The nation’s report card: Grade 12 reading and mathematics 2009 national and pilot state results. (NCES 2011-455). Retrieved from http://nces.ed.gov/nationsreportcard/pdf/main2009/2011455.pdf

Data Explorer for Long-term Trend.

The Data Explorer for the Long-Term Trend assessments provides national mathematics and reading results dating from the 1970s.

National Center for Education Statistics (NCES). (2011a). Data explorer for long-term trend. [Data file]. Retrieved from http://nces.ed.gov/nationsreportcard/lttdata/

The Nation’s Report Card: Mathematics 2011

Nationally representative samples of 209,000 fourth-graders and 175,200 eighth-graders participated in the 2011 National Assessment of Educational Progress (NAEP) in mathematics.

National Center for Education Statistics (NCES). (2011d). The nation’s report card: Mathematics 2011. (NCES 2012-458). Retrieved from http://nces.ed.gov/nationsreportcard/pdf/main2011/2012458.pdf

Students Meeting State Proficiency Standards and Performing at or above the NAEP Proficient Level: 2009.

Percentages of students meeting state proficiency standards and performing at or above the NAEP Proficient level, by subject, grade, and state: 2009

National Center for Education Statistics (NCES). (2011f). Students meeting state proficiency standards and performing at or above the NAEP proficient level: 2009. Retrieved from http://nces.ed.gov/nationsreportcard/studies/statemapping/2009_naep_state_table.asp

The nation's report card: Writing 2011

In this new national writing assessment sample, 24,100 eighth-graders and 28,100 twelfth-graders engaged with writing tasks and composed their responses on a computer. The assessment tasks reflected writing situations common to both academic and workplace settings and asked students to write for several purposes and communicate to different audiences.

National Center for Education Statistics. (2012). The nation's report card: Writing 2011 (NCES 2012-470).

The Nation’s Report Card

The National Assessment of Educational Progress (NAEP) is a national assessment of what America's students know in mathematics, reading, science, writing, the arts, civics, economics, geography, and U.S. history.

National Center for Education Statistics

No Child Left Behind Act of 2001

No Child Left Behind Act of 2001 ESEA Reauthorization

No Child Left Behind Act of 2001, Pub. L. No. 107-110 (2002).

PISA 2018 Results (Volume I): What Students Know and Can Do.

International Comparisons in Fourth-Grade Reading Literacy: Findings from the Progress in International Reading Literacy Study (PIRLS) of 2001

This report describes the reading literacy of fourth-graders in 35 countries, including the United States. The report provides information on a variety of reading topics, but with an emphasis on U.S. results. The report also presents information on reading and instruction in the classroom and explores the reading habits of fourth-graders outside of school. This report defines reading literacy for fourth-graders, highlights the performance and distribution of fourth-graders relative to fourth-graders in other countries, and illustrates, through international benchmarking, the performance of assessed students.

Ogle, L. T., Sen, A., Pahlke, E., Jocelyn, L., Kastberg, D., Roey, S., & Williams, T. (2003). International Comparisons in Fourth-Grade Reading Literacy: Findings from the Progress in International Reading Literacy Study (PIRLS) of 2001.

PISA 2009 Results: Learning Trends. Changes in Student Performance Since 2000 (Volume V)

This volume of PISA 2009 results looks at the progress countries have made in raising student performance and improving equity in the distribution of learning opportunities.

Organisation for Economic Co-operation and Development (OECD). (2010a). PISA 2009 results: Learning trends–Changes in student performance since 2000 (Volume V). Retrieved from https://www.oecd-ilibrary.org/education/pisa-2009-results-learning-trends_9789264091580-en

PISA 2009 Results: Overcoming social background–Equity in learning opportunities and outcomes

Volume II of PISA's 2009 results looks at how successful education systems moderate the impact of social background and immigrant status on student and school performance.

Organisation for Economic Co-operation and Development (OECD). (2010b). PISA 2009 results: Overcoming social background–Equity in learning opportunities and outcomes (Volume II). Retrieved from http://dx.doi.org/10.1787/9789264091504-en

PISA 2006 Technical Report

The OECD’s Programme for International Student Assessment (PISA) surveys, which take place every three years, have been designed to collect information about 15-year-old students in participating countries.

Organisation for Economic Co-operation and Development (OECD). (2006). PISA 2006 technical report. Retrieved from http://www.oecd.org/pisa/pisaproducts/42025182.pdf

PISA 2009 Results: What students know and can do–Student performance in reading, mathematics and science (Volume I)

This first volume of PISA 2009 survey results provides comparable data on 15-year-olds' performance on reading, mathematics, and science across 65 countries.

Organisation for Economic Co-operation and Development (OECD). (2010c). PISA 2009 results: What students know and can do–Student performance in reading, mathematics and science (Volume I). Retrieved from http://dx.doi.org/10.1787/9789264091450-en

Incorporating End-of-Course Exam Timing Into Educational Performance Evaluations

There is increased interest in extending the test-based evaluation framework in K-12 education to achievement in high school. High school achievement is typically measured by performance on end-of-course exams (EOCs), which test course-specific standards in subjects including algebra, biology, English, geometry, and history, among others. Recent research indicates that when students take particular courses can have important consequences for achievement and subsequent outcomes. The contribution of the present study is to develop an approach for modeling EOC test performance with respect to the timing of the course.

Parsons, E., Koedel, C., Podgursky, M., Ehlert, M., & Xiang, P. B. (2015). Incorporating end-of-course exam timing into educational performance evaluations. Journal of Research on Educational Effectiveness, 8(1), 130-147.

Classroom Assessment Scoring System (CLASS) Manual

Positive teacher-student interactions are a primary ingredient of quality early educational experiences that launch future school success. With CLASS, educators finally have an observational tool to assess classroom quality in pre-kindergarten through grade 3 based on teacher-student interactions rather than the physical environment or a specific curriculum.

Pianta, R. C., La Paro, K. M., & Hamre, B. K. (2008). Classroom Assessment Scoring System™: Manual K-3. Baltimore, MD, US: Paul H Brookes Publishing.

Classroom assessment: What teachers need to know

This book provides the information classroom teachers need to address their assessment concerns.

Popham, W. J. (2014). Classroom assessment: What teachers need to know (7th ed.). Boston, MA: Pearson Education.

Instructional-design Theories and Models: A New Paradigm of Instructional Theory

Instructional theory describes a variety of methods of instruction (different ways of facilitating human learning and development) and when to use--and not use--each of those methods. It is about how to help people learn better.

Reigeluth, C. M. (1999). The elaboration theory: Guidance for scope and sequence decisions. Instructional design theories and models: A new paradigm of instructional theory, 2, 425-453.

Measurement and assessment in education

This text employs a pragmatic approach to the study of educational tests and measurement so that teachers will understand essential psychometric concepts and be able to apply them in the classroom.

Reynolds, C. R., Livingston, R. B., & Willson, V. (2010). Measurement and assessment in education. Upper Saddle River, NJ: Pearson Education.

Getting back on track: The effect of online versus face-to-face credit recovery in Algebra I on high school credit accumulation and graduation

This research brief is one in a series for the Back on Track Study that presents the findings regarding the relative impact of online versus face-to-face Algebra I credit recovery on students’ academic outcomes, aspects of implementation of the credit recovery courses, and the effects over time of expanding credit recovery options for at-risk students.

Rickles, J., Heppen, J., Allensworth, E., Sorenson, N., Walters, K., & Clements, P. (2018). Getting back on track: The effect of online versus face-to-face credit recovery in Algebra I on high school credit accumulation and graduation. American Institutes for Research, Washington, DC; University of Chicago Consortium on School Research, Chicago, IL. https://www.air.org/system/files/downloads/report/Effect-Online-Versus-Face-to-Face-Credit-Recovery-in-Algebra-High-School-Credit-Accumulation-and-Graduation-June-2017.pdf

High-stakes testing: Another analysis

Amrein and Berliner (2002b) compared National Assessment of Educational Progress (NAEP) results in high-stakes states against the national average for NAEP scores. In this analysis, a comparison group was formed from states that did not attach consequences to their state-wide tests.

Rosenshine, B. (2003). High-stakes testing: Another analysis. Education Policy Analysis Archives, 11, 24.

LESSONS; Testing Reaches A Fork in the Road

Children take one of two types of standardized test, one "norm-referenced," the other "criteria-referenced." Although those names have an arcane ring, most parents are familiar with how the exams differ.

Rothstein, R. (2002, May 22). Lessons: Testing reaches a fork in the road. New York Times. http://www.nytimes.com/2002/05/22/nyregion/lessons-testing-reaches-a-fork-in-the-road.html

The Foundations of Educational Effectiveness

This book looks at research and theoretical models used to define educational effectiveness with the intent of providing educators with evidence-based options for implementing school improvement initiatives that make a difference in student performance.

Scheerens, J., & Bosker, R. (1997). The foundations of educational effectiveness. Oxford: Pergamon.

Research news and comment: Performance assessments: Political rhetoric and measurement reality

Part of President Bush's strategy for the transformation of "American Schools" lies in an accountability system that would track progress toward the nation's education goals as well as provide the impetus for reform. Here we focus primarily on issues of accountability and student achievement.

Shavelson, R. J., Baxter, G. P., & Pine, J. (1992). Research news and comment: Performance assessments: Political rhetoric and measurement reality. Educational Researcher, 21(4), 22-27.

Improvement on WASL Carries Asterisk

Scores went up in all grades and subjects this year on the Washington Assessment of Student Learning (WASL). But how much depends on how you look at them.

Shaw, L. (2004, September 2). Improvement on WASL carries asterisk. Seattle Times.

Understanding validity and reliability in classroom, school-wide, or district-wide assessments to be used in teacher/principal evaluations

The goal of this paper is to give teachers and administrators a general understanding of the concepts of validity and reliability, thereby giving them the confidence to develop their own assessments with a clear grasp of these terms.

Shillingburg, W. (2016). Understanding validity and reliability in classroom, school-wide, or district-wide assessments to be used in teacher/principal evaluations. Retrieved from https://cms.azed.gov/home/GetDocumentFile?id=57f6d9b3aadebf0a04b2691a

A Consumer’s Guide to Evaluating a Core Reading Program Grades K-3: A Critical Elements Analysis

A critical review of reading programs requires objective and in-depth analysis. For these reasons, the authors offer the following recommendations and procedures for analyzing critical elements of programs.

Simmons, D. C., & Kame’enui, E. J. (2003). A consumer’s guide to evaluating a core reading program grades K-3: A critical elements analysis. Retrieved December 19, 2006.

Strategic responses to school accountability measures: It's all in the timing

This paper examines efforts in the State of Wisconsin to improve test scores.

Sims, D. P. (2008). Strategic responses to school accountability measures: It's all in the timing. Economics of Education Review, 27(1), 58-68.

A Quantitative Synthesis of Research on Writing Approaches in Grades 2 to 12

This Campbell systematic review examines the impact of class size on academic achievement. The review summarises findings from 148 reports from 41 countries. Ten studies were included in the meta‐analysis.

Slavin, R. E., Lake, C., Inns, A., Baye, A., Dachet, D., & Haslam, J. (2019). A Quantitative Synthesis of Research on Writing Approaches in Grades 2 to 12. Best Evidence Encyclopedia.

Averaged freshman graduation rates for public secondary schools, by state or jurisdiction: Selected years, 1990–91 through 2008–09

The averaged freshman graduation rate provides an estimate of the percentage of students who receive a regular diploma within 4 years of entering ninth grade.

Snyder, T. D., & Dillow, S. A. (2012a). Averaged freshman graduation rates for public secondary schools, by state or jurisdiction: Selected years, 1990–91 through 2008–09. [Table 113]. Retrieved from http://nces.ed.gov/programs/digest/d11/tables/dt11_113.asp

Evidence-based Practice: A Framework for Making Effective Decisions.

Evidence-based practice is a decision-making framework. This paper describes the relationships among the three cornerstones of this framework.

Spencer, T. D., Detrich, R., & Slocum, T. A. (2012). Evidence-based Practice: A Framework for Making Effective Decisions. Education & Treatment of Children (West Virginia University Press), 35(2), 127-151.

Summative Assessment Overview

Summative assessment is an appraisal of learning at the end of an instructional unit or at a specific point in time. It compares student knowledge or skills against standards or benchmarks. Summative assessment includes midterm exams, final projects, papers, teacher-designed tests, standardized tests, and high-stakes tests.

States, J., Detrich, R. & Keyworth, R. (2018). Overview of Summative Assessment. Oakland, CA: The Wing Institute. https://www.winginstitute.org/assessment-summative