Assessment

Overview

Research recognizes the power of assessment to amplify learning and skill acquisition. Assessing students is a fundamental ingredient of effective teaching. It is the tool that enables teachers to measure the extent to which a student or group of students has mastered the material taught in a lesson, in a class, or during the school year, and it gives instructors the information they need to modify instruction when progress falters. Assessment affects decisions about grades, placement, advancement, instructional strategies, curriculum, special education placement, and funding. It works to improve instruction in the following ways: (1) as a diagnostic tool, (2) by providing feedback on progress measured against benchmarks, (3) as a motivating factor, and (4) as an accountability instrument for improving systems.

Teachers routinely make critical decisions to tailor instruction to the needs of students. Research suggests that, on a daily basis, teachers make between 1,000 and 1,500 student-related instructional decisions that impact learning (Jackson, 1990). Assessment gives educators the knowledge to make these decisions in an informed way. Some seem small at the time and others are high stakes, and they may have a major influence on a child’s success in life.

Educators generally rely on two types of assessment: informal and systematic. Informal assessment, the most common and frequently applied form, is derived from teachers’ daily interactions and observations of how students behave and perform in school. This type of assessment includes incidental observations, teacher-constructed tests and quizzes, grades, and portfolios, and relies heavily on a teacher’s professional judgment. The primary weaknesses of informal assessment are issues of validity and reliability (AERA, 1999). Since schools began, teachers have depended predominantly on informal assessment. Teachers easily form judgments about students and their performance. Although many of these judgments help teachers understand where students stand in regard to lessons, a meaningful percentage result in false understandings and conclusions. That is why it is so important for teachers to adopt assessment procedures that are valid (they appraise what they claim to appraise) and reliable (they provide information that can be replicated).
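Reliability in the sense used here, information that can be replicated, is often summarized as the correlation between two administrations of the same assessment. The following is a minimal sketch under that assumption. The function name and the scores are hypothetical, and test-retest correlation is only one of several ways reliability can be estimated.

```python
from math import sqrt
from statistics import mean


def pearson_r(first, second):
    """Pearson correlation between two equally long lists of scores."""
    mean_first, mean_second = mean(first), mean(second)
    # Sum of cross-products of deviations from each mean.
    cross = sum((a - mean_first) * (b - mean_second) for a, b in zip(first, second))
    # Square roots of the summed squared deviations for each list.
    spread_first = sqrt(sum((a - mean_first) ** 2 for a in first))
    spread_second = sqrt(sum((b - mean_second) ** 2 for b in second))
    return cross / (spread_first * spread_second)


# Hypothetical scores for the same five students on two administrations
# of one quiz, invented for illustration only.
week_1 = [12, 15, 9, 18, 14]
week_2 = [13, 14, 10, 17, 15]
print(round(pearson_r(week_1, week_2), 2))
```

A value near 1 would suggest that the assessment ranks students consistently across administrations. A low value would suggest that its results are hard to replicate.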

Systematic assessment is specifically designed to minimize bias and to increase validity and reliability, thus providing teachers and other educators with the most accurate information to maximize student achievement. This type of assessment uses preplanned tools designed to identify objectively how well a student has progressed and to reveal what students have learned in relation to other students and against predetermined standards. Educators depend on two forms of systematic assessment: formative and summative. Formative assessment is used as students are learning, and summative assessment happens at the end of instruction.

Formative assessment has the greatest effect on an individual student’s performance, functioning as a problem-solving mechanism that helps teachers pinpoint impediments to learning and offers clues for adapting teaching to reduce student failure. In contrast, summative assessment is best used at the conclusion of an instructional period to evaluate learning against standards.

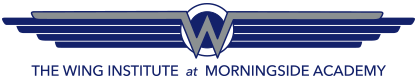

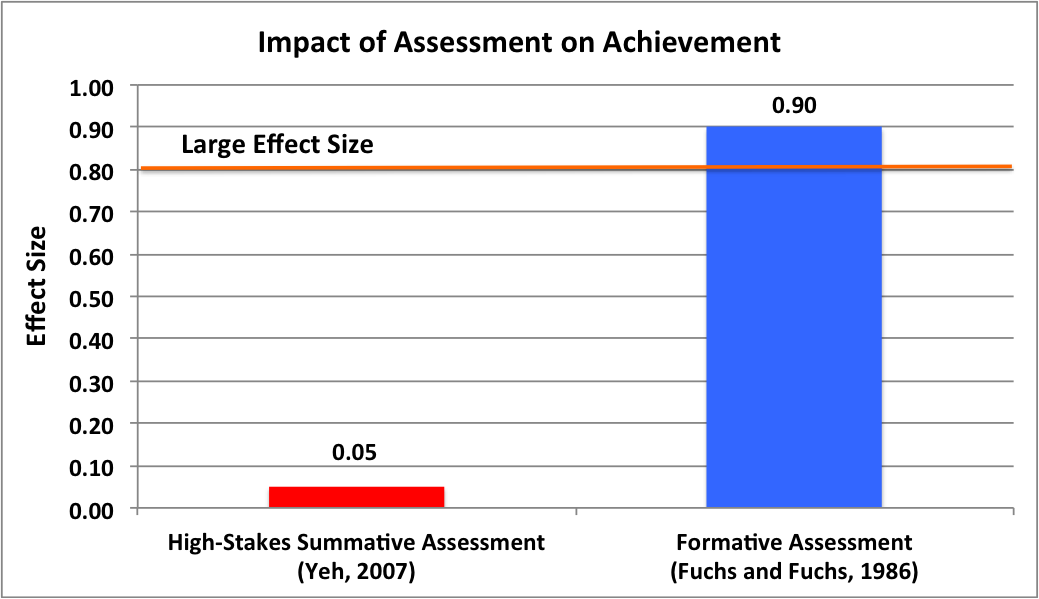

Figure 1 examines the relative impact of formative and high-stakes summative assessment on student achievement. Research shows a clear advantage for the use of formative assessment for improving student performance.

Figure 1. Comparison of formative and summative assessment impact on student achievement

Formative Assessment

For teachers, few skills are as important or powerful as formative assessment. Also known as progress monitoring and rapid assessment, formative assessment allows teachers to quickly determine whether individual students are progressing at acceptable rates and provides insight into where and how to modify and adapt lessons, with the goal of making sure that all students are progressing satisfactorily. It is the process of using frequent and ongoing feedback on student performance to gain insight into how to adjust instruction to maximize learning. The assessment data are used to verify student progress and as an indicator to adjust interventions when insufficient progress has been made (VanDerHeyden, 2013). Over the past 30 years, research has found formative assessment to be effective in typical classroom settings. The practice has shown power across student ages, treatment durations, and frequencies of measurement, and with students with special needs (Hattie, 2009).

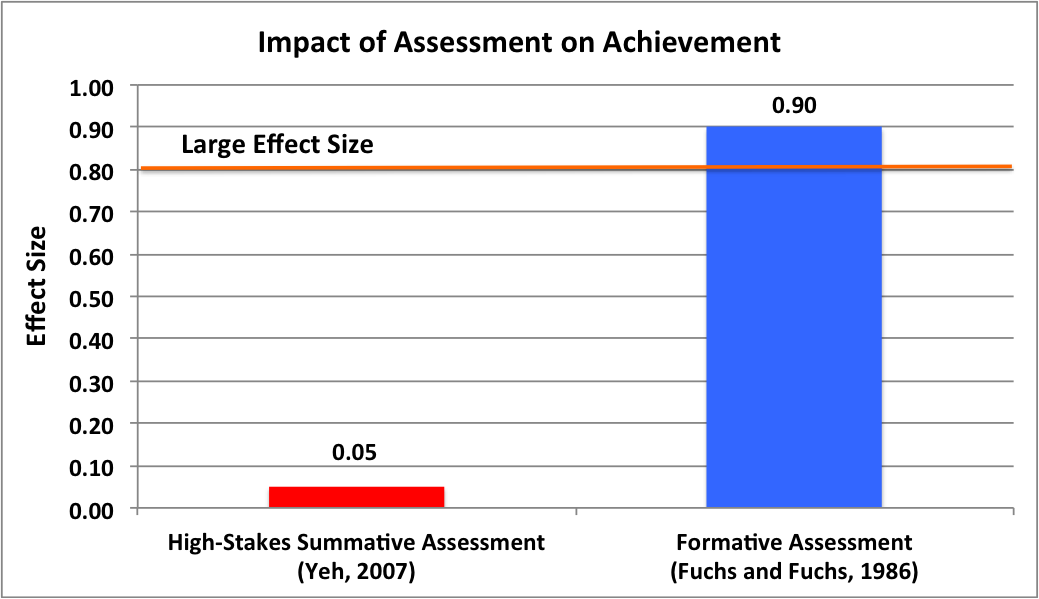

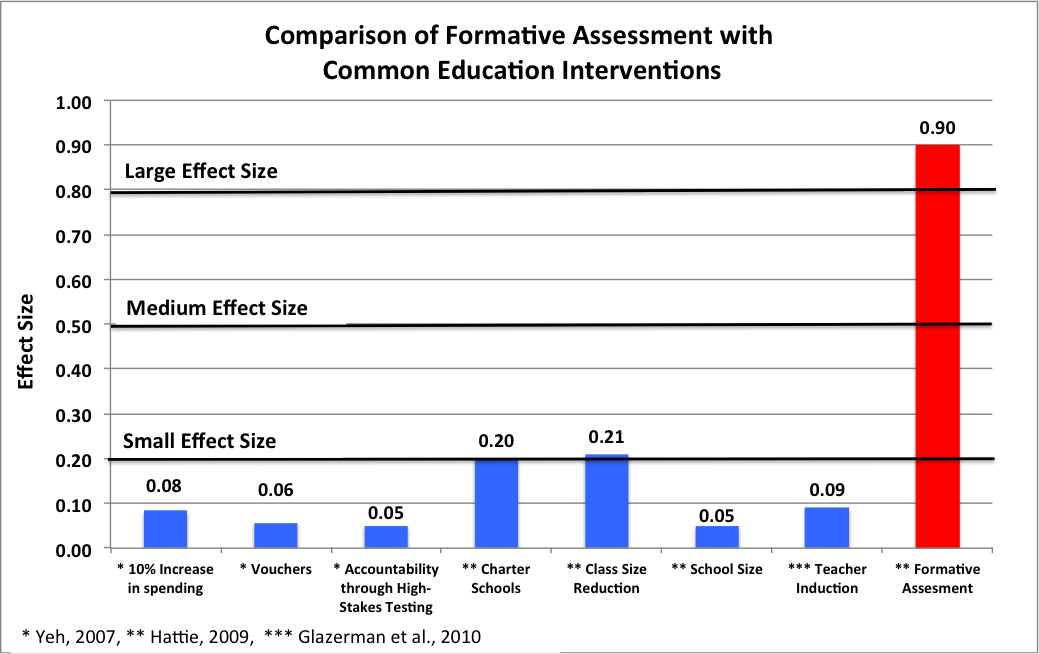

The relative power of formative assessment can be seen in Figure 2, which compares the practice with seven common education interventions (Glazerman et al., 2010; Hattie, 2009; Yeh, 2007). This comparison reveals that none of the seven interventions rises much above a small effect size, whereas formative assessment’s large effect size of 0.90 shows its sizable impact on achievement.

Figure 2. Comparing formative assessment with commonly adopted education interventions
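Effect sizes such as the 0.90 reported for formative assessment are typically expressed as standardized mean differences. As a rough illustration only, the sketch below computes one common version, Cohen's d with a pooled standard deviation, for two small groups of scores. The function name and the scores are hypothetical and are not drawn from the cited studies, which may use different estimators.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference using a pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)  # sample standard deviations
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical end-of-unit scores, invented for illustration only.
with_formative = [78, 85, 90, 72, 88]
without_formative = [70, 80, 84, 68, 79]
print(round(cohens_d(with_formative, without_formative), 2))
```

By Cohen's conventional benchmarks, values around 0.2 are read as small, 0.5 as medium, and 0.8 or more as large, which is why an effect size of 0.90 is described as large.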

Summative Assessment

Summative assessment is an appraisal of learning at the end of an instructional unit or at a specific point in time. It compares student knowledge or skills with standards or benchmarks. Summative assessment evaluates the mastery of learning. Generally, it gauges how a particular population rather than an individual responds to an intervention. It often aggregates data across students to act as an independent yardstick that allows teachers, administrators, and parents to judge the effectiveness of the materials, curriculum, and instruction used to meet national, state, or local standards.

This type of assessment includes midterm exams, final projects, papers, teacher-designed tests, standardized tests, and high-stakes tests. Summative assessment is used to determine at a particular point in time what students know and do not know. Because it occurs at the end of a period, it is of little value as a diagnostic tool for enhancing an individual student’s performance during the school year, but it does play a pivotal role in troubleshooting weaknesses in an education system.

Summative assessment is most often associated with standardized tests such as state achievement assessments, but it is also commonly used by teachers to assess the overall progress of students when determining grades. As a subset of summative assessment, standardized tests play a critical role in ensuring that schools are held to the same standards and that all students, regardless of race or socioeconomic background, perform to expectations. Summative assessment provides educators with metrics to know what is working and what is not.

Since the advent of No Child Left Behind, summative assessment has increasingly been used to hold schools and teachers accountable for student progress. This has led to concerns among many educators that schools place too great an emphasis on instruction that will result in higher achievement scores. In many districts, standardized tests have become the single most important indicator of teacher and school performance. A consequence of this overemphasis is the phenomenon known as “teaching to the test.” The concern is that an exclusive focus on material covered on a standardized test will be accompanied by a corresponding neglect of the broader curriculum. The emphasis on standardized tests has also led to a notable increase in the number of standardized tests given each year (Hart et al., 2015). Teachers and administrators feel enormous pressure to ensure that test scores rise consistently. Educators have also expressed concern that excess standardized testing limits the available time for instruction.

Parents, teachers, school administrators, and legislators have begun to voice frustration with the weight assigned to standardized tests and with the growth in the number administered each year in public schools. In 2015, resistance in Boulder, Colorado, schools resulted in zero students participating in testing in some schools. Similar protests against standardized testing have popped up across the nation over the past 5 years, leading to demands to bring balance back to the system.

Summary

Formative assessment and summative assessment play important but very different roles in an effective model of education. Both are integral to gathering the information necessary for maximizing student success. In a balanced system, both are essential, but each needs to be used for the purpose for which it was designed.

Citations

Fuchs, L. S., & Fuchs, D. (1986). Effects of systematic formative evaluation: A meta-analysis. Exceptional Children, 53(3), 199–208.

Glazerman, S., Isenberg, E., Dolfin, S., Bleeker, M., Johnson, A., Grider, M., & Jacobus, M. (2010). Impacts of comprehensive teacher induction: Final results from a randomized controlled study. (NCEE 2010-4027.) Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education.

Hart, R., Casserly, M., Uzzell, R., Palacios, M., Corcoran, A., & Spurgeon, L. (2015). Student testing in America's great city schools: An inventory and preliminary analysis. Washington, DC: Council of the Great City Schools.

Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. New York, NY: Routledge.

Jackson, P. W. (1990). Life in classrooms. New York, NY: Teachers College Press.

Joint Committee on Standards for the Educational and Psychological Testing of the American Educational Research Association, American Psychological Association, and National Council on Measurement in Education. (1999). Standards for educational and psychological testing. Washington, DC: American Educational Research Association (AERA).

VanDerHeyden, A. (2013). Are we making the differences that matter in education? In R. Detrich, R. Keyworth, & J. States (Eds.), Advances in evidence-based education: Vol 3. Performance feedback: Using data to improve educator performance (pp. 119–138). Oakland, CA: The Wing Institute. http://www.winginstitute.org/uploads/docs/Vol3Ch4.pdf

Yeh, S. S. (2007). The cost-effectiveness of five policies for improving student achievement. American Journal of Evaluation, 28(4), 416–436.

Publications: title, synopsis, and citation

Assessment Overview

Research recognizes the power of assessment to amplify learning and skill acquisition. This overview describes and compares two types of assessment educators rely on: formative assessment and summative assessment.

Functional assessment of the academic environment.

Discusses the relationship between academic performance or achievement and classroom environment factors. Classroom variables are conceptualized as an academic ecology that involves a network of relationships among student and environmental factors that affect the acquisition of new skills and student engagement in academic work.

Lentz, F. E., & Shapiro, E. S. (1986). Functional assessment of the academic environment. School Psychology Review, 15(3), 346-357.

Introduction: Proceedings from the Wing Institute’s Sixth Annual Summit on Evidence-Based Education: Performance Feedback: Using Data to Improve Educator Performance.

This book is compiled from the proceedings of the sixth summit entitled “Performance Feedback: Using Data to Improve Educator Performance.” The 2011 summit topic was selected to help answer the following question: What basic practice has the potential for the greatest impact on changing the behavior of students, teachers, and school administrative personnel?

States, J., Keyworth, R. & Detrich, R. (2013). Introduction: Proceedings from the Wing Institute’s Sixth Annual Summit on Evidence-Based Education: Performance Feedback: Using Data to Improve Educator Performance. In Education at the Crossroads: The State of Teacher Preparation (Vol. 3, pp. ix-xii). Oakland, CA: The Wing Institute.

A Synthesis of Quantitative Research on Reading Programs for Secondary Students

This review of the research on secondary reading programs focuses on 69 studies that used random assignment (n=62) or high-quality quasi-experiments (n=7) to evaluate outcomes of 51 programs on widely accepted measures of reading. The study found that programs using one-to-one and small-group tutoring (+0.14 to +0.28 effect size), cooperative learning (+0.10 effect size), whole-school approaches including organizational reforms such as teacher teams (+0.06 effect size), and writing-focused approaches (+0.13 effect size) showed positive outcomes. Individual approaches in a few other categories also showed positive impacts. The findings are important because they suggest interventions for secondary readers that can improve struggling students’ chances of experiencing greater success in high school and better opportunities after graduation.

Baye, A., Lake, C., Inns, A., & Slavin, R. E. (2018, January). A Synthesis of Quantitative Research on Reading Programs for Secondary Students. Baltimore, MD: Johns Hopkins University, Center for Research and Reform in Education.

Teachers’ subject matter knowledge as a teacher qualification: A synthesis of the quantitative literature on students’ mathematics achievement

The main focus of this study is to find different kinds of variables that might contribute to variations in the strength and direction of the relationship by examining quantitative studies that relate mathematics teachers’ subject matter knowledge to student achievement in mathematics.

Ahn, S., & Choi, J. (2004). Teachers' Subject Matter Knowledge as a Teacher Qualification: A Synthesis of the Quantitative Literature on Students' Mathematics Achievement. Online Submission.

School-wide PBIS Tiered Fidelity Inventory

The purpose of the SWPBIS Tiered Fidelity Inventory is to provide a valid, reliable, and efficient measure of the extent to which school personnel are applying the core features of school-wide positive behavioral interventions and supports. The TFI is divided into three sections that can be used separately or in combination to assess the extent to which core features are in place.

Algozzine, B., Barrett, S., Eber, L., George, H., Horner, R., Lewis, T., Putnam, B., Swain-Bradway, J., McIntosh, K., & Sugai, G. (2014). School-wide PBIS Tiered Fidelity Inventory. OSEP Technical Assistance Center on Positive Behavioral Interventions and Supports.

Observations of effective teacher-student interactions in secondary school classrooms: Predicting student achievement with the classroom assessment scoring system–secondary

Multilevel modeling techniques were used with a sample of 643 students enrolled in 37 secondary school classrooms to predict future student achievement (controlling for baseline achievement) from observed teacher interactions with students in the classroom, coded using the Classroom Assessment Scoring System—Secondary.

Allen, J., Gregory, A., Mikami, A., Lun, J., Hamre, B., & Pianta, R. (2013). Observations of effective teacher–student interactions in secondary school classrooms: Predicting student achievement with the classroom assessment scoring system—secondary. School Psychology Review, 42(1), 76.

Introduction to measurement theory

The authors effectively cover the construction of psychological tests and the interpretation of test scores and scales; critically examine classical true-score theory; and explain theoretical assumptions and modern measurement models, controversies, and developments.

Allen, M. J., & Yen, W. M. (2001). Introduction to measurement theory. Waveland Press.

Standards for educational and psychological testing

The “Standards for Educational and Psychological Testing” were approved as APA policy by the APA Council of Representatives in August 2013.

American Educational Research Association, American Psychological Association, Joint Committee on Standards for Educational, Psychological Testing (US), & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. American Educational Research Association.

The Impact of High-Stakes Tests on Student Academic Performance

The purpose of this study is to assess whether academic achievement in fact increases after the introduction of high-stakes tests. The first objective of this study is to assess whether academic achievement has improved since the introduction of high-stakes testing policies in the 27 states with the highest stakes written into their grade 1-8 testing policies.

Amrein-Beardsley, A., & Berliner, D. C. (2002). The Impact of High-Stakes Tests on Student Academic Performance.

Functional behavior assessment in schools: Current status and future directions

Functional behavior assessment is becoming a commonly used practice in school settings. Accompanying this growth has been an increase in research on functional behavior assessment. We reviewed the extant literature on documenting indirect and direct methods of functional behavior assessment in school settings.

Anderson, C. M., Rodriguez, B. J., & Campbell, A. (2015). Functional behavior assessment in schools: Current status and future directions. Journal of Behavioral Education, 24(3), 338-371.

Applying Positive Behavior Support and Functional Behavioral Assessments in Schools

The purposes of this article are to describe (a) the context in which PBS and FBA are needed and (b) definitions and features of PBS and FBA.

applying positive behavior support

Beyond Standardized Testing: Assessing Authentic Academic Achievement in the Secondary School.

This book was designed as an assessment of standardized testing and its alternatives at the secondary school level.

Archbald, D. A., & Newmann, F. M. (1988). Beyond standardized testing: Assessing authentic academic achievement in the secondary school.

Functional behavioral assessment: A school based model

This paper describes a comprehensive model for the application of behavior analysis in the school. The model includes descriptive assessment, functional analysis, functional behavioral assessment, schools, in-home, problematic behavior.

Asmus, J. M., Vollmer, T. R., & Borrero, J. C. (2002). Functional behavioral assessment: A school based model. Education & Treatment of Children, 25(1), 67.

The need for assessment of maintaining variables in OBM

The authors describe three forms of functional assessment used in applied behavior analysis and explain three potential reasons why OBM has not yet adopted the use of such techniques.

Austin, J., Carr, J. E., & Agnew, J. L. (1999). The need for assessment of maintaining variables in OBM. Journal of Organizational Behavior Management, 19(2), 59-87.

Effectiveness of frequent testing over achievement: A meta analysis study

Through a meta-analysis of 78 studies, this study aims to determine the overall effect size for testing at different frequency levels and to identify other study characteristics related to the effectiveness of frequent testing.

Başol, G., & Johanson, G. (2009). Effectiveness of frequent testing over achievement: A meta analysis study. Journal of Human Sciences, 6(2), 99-121.

The Instructional Effect of Feedback in Test-Like Events

Feedback is an essential construct for many theories of learning and instruction, and an understanding of the conditions for effective feedback should facilitate both theoretical development and instructional practice.

Bangert-Drowns, R. L., Kulik, C. L. C., Kulik, J. A., & Morgan, M. (1991). The instructional effect of feedback in test-like events. Review of educational research, 61(2), 213-238.

An Evaluation of the Research Evidence on the Early Start Denver Model

The Early Start Denver Model (ESDM) has been gaining popularity as a comprehensive treatment model for children ages 12 to 60 months with autism spectrum disorders (ASD). This article evaluates the research on the ESDM through an analysis of study design and purpose; child participants; setting, intervention agents, and context; density and duration; and overall research rigor to assist professionals with knowledge translation and decisions around adoption of the practices.

Baril, E. M., & Humphreys, B. P. (2017). An evaluation of the research evidence on the Early Start Denver Model. Journal of Early Intervention, 39(4), 321-338.

Changing the Landscape of Social Emotional Learning in Urban Schools: What are We Currently Focusing On and Where Do We Go from Here?

This study provides a systematic review of the use of social emotional learning (SEL) interventions in urban schools over the last 20 years. I summarize the types of interventions used and the outcomes examined, and I describe the use of culturally responsive pedagogy as a part of each intervention.

Barnes, T. N. (2019). Changing the landscape of social emotional learning in urban schools: What are we currently focusing on and where do we go from here? The Urban Review, 51(4), 599-637.

Professional judgment: A critical appraisal.

Professional judgment is required whenever conditions are uncertain. This article provides an analysis of professional judgment and describes sources of error in decision making.

Barnett, D. W. (1988). Professional judgment: A critical appraisal. School Psychology Review, 17(4), 658-672.

The promise of meaningful eligibility determination: Functional intervention-based multifactored preschool evaluation

The authors describe minimal requirements for functional intervention-based assessment and suggest strategies for using these methods to analyze developmental delays and make special service eligibility decisions for preschool children (intervention-based multifactored evaluation or IBMFE).

Barnett, D. W., Bell, S. H., Gilkey, C. M., Lentz Jr, F. E., Graden, J. L., Stone, C. M., ... & Macmann, G. M. (1999). The promise of meaningful eligibility determination: Functional intervention-based multifactored preschool evaluation. The Journal of Special Education, 33(2), 112-124.

Psychometric qualities of professional practice.

This chapter is intended to help solve questions concerning how to judge the appropriateness of assessment or measurement decisions and of the information used to make decisions.

Barnett, D. W., Lentz Jr, F. E., & Macmann, G. (2000). Psychometric qualities of professional practice.

Seeing Students Learn Science: Integrating Assessment and Instruction in the Classroom (2017)

Seeing Students Learn Science is a guidebook meant to help educators improve the way in which students learn science. The introduction of new science standards across the nation has led to the adoption of new curricula, instruction, and professional development to align with the new standards. This publication is designed as a resource for educators to adapt assessment to these changes. It includes examples of innovative assessment formats, ways to embed assessments in engaging classroom activities, and ideas for interpreting and using novel kinds of assessment information.

Beatty, A., Schweingruber, H., & National Academies of Sciences, Engineering, and Medicine. (2017). Seeing Students Learn Science: Integrating Assessment and Instruction in the Classroom. Washington, DC: National Academies Press.

High impact teaching for sport and exercise psychology educators.

Just as an athlete needs effective practice to be able to compete at high levels of performance, students benefit from formative practice and feedback to master skills and content in a course. At the most complex and challenging end of the spectrum of summative assessment techniques, the portfolio involves a collection of artifacts of student learning organized around a particular learning outcome.

Beers, M. J. (2020). Playing like you practice: Formative and summative techniques to assess student learning. High impact teaching for sport and exercise psychology educators, 92-102.

Predicting Success in College: The Importance of Placement Tests and High School Transcripts.

This paper uses student-level data from a statewide community college system to examine the validity of placement tests and high school information in predicting course grades and college performance.

Belfield, C. R., & Crosta, P. M. (2012). Predicting Success in College: The Importance of Placement Tests and High School Transcripts. CCRC Working Paper No. 42. Community College Research Center, Columbia University.

Peer micronorms in the assessment of young children: Methodological review and examples

This paper outlines the rationale, critical dimensions, and techniques for using peer micronorms and discusses technical adequacy considerations.

Bell, S. H., & Barnett, D. W. (1999). Peer micronorms in the assessment of young children: Methodological review and examples. Topics in Early Childhood Special Education, 19(2), 112-122.

What Does the Research Say About Testing?

Since the passage of the No Child Left Behind Act (NCLB) in 2002 and its 2015 update, the Every Student Succeeds Act (ESSA), every third through eighth grader in U.S. public schools has taken tests calibrated to state standards, with the aggregate results made public. In a study of the nation’s largest urban school districts, students took an average of 112 standardized tests between pre-K and grade 12.

Berwick, C. (2019). What Does the Research Say About Testing? Marin County, CA: Edutopia.

The effect of charter schools on student achievement.

Assessing literature that uses either experimental (lottery) or student-level growth-based methods, this analysis infers the causal impact of attending a charter school on student performance.

Betts, J. R., & Tang, Y. E. (2019). The effect of charter schools on student achievement. School choice at the crossroads: Research perspectives, 67-89.

Assessing The Value-Added Effects Of Literacy Collaborative Professional Development On Student Learning

This article reports on a 4-year longitudinal study of the effects of Literacy Collaborative (LC), a school-wide reform model that relies primarily on the one-on-one coaching of teachers as a lever for improving student literacy learning.

Biancarosa, G., Bryk, A. S., & Dexter, E. R. (2010). Assessing the value-added effects of literacy collaborative professional development on student learning. The elementary school journal, 111(1), 7-34.

Assessment and classroom learning

This is a review of the literature on classroom formative assessment. Several studies show firm evidence that innovations designed to strengthen the frequent feedback that students receive about their learning yield substantial learning gains.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: principles, policy & practice, 5(1), 7-74.

Inside the black box: Raising standards through classroom assessment

Firm evidence shows that formative assessment is an essential component of classroom work and that its development can raise standards of achievement, Mr. Black and Mr. Wiliam point out. Indeed, they know of no other way of raising standards for which such a strong prima facie case can be made.

Black, P., & Wiliam, D. (2010). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappan, 92(1), 81-90.

Human characteristics and school learning

This paper theorizes that variations in learning and the level of learning of students are determined by the students' learning histories and the quality of instruction they receive.

Bloom, B. (1976). Human characteristics and school learning. New York: McGraw-Hill.

Developing effective assessment in higher education: A practical guide

Research and experience tell us very forcefully about the importance of assessment in higher education. It shapes the experience of students and influences their behaviour more than the teaching they receive. The influence of assessment means that ‘there is more leverage to improve teaching through changing assessment than there is in changing anything else’.

Bloxham, S., & Boyd, P. (2007). Developing Effective Assessment in Higher Education: a practical guide.

School Composition and the Black-White Achievement Gap.

This NCES study explores public schools' demographic composition, in particular, the proportion of Black students enrolled in schools (also referred to as "Black student density" in schools) and its relation to the Black-White achievement gap. This study, the first of its kind, used the 2011 NAEP grade 8 mathematics assessment data. Among the results highlighted in the report, the study indicates that the achievement gap between Black and White students remains whether schools fall in the highest density category or the lowest density category.

Bohrnstedt, G., Kitmitto, S., Ogut, B., Sherman, D., and Chan, D. (2015). School Composition and the Black–White Achievement Gap (NCES 2015-018). U.S. Department of Education, Washington, DC: National Center for Education Statistics. Retrieved from http://nces.ed.gov/pubsearch.

Educational Measurement

This fourth edition provides in-depth treatments of critical measurement topics, and the chapter authors are acknowledged experts in their respective fields.

Brennan, R. L. (Ed.) (2006). Educational measurement (4th ed.). Westport, CT: Praeger Publishers.

STATE END-OF-COURSE TESTING PROGRAMS: A Policy Brief

In recent years the number of states that have adopted or plan to implement end of course (EOC) tests as part of their high school assessment program has grown rapidly. While EOC tests certainly offer great promise, they are not without challenges. Many of the proposed uses of EOC tests open new and often complex issues related to design and implementation. The purpose of this brief is to support education leaders and policy makers in making appropriate technical and operational decisions to maximize the benefit of EOC tests and address the challenges.

Brief, A. P. (2011). State End-of-Course Testing Programs.

The debate about rewards and intrinsic motivation: Protests and accusations do not alter the results.

Cameron, J., & Pierce, W. D. (1996). The debate about rewards and intrinsic motivation: Protests and accusations do not alter the results. Review of Educational Research, 66(1), 39–51.

Principal Concerns: Leadership Data and Strategies for States.

This report tells policymakers what metrics they must track in order to make the best decisions regarding the supply and training of school leaders.

Campbell, C., & Gross, B. (2012). Principal Concerns: Leadership Data and Strategies for States. Center on Reinventing Public Education.

National board certification and teacher effectiveness: Evidence from a random assignment experiment

The National Board for Professional Teaching Standards (NBPTS) assesses teaching practice based on videos and essays submitted by teachers. The authors compared the performance of classrooms of elementary students in Los Angeles randomly assigned to NBPTS applicants and to comparison teachers.

Cantrell, S., Fullerton, J., Kane, T. J., & Staiger, D. O. (2008). National board certification and teacher effectiveness: Evidence from a random assignment experiment (No. w14608). National Bureau of Economic Research.

Does external accountability affect student outcomes? A cross-state analysis.

This study developed a zero-to-five index of the strength of accountability in 50 states based on the use of high-stakes testing to sanction and reward schools, and analyzed whether that index is related to student gains on the NAEP mathematics test in 1996–2000.

Carnoy, M., & Loeb, S. (2002). Does external accountability affect student outcomes? A cross-state analysis. Educational Evaluation and Policy Analysis, 24(4), 305-331.

Seeking the Magic Metric: Using Evidence to Identify and Track School System Quality

This paper discusses the search for a “magic metric” in education: an index or number that would be generally accepted as the most efficient descriptor of a school’s performance in a district.

Celio, M. B. (2013). Seeking the Magic Metric: Using Evidence to Identify and Track School System Quality. In Performance Feedback: Using Data to Improve Educator Performance (Vol. 3, pp. 97-118). Oakland, CA: The Wing Institute.

Buried Treasure: Developing a Management Guide From Mountains of School Data

This report provides a practical “management guide” for an evidence-based key indicator data decision system for school districts and schools.

Celio, M. B., & Harvey, J. (2005). Buried Treasure: Developing A Management Guide From Mountains of School Data. Center on Reinventing Public Education.

Distributed practice in verbal recall tasks: A review and quantitative synthesis.

A meta-analysis of the distributed practice effect was performed to illuminate the effects of temporal variables that have been neglected in previous reviews. This review found 839 assessments of distributed practice in 317 experiments located in 184 articles.

Cepeda, N. J., Pashler, H., Vul, E., Wixted, J. T., & Rohrer, D. (2006). Distributed practice in verbal recall tasks: A review and quantitative synthesis. Psychological Bulletin, 132(3), 354.

The 2018 EdNext poll on school reform

Few issues engender stronger opinions in the American population than education, and the number and complexity of issues continue to grow. The annual Education Next Survey of Public Opinion examines the opinions of parents and teachers across a wide range of topic areas, such as student performance, common core curriculum, charter schools, school choice, teacher salaries, school spending, and school reform. The 12th annual survey was completed in May 2018.

Cheng, A., Henderson, M. B., Peterson, P.E. & West, M. R. (2019). The 2018 EdNext poll on school reform. Education Next, 19(1).

Do Published Studies Yield Larger Effect Sizes than Unpublished Studies in Education and Special Education? A Meta-Review

The purpose of this study is to estimate the extent to which publication bias is present in education and special education journals. This paper shows that published studies were associated with significantly larger effect sizes than unpublished studies (d=0.64). The authors suggest that meta-analyses report effect sizes of published and unpublished studies separately in order to address issues of publication bias.

Chow, J. C., & Ekholm, E. (2018). Do Published Studies Yield Larger Effect Sizes than Unpublished Studies in Education and Special Education? A Meta-review.

Scientific practitioner: Assessing student performance: An important change is needed

A rationale and model for changing assessment efforts in schools from simple description to the integration of information from multiple sources for the purpose of designing interventions are described.

Christenson, S. L., & Ysseldyke, J. E. (1989). Scientific practitioner: Assessing student performance: An important change is needed. Journal of School Psychology, 27(4), 409-425.

Meeting urgent national needs in P–12 education: Improving relevance, evidence, and performance in teacher preparation

In the past, the accreditation process put a premium on compliance and included some reporting requirements that did not necessarily lead programs toward excellence or increase teacher candidates' impact on schools and learning. The accreditation process enabled institutions to think they were "done" once they earned accredited status, and did not do enough to encourage programs to tackle complex challenges confronting P-12 schools.

Cibulka, J. G. (2009). Improving relevance, evidence, and performance in teacher preparation. The Education Digest, 75(2), 44.

Discussion Sections in Reports of Controlled Trials Published in General Medical Journals: Islands in Search of Continents?

This study aims to assess the extent to which reports of RCTs published in five general medical journals have discussed new results in light of all available evidence.

Clarke, M., & Chalmers, I. (1998). Discussion sections in reports of controlled trials published in general medical journals: islands in search of continents?. JAMA, 280(3), 280-282.

Assessment of consultation and intervention implementation: A review of conjoint behavioral consultation studies

Reviews of treatment outcome literature indicate treatment integrity is not regularly assessed. In consultation, two levels of treatment integrity (i.e., consultant procedural integrity [CPI] and intervention treatment integrity [ITI]) provide relevant implementation data.

Collier-Meek, M. A., & Sanetti, L. M. (2014). Assessment of consultation and intervention implementation: A review of conjoint behavioral consultation studies. Journal of Educational and Psychological Consultation, 24(1), 55-73.

Assessing the Implementation of the Good Behavior Game: Comparing Estimates of Adherence, Quality, and Exposure

Treatment fidelity assessment is critical to evaluating the extent to which interventions, such as the Good Behavior Game, are implemented as intended and impact student outcomes. The assessment methods by which treatment fidelity data are collected vary, with direct observation being the most popular and widely recommended.

Collier-Meek, M. A., Fallon, L. M., & DeFouw, E. R. (2020). Assessing the implementation of the Good Behavior Game: Comparing estimates of adherence, quality, and exposure. Assessment for Effective Intervention, 45(2), 95-109.

A descriptive analysis of positive behavioral intervention research with young children with challenging behavior

The purpose of this study was to critically examine the positive approaches to behavioral intervention research and young children demonstrating challenging behavior. The results indicate an increasing trend of research using positive behavioral interventions with young children who demonstrate challenging behaviors. Most of the research has been conducted with children with disabilities between 3 and 6 years old.

Conroy, M. A., Dunlap, G., Clarke, S., & Alter, P. J. (2005). A descriptive analysis of positive behavioral intervention research with young children with challenging behavior. Topics in Early Childhood Special Education, 25(3), 157-166.

National board certification and teacher effectiveness: Evidence from Washington State

We study the effectiveness of teachers certified by the National Board for Professional Teaching Standards (NBPTS) in Washington State, which has one of the largest populations of National Board-Certified Teachers (NBCTs) in the nation. Certification effects vary by subject, grade level, and certification type, with greater effects for middle school math certificates. We find mixed evidence that teachers who pass the assessment are more effective than those who fail, but that the underlying NBPTS assessment score predicts student achievement.

Cowan, J., & Goldhaber, D. (2016). National board certification and teacher effectiveness: Evidence from Washington State. Journal of Research on Educational Effectiveness, 9(3), 233-258.

Assessing student’s participation in the classroom

We know that students should participate constructively in the classroom. In fact, most of us probably agree that a significant portion of a student’s grade should come from his or her participation. However, like many teachers, you may find it difficult to explain to students how you assess their participation.

Craven, J. A., & Hogan, T. (2001). Assessing participation in the classroom. Science Scope, 25(1), 36.

Teaching for the test: How and why test preparation is appropriate

One flashpoint in the incendiary debate over standardized testing in American public schools is the area of test preparation. The focus of this chapter is test preparation in achievement testing and its purportedly harmful effects on students and teachers.

Crocker, L. (2005). Teaching for the test: How and why test preparation is appropriate. Defending standardized testing, 159-174.

Big Data and data science: A critical review of issues for educational research.

This paper examines critical issues that must be considered to maximize the positive impact of big data and minimize negative effects that are currently encountered in other domains. This review is designed to raise awareness of these issues with particular attention paid to implications for educational research design in order that educators can develop the necessary policies and practices to address this complex phenomenon and its possible implications in the field of education.

Daniel, B. K. (2017). Big Data and data science: A critical review of issues for educational research. British Journal of Educational Technology.

Developing and assessing beginning teacher effectiveness: The potential of performance assessments.

The Performance Assessment for California Teachers (PACT) is an authentic tool for evaluating prospective teachers by examining their abilities to plan, teach, assess, and reflect on instruction in actual classroom practice. The PACT seeks both to measure and develop teacher effectiveness, and this study of its predictive and consequential validity provides information on how well it achieves these goals.

Darling-Hammond, L., Newton, S. P., & Wei, R. C. (2013). Developing and assessing beginning teacher effectiveness: The potential of performance assessments. Educational Assessment, Evaluation and Accountability, 25(3), 179-204.

Teacher Learning through Assessment: How Student-Performance Assessments Can Support Teacher Learning

This paper describes how teacher learning through involvement with student-performance assessments has been accomplished in the United States and around the world, particularly in countries that have been recognized for their high-performing educational systems.

Sharing successes and hiding failures: ‘reporting bias’ in learning and teaching research

This paper examines factors that lead to bias and offers specific recommendations to journals, funders, ethics committees, and universities designed to reduce reporting bias.

Dawson, P., & Dawson, S. L. (2018). Sharing successes and hiding failures: ‘reporting bias’ in learning and teaching research. Studies in Higher Education, 43(8), 1405-1416.

Treatment Integrity: Fundamental to Education Reform

To produce better outcomes for students, two things are necessary: (1) effective, scientifically supported interventions and (2) implementation of those interventions with high integrity. Typically, much greater attention has been given to identifying effective practices. This review focuses on features of high-quality implementation.

Detrich, R. (2014). Treatment integrity: Fundamental to education reform. Journal of Cognitive Education and Psychology, 13(2), 258-271.

Using an activity schedule to smooth school transitions

Demonstrates the use of functional assessment procedures to identify the appropriate use of the Picture Exchange Communication System as part of a behavior support plan to resolve serious problems exhibited by a 3-year-old boy with autism and pervasive developmental disorder.

Dooley, P., Wilczenski, F. L., & Torem, C. (2001). Using an activity schedule to smooth school transitions. Journal of Positive Behavior Interventions, 3(1), 57-61.

Functional assessment and program development for problem behavior: a practical handbook

Featuring step-by-step guidance, examples, and forms, this guide to functional assessment procedures provides a first step toward designing positive and educative programs to eliminate serious behavior problems.

O'Neill, R. E., Albin, R. W., Storey, K., Horner, R. H., & Sprague, J. R. (2015). Functional assessment and program development for problem behavior: A practical handbook (3rd ed.). Cengage Learning.

Meta-analysis of the relationship between collective teacher efficacy and student achievement

This meta-analysis systematically synthesized results from 26 component studies, including dissertations and published articles, which reported at least one correlation between collective teacher efficacy and school achievement.

Eells, R. J. (2011). Meta-analysis of the relationship between collective teacher efficacy and student achievement.

Selecting growth measures for school and teacher evaluations: Should proportionality matter?

In this paper we take up the question of model choice and examine three competing approaches. The first approach, the student growth percentiles (SGPs) framework, eschews all controls for student covariates and schooling environments. The second approach, value-added models (VAMs), controls for student background characteristics and under some conditions can be used to identify the causal effects of schools and teachers. The third approach, also VAM-based, fully levels the playing field so that the correlation between school- and teacher-level growth measures and student demographics is essentially zero. We argue that the third approach is the most desirable for use in educational evaluation systems.

Ehlert, M., Koedel, C., Parsons, E., & Podgursky, M. (2013). Selecting growth measures for school and teacher evaluations: Should proportionality matter?. National Center for Analysis of Longitudinal Data in Education Research, 21.

Performance Assessment of Students' Achievement: Research and Practice.

Examines the fundamental characteristics of and reviews empirical research on performance assessment of diverse groups of students, including those with mild disabilities. Discussion of the technical qualities of performance assessment and barriers to its advancement leads to the conclusion that performance assessment should play a supplementary role in the evaluation of students with significant learning problems.

Elliott, S. N. (1998). Performance Assessment of Students' Achievement: Research and Practice. Learning Disabilities Research and Practice, 13(4), 233-41.

The Utility of Curriculum-Based Measurement and Performance Assessment as Alternatives to Traditional Intelligence and Achievement Tests.

Curriculum-based measurement and performance assessments can provide valuable data for making special-education eligibility decisions. Reviews applied research on these assessment approaches and discusses the practical context of treatment validation and decisions about instructional services for students with diverse academic needs.

Elliott, S. N., & Fuchs, L. S. (1997). The Utility of Curriculum-Based Measurement and Performance Assessment as Alternatives to Traditional Intelligence and Achievement Tests. School Psychology Review, 26(2), 224-33.

Holding schools accountable: Is it working?

The theory that measuring performance and coupling it to rewards and sanctions will cause schools and the individuals who work in them to perform at higher levels underpins performance based accountability systems. Such systems are now operating in most states and in thousands of districts, and they represent a significant change from traditional approaches to accountability.

Elmore, R. F., & Fuhrman, S. H. (2001). Holding schools accountable: Is it working?. Phi Delta Kappan, 83(1), 67-72.

A descriptive analysis and critique of the empirical literature on school-based functional assessment

This article provides a wide range of information for 100 articles published from January 1980 through July 1999 that describe the functional assessment (FA) of behavior in school settings.

Ervin, R. A., Radford, P. M., Bertsch, K., & Piper, A. L. (2001). A descriptive analysis and critique of the empirical literature on school-based functional assessment. School Psychology Review, 30(2), 193.

Standardized admission tests, college performance, and campus diversity

A disproportionate reliance on SAT scores in college admissions has generated a growing number and volume of complaints. Some applicants, especially members of underrepresented minority groups, believe that the test is culturally biased. Other critics argue that high school GPA and results on SAT subject tests are better than scores on the SAT reasoning test at predicting college success, as measured by grades in college and college graduation.

Espenshade, T. J., & Chung, C. Y. (2010). Standardized admission tests, college performance, and campus diversity. Office of Population Research, Princeton University.

Every Student Succeeds Act

High-quality assessments are essential to effectively educating students, measuring progress, and promoting equity. Done well and thoughtfully, they provide critical information for educators, families, the public, and students themselves and create the basis for improving outcomes for all learners. Done poorly, in excess, or without clear purpose, however, they take valuable time away from teaching and learning, and may drain creative approaches from our classrooms.

Every Student Succeeds Act. (2017). Assessments under Title I, Part A & Title I, Part B: Summary of final regulations

Implementation Research: A Synthesis of the Literature

This is a comprehensive literature review of the topic of Implementation examining all stages beginning with adoption and ending with sustainability.

Fixsen, D. L., Naoom, S. F., Blase, K. A., & Friedman, R. M. (2005). Implementation research: A synthesis of the literature.

Evidence-Based Assessment of Learning Disabilities in Children and Adolescents

The reliability and validity of 4 approaches to the assessment of children and adolescents with learning disabilities (LD) are reviewed. The authors identify serious psychometric problems that affect the reliability of models based on aptitude-achievement discrepancies and low achievement.

Fletcher, J. M., Francis, D. J., Morris, R. D., & Lyon, G. R. (2005). Evidence-based assessment of learning disabilities in children and adolescents. Journal of Clinical Child and Adolescent Psychology, 34(3), 506-522.

Relation of phonological and orthographic processing to early reading: Comparing two approaches to regression-based, reading-level-match designs.

The authors replicated K. E. Stanovich and L. Siegel's regression-based approach to the reading-level-match design, but contrasted it with statistical matches using W scores, which are Rasch-scaled decoding scores based on a common metric regardless of age or grade.

Foorman, B. R., Francis, D. J., Fletcher, J. M., & Lynn, A. (1996). Relation of phonological and orthographic processing to early reading: Comparing two approaches to regression-based, reading-level-match designs. Journal of Educational Psychology, 88(4), 639.

Responsiveness-to-intervention: A blueprint for practitioners, policymakers, and parents

CASL's general goal is to identify instructional practices that accelerate the learning of K-3 children with disabilities. A specific goal is to identify and understand the nature of nonresponsiveness to generally effective instruction.

Fuchs, D., & Fuchs, L. S. (2005). Responsiveness-to-intervention: A blueprint for practitioners, policymakers, and parents. Teaching Exceptional Children, 38(1), 57-61.

Connecting Performance Assessment to Instruction: A Comparison of Behavioral Assessment, Mastery Learning, Curriculum-Based Measurement, and Performance Assessment.

This digest summarizes principles of performance assessment, which connects classroom assessment to learning. Specific ways that assessment can enhance instruction are outlined, as are criteria that assessments should meet in order to inform instructional decisions. Performance assessment is compared to behavioral assessment, mastery learning, and curriculum-based measurement.

Fuchs, L. S. (1995). Connecting Performance Assessment to Instruction: A Comparison of Behavioral Assessment, Mastery Learning, Curriculum-Based Measurement, and Performance Assessment. ERIC Digest E530.

Assessing Intervention Responsiveness: Conceptual and Technical Issues

In this article, the author uses examples in the literature to explore conceptual and technical issues associated with options for specifying three assessment components.

Fuchs, L. S. (2003). Assessing intervention responsiveness: Conceptual and technical issues. Learning Disabilities Research & Practice, 18(3), 172-186.

Curriculum-based measurement as the emerging alternative: Three decades later

This special issue of Learning Disabilities Research and Practice is dedicated to Dr. Stanley L. Deno. For most of his career, Stan was a Professor of Educational Psychology at the University of Minnesota, where in the mid-1970s, he initiated a systematic program of research on what eventually came to be known as curriculum-based measurement (CBM). Through CBM, Stan’s impact on the field of special education and beyond has been enormous.

Fuchs, L. S. (2017). Curriculum‐based measurement as the emerging alternative: Three decades later. Learning Disabilities Research & Practice.

Effects of Systematic Formative Evaluation: A Meta-Analysis

In this meta-analysis of studies that use formative assessment, the authors report an effect size of 0.7.

Fuchs, L. S., & Fuchs, D. (1986). Effects of Systematic Formative Evaluation: A Meta-Analysis. Exceptional Children, 53(3), 199-208.

A framework for building capacity for responsiveness to intervention

This commentary offers a framework for building capacity for responsiveness to intervention and then considers how the articles constituting this special issue address the various questions posed in the framework.

Fuchs, L. S., & Fuchs, D. (2006). A framework for building capacity for responsiveness to intervention. School Psychology Review, 35(4), 621.

The Effects of Frequent Curriculum-Based Measurement and Evaluation on Pedagogy, Student Achievement, and Student Awareness of Learning

This study examined the educational effects of repeated curriculum-based measurement and evaluation. Thirty-nine special educators, each having three to four pupils in the study, were assigned randomly to a repeated curriculum-based measurement/evaluation (experimental) treatment or a conventional special education evaluation (contrast) treatment.

Fuchs, L. S., Deno, S. L., & Mirkin, P. K. (1984). The effects of frequent curriculum-based measurement and evaluation on pedagogy, student achievement, and student awareness of learning. American Educational Research Journal, 21(2), 449-460.

Strengthening the connection between assessment and instructional planning with expert systems

This article reviews an inductive assessment model for building instructional programs that satisfy the requirement that special education be planned to address an individual student's need.

Fuchs, L. S., Fuchs, D., & Hamlett, C. L. (1994). Strengthening the connection between assessment and instructional planning with expert systems. Exceptional Children, 61(2), 138.

Formative evaluation of academic progress: How much growth can we expect?

The purpose of this study was to examine students' weekly rates of academic growth, or slopes of achievement, when Curriculum-Based Measurement (CBM) is conducted repeatedly over 1 year.

Fuchs, L. S., Fuchs, D., Hamlett, C. L., Walz, L., & Germann, G. (1993). Formative evaluation of academic progress: How much growth can we expect?. School Psychology Review, 22, 27-27.

Mathematics performance assessment in the classroom: Effects on teacher planning and student problem solving

The purpose of this study was to examine effects of classroom-based performance-assessment (PA)-driven instruction.

Fuchs, L. S., Fuchs, D., Karns, K., Hamlett, C. L., & Katzaroff, M. (1999). Mathematics performance assessment in the classroom: Effects on teacher planning and student problem solving. American Educational Research Journal, 36(3), 609-646.

Comparisons among individual and cooperative performance assessments and other measures of mathematics competence

The purposes of this study were to examine how well 3 measures, representing 3 points on a traditional-alternative mathematics assessment continuum, interrelated and discriminated students achieving above, at, and below grade level and to explore effects of cooperative testing for the most innovative measure (performance assessment).

Fuchs, L. S., Fuchs, D., Karns, K., Hamlett, C., Katzaroff, M., & Dutka, S. (1998). Comparisons among individual and cooperative performance assessments and other measures of mathematics competence. The Elementary School Journal, 99(1), 23-51.

Reconciling a tradition of testing with a new learning paradigm

The entrenchment of standardized assessment in America's schools reflects its emergence from the dual traditions of democratic school reform and scientific measurement. Within distinct sociohistorical contexts, ambitious testing pioneers persuaded educators and policymakers to embrace the standardized testing movement.

Gallagher, C. J. (2003). Reconciling a tradition of testing with a new learning paradigm. Educational Psychology Review, 15(1), 83-99.

Formative Assessment As A Tool To Enhance The Development Of Inquiry Skills In Science Education

Formative assessment (FA) is considered a powerful tool to enhance learning. However, there have been few studies addressing how the implementation of FA influences the development of inquiry skills so far. This research intends to determine the efficacy of teaching using FA in the development of students' inquiry skills.

Ganajová, M., Sotakova, I., Lukáč, S., Ješková, Z., Jurkova, V., & Orosová, R. (2021). Formative Assessment As A Tool To Enhance The Development Of Inquiry Skills In Science Education. Journal of Baltic Science Education, 20(2), 204.

The Fundamental Role of Intervention Implementation in Assessing Response to Intervention

This chapter describes some of the critical conceptual issues related to intervention implementation, and provides a selected review of the research regarding the assessment and assurance of intervention implementation.

Gansle, K. A., & Noell, G. H. (2007). The fundamental role of intervention implementation in assessing response to intervention. In Handbook of response to intervention (pp. 244-251). Springer, Boston, MA.

Formative and summative assessments in the classroom

As a classroom teacher or administrator, how do you ensure that the information shared in a student-led conference provides a balanced picture of the student's strengths and weaknesses? The answer to this is to balance both summative and formative classroom assessment practices and information gathering about student learning.

Garrison, C., & Ehringhaus, M. (2007). Formative and summative assessments in the classroom.

A Comparison Study of the Program for International Student Assessment (PISA) 2012 and the National Assessment of Educational Progress (NAEP) 2013 Mathematics Assessments

In the United States, nationally representative data on student achievement come primarily from two sources: the National Assessment of Educational Progress (NAEP), also known as “The Nation’s Report Card,” and U.S. participation in international assessments, including the Program for International Student Assessment (PISA). In summary, this study found many similarities between the two assessments. However, it also found important differences in the relative emphasis across content areas or categories, in the role of context, in the level of complexity, in the degree of mathematizing, in the overall amount of text, and in the use of representations in assessments.

Gattis, K., Kim, Y. Y., Stephens, M., Hall, L. D., Liu, F., & Holmes, J. (2016). A Comparison Study of the Program for International Student Assessment (PISA) 2012 and the National Assessment of Educational Progress (NAEP) 2013 Mathematics Assessments. American Institutes for Research. Retrieved from https://www.air.org/sites/default/files/downloads/report/Comparison-NAEP-PISA-Mathematics-May-2016.pdf

Validity of High-School Grades in Predicting Student Success beyond the Freshman Year: High-School Record vs. Standardized Tests as Indicators of Four-Year College Outcomes

High-school grades are often viewed as an unreliable criterion for college admissions, owing to differences in grading standards across high schools, while standardized tests are seen as methodologically rigorous, providing a more uniform and valid yardstick for assessing student ability and achievement. The present study challenges that conventional view. The study finds that high-school grade point average (HSGPA) is consistently the best predictor not only of freshman grades in college, the outcome indicator most often employed in predictive-validity studies, but of four-year college outcomes as well.

Geiser, S., & Santelices, M. V. (2007). Validity of High-School Grades in Predicting Student Success beyond the Freshman Year: High-School Record vs. Standardized Tests as Indicators of Four-Year College Outcomes. Research & Occasional Paper Series: CSHE.6.07. Center for Studies in Higher Education.

Quality Indicators for Group Experimental and Quasi Experimental Research in Special Education

This article presents quality indicators for experimental and quasi-experimental studies for special education.

Gersten, R., Fuchs, L. S., Compton, D., Coyne, M., Greenwood, C., & Innocenti, M. S. (2005). Quality indicators for group experimental and quasi-experimental research in special education. Exceptional Children, 71(2), 149-164.

It’s official: All states have been excused from statewide testing this year

In one three-week period, a pandemic has completely changed the national landscape on assessment.

Gewertz, C. (2020). It’s official: All states have been excused from statewide testing this year. Education Week.

Conditions Under Which Assessment Supports Students’ Learning

This article focuses on the evaluation of assessment arrangements and the way they affect student learning out of class. It is assumed that assessment has an overwhelming influence on what, how and how much students study.

Gibbs, G., & Simpson, C. (2005). Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education, (1), 3-31.

Are Students with Disabilities Accessing the Curriculum? A Meta-analysis of the Reading Achievement Gap between Students with and without Disabilities.

This meta-analysis examines 23 studies for student access to curriculum by assessing the gap in reading achievement between general education peers and students with disabilities (SWD). The study finds that SWDs performed more than three years below peers. The study looks at the implications for changing this picture and why current policies and practices are not achieving the desired results.

Gilmour, A. F., Fuchs, D., & Wehby, J. H. (2018). Are students with disabilities accessing the curriculum? A meta-analysis of the reading achievement gap between students with and without disabilities. Exceptional Children. Advanced online publication. doi:10.1177/0014402918795830

Impacts of comprehensive teacher induction: Final results from a randomized controlled study

To evaluate the impact of comprehensive teacher induction relative to the usual induction support, the authors conducted a randomized experiment in a set of districts that were not already implementing comprehensive induction.

Glazerman, S., Isenberg, E., Dolfin, S., Bleeker, M., Johnson, A., Grider, M., & Jacobus, M. (2010). Impacts of Comprehensive Teacher Induction: Final Results from a Randomized Controlled Study. NCEE 2010-4027. National Center for Education Evaluation and Regional Assistance.

The four pillars to reading success: An action guide for states

Where, when, and how we teach reading says more about us than it does the students. Explicitly teaching and leveraging the science allows us to overcome our blind spots, assumptions, and biases which impact every aspect of instruction. In doing so, we save us from ourselves and avoid a permanent underclass filling our correctional institutions as prisoners of the ‘reading wars.’

Goldenberg, C., Glaser, D. R., Kame'enui, E. J., Butler, K., Diamond, L., Moats, L., ... & Grimes, S. C. (2020). The Four Pillars to Reading Success: An Action Guide for States. National Council on Teacher Quality.

The Importance and Decision-Making Utility of a Continuum of Fluency-Based Indicators of Foundational Reading Skills for Third-Grade High-Stakes Outcomes

In this article, we examine assessment and accountability in the context of a prevention-oriented assessment and intervention system designed to assess early reading progress formatively.

Good III, R. H., Simmons, D. C., & Kame'enui, E. J. (2001). The importance and decision-making utility of a continuum of fluency-based indicators of foundational reading skills for third-grade high-stakes outcomes. Scientific studies of reading, 5(3), 257-288.

The search for ability: Standardized testing in social perspective

A significant and eye-opening examination of the current state of the testing movement in the United States, where more than 150 million standardized intelligence, aptitude, and achievement tests are administered annually by schools, colleges, business and industrial firms, government agencies, and the military services.

Goslin, D. A. (1963). The search for ability: Standardized testing in social perspective (Vol. 1). Russell Sage Foundation.

Teachers and testing

Discusses the uses and abuses of intelligence testing in our educational systems. Dr. Goslin examines teachers' opinions and practices with regard to tests and finds considerable discrepancies between attitude and behavior.

Goslin, D. A. (1967). Teachers and testing. Russell Sage Foundation.

Research-Based Writing Practices and the Common Core: Meta-analysis and Meta-synthesis

In order to meet writing objectives specified in the Common Core State Standards (CCSS), many teachers need to make significant changes in how writing is taught. While CCSS identified what students need to master, it did not provide guidance on how teachers are to meet these writing benchmarks.

Graham, S., Harris, K. R., & Santangelo, T. (2015). Research-based writing practices and the common core: Meta-analysis and meta-synthesis. The Elementary School Journal, 115(4), 498-522.

What teacher preparation programs teach about K–12 assessment: A review.

This report provides information on the preparation provided to teacher candidates from teacher training programs so that they can fully use assessment data to improve classroom instruction.

Greenberg, J., & Walsh, K. (2012). What Teacher Preparation Programs Teach about K-12 Assessment: A Review. National Council on Teacher Quality.

Teacher prep review: A review of the nation’s teacher preparation programs

We strived to apply the standards uniformly to all the nation’s teacher preparation programs as part of our effort to bring as much transparency as possible to the way America’s teachers are prepared. In collecting information for this initial report, however, we encountered enormous resistance from leaders of many of the programs we sought to assess.

Greenberg, J., McKee, A., & Walsh, K. (2013). Teacher prep review: A review of the nation's teacher preparation programs. Available at SSRN 2353894.

Why the big standardized test is useless for teachers.

In schools throughout the country, it is testing season--time for students to take the Big Standardized Test (the PARCC, SBA, or your state's alternative). This ritual really blossomed way back in the days of No Child Left Behind, but after all these years, teachers are mostly unexcited about it. There are many problems with the testing regimen, but a big issue for classroom teachers is that the tests do not help the teacher do her job.

Greene, P. (2019, April 24). Why the big standardized test is useless for teachers. Forbes.

Schools should scrap the big standardized test this year.

When schools pushed the pandemic pause button last spring, one of the casualties was the annual ritual of taking the Big Standardized Test. There were many reasons to skip the test, but in the end, students simply weren’t in school during the usual testing time.

Greene, P. (2020, August 14). Schools should scrap the big standardized test this year. Forbes.

Measurable change in student performance: Forgotten standard in teacher preparation?

This paper describes a few promising assessment technologies that allow us to capture more direct, repeated, and contextually based measures of student learning, and proposes an improvement-oriented approach to teaching and learning.

Greenwood, C. R., & Maheady, L. (1997). Measurable change in student performance: Forgotten standard in teacher preparation?. Teacher Education and Special Education, 20(3), 265-275.

Assessment of Treatment Integrity in School Consultation and Prereferral Intervention.

Technical issues (specification of treatment components, deviations from treatment protocols and amount of behavior change, and psychometric issues in assessing Treatment Integrity) involved in the measurement of Treatment Integrity are discussed.

Gresham, F. M. (1989). Assessment of treatment integrity in school consultation and prereferral intervention. School Psychology Review, 18(1), 37-50.

Assessing principals’ assessments: Subjective evaluations of teacher effectiveness in low- and high-stakes environments

Teacher effectiveness varies substantially, yet principals’ evaluations of teachers often fail to differentiate performance among teachers. We offer new evidence on principals’ subjective evaluations of their teachers’ effectiveness using two sources of data from a large, urban district: principals’ high-stakes personnel evaluations of teachers, and their low-stakes assessments of a subsample of those teachers provided to the researchers.

Grissom, J. A., & Loeb, S. (2017). Assessing principals’ assessments: Subjective evaluations of teacher effectiveness in low- and high-stakes environments. Education Finance and Policy, 12(3), 369-395.

A meta-analysis of team-efficacy, potency, and performance: Interdependence and level of analysis as moderators of observed relationships.

The purpose of the current study was to test theoretically derived hypotheses regarding the relationships between team efficacy, potency, and performance and to examine the moderating effects of level of analysis and interdependence on observed relationships.

Gully, S. M., Incalcaterra, K. A., Joshi, A., & Beaubien, J. M. (2002). A meta-analysis of team-efficacy, potency, and performance: interdependence and level of analysis as moderators of observed relationships. Journal of applied psychology, 87(5), 819.

No Child Left Behind, Contingencies, and Utah's Alternate Assessment

This article examines the concept of behavioral contingency and describes NCLB as a set of contingencies to promote the use of effective educational practices. The authors then describe their design of an alternate assessment, including the components designed to capitalize on the contingencies of NCLB to promote positive educational outcomes.

Hager, K. D., Slocum, T. A., & Detrich, R. (2007). No Child Left Behind, Contingencies, and Utah’s Alternate Assessment. Journal of Evidence-Based Practices for Schools, 8(1), 63.

Treatment Integrity Assessment: How Estimates of Adherence, Quality, and Exposure Influence Interpretation of Implementation.

This study evaluated the differences in estimates of treatment integrity by measuring different dimensions of it.

Hagermoser Sanetti, L. M., & Fallon, L. M. (2011). Treatment Integrity Assessment: How Estimates of Adherence, Quality, and Exposure Influence Interpretation of Implementation. Journal of Educational & Psychological Consultation, 21(3), 209-232.

Can comprehension be taught? A quantitative synthesis of “metacognitive” studies

To assess the effect of “metacognitive” instruction on reading comprehension, 20 studies, with a total student population of 1,553, were compiled and quantitatively synthesized. In this compilation of studies, metacognitive instruction was found particularly effective for junior high students (seventh and eighth grades). Among the metacognitive skills, awareness of textual inconsistency and the use of self-questioning as both a monitoring and a regulating strategy were most effective. Reinforcement was the most effective teaching strategy.

Haller, E. P., Child, D. A., & Walberg, H. J. (1988). Can comprehension be taught? A quantitative synthesis of “metacognitive” studies. Educational researcher, 17(9), 5-8.

Making sense of test-based accountability in education

Test-based accountability systems that attach high stakes to standardized test results have raised a number of issues on educational assessment and accountability. Do these high-stakes tests measure student achievement accurately? How can policymakers and educators attach the right consequences to the results of these tests? And what kinds of tradeoffs do these testing policies introduce?

Hamilton, L. S., Stecher, B. M., & Klein, S. P. (2002). Making sense of test-based accountability in education. Rand Corporation.

Have states maintained high expectations for student performance?

The Every Student Succeeds Act (ESSA), passed into law in 2015, explicitly prohibits the federal government from creating incentives to set national standards. The law represents a major departure from recent federal initiatives, such as Race to the Top, which beginning in 2009 encouraged the adoption of uniform content standards and expectations for performance.

Hamlin, D., & Peterson, P. E. (2018). Have states maintained high expectations for student performance? An analysis of 2017 state proficiency standards. Education Next, 18(4), 42-49.

College Graduation Statistics

The number of college graduates has steadily increased in the past decade, especially among those earning bachelor’s degrees. Graduation rates have also increased overall, especially at public, 4-year institutions; college graduation statistics suggest greater student success at these institutions.

Hanson, M. (2021). College Graduation Statistics. Educationdata.org.

Does school accountability lead to improved student performance?

The authors' analysis of special education placement rates, a frequently identified area of concern, does not show any responsiveness to the introduction of accountability systems.

Hanushek, E. A., & Raymond, M. E. (2005). Does school accountability lead to improved student performance?. Journal of Policy Analysis and Management: The Journal of the Association for Public Policy Analysis and Management, 24(2), 297-327.

The Value of Smarter Teachers: International Evidence on Teacher Cognitive Skills and Student Performance

This new research addresses a number of critical questions: Are a teacher’s cognitive skills a good predictor of teacher quality? This study examines the student achievement of 36 developed countries in the context of teacher cognitive skills. This study finds substantial differences in teacher cognitive skills across countries that are strongly related to student performance.

Hanushek, E. A., Piopiunik, M., & Wiederhold, S. (2014). The value of smarter teachers: International evidence on teacher cognitive skills and student performance (No. w20727). National Bureau of Economic Research.

Brief hierarchical assessment of potential treatment components with children in an outpatient clinic

Seven parents conducted assessments in an outpatient clinic using a prescribed hierarchy of antecedent and consequence treatment components for their children's problem behavior. Brief assessment of potential treatment components was conducted to identify variables that controlled the children's appropriate behavior.

Harding, J., Wacker, D. P., Cooper, L. J., Millard, T., & Jensen‐Kovalan, P. (1994). Brief hierarchical assessment of potential treatment components with children in an outpatient clinic. Journal of Applied Behavior Analysis, 27(2), 291-300.

Assessment and learning: Differences and relationships between formative and summative assessment

The central argument of this paper is that the formative and summative purposes of assessment have become confused in practice and that as a consequence assessment fails to have a truly formative role in learning.

Harlen, W., & James, M. (1997). Assessment and learning: differences and relationships between formative and summative assessment. Assessment in Education: Principles, Policy & Practice, 4(3), 365-379.

Assessment for Intervention: A Problem-solving Approach

This book provides a complete guide to implementing a wide range of problem-solving assessment methods: functional behavioral assessment, interviews, classroom observations, curriculum-based measurement, rating scales, and cognitive instruments.

Harrison, P. L. (2012). Assessment for intervention: A problem-solving approach. Guilford Press.

Student testing in America’s great city schools: An inventory and preliminary analysis

This report aims to provide the public, along with teachers and leaders in the Great City Schools, with objective evidence about the extent of standardized testing in public schools and how these assessments are used.